AI in Education: How to Do A 6-Month AI Integration in 3 Months to Accelerate Product Delivery

In our previous article, we talked about the AI pre-integration stage in edtech software development: the uncertainty, the planning, and the technical “what ifs” that keep even the most forward-thinking teams stuck. We broke down what holds education companies back and how to approach AI like a product decision, not a side experiment.

This time, we’re going further. This article is the next chapter, a real example of how we turned planning into action.

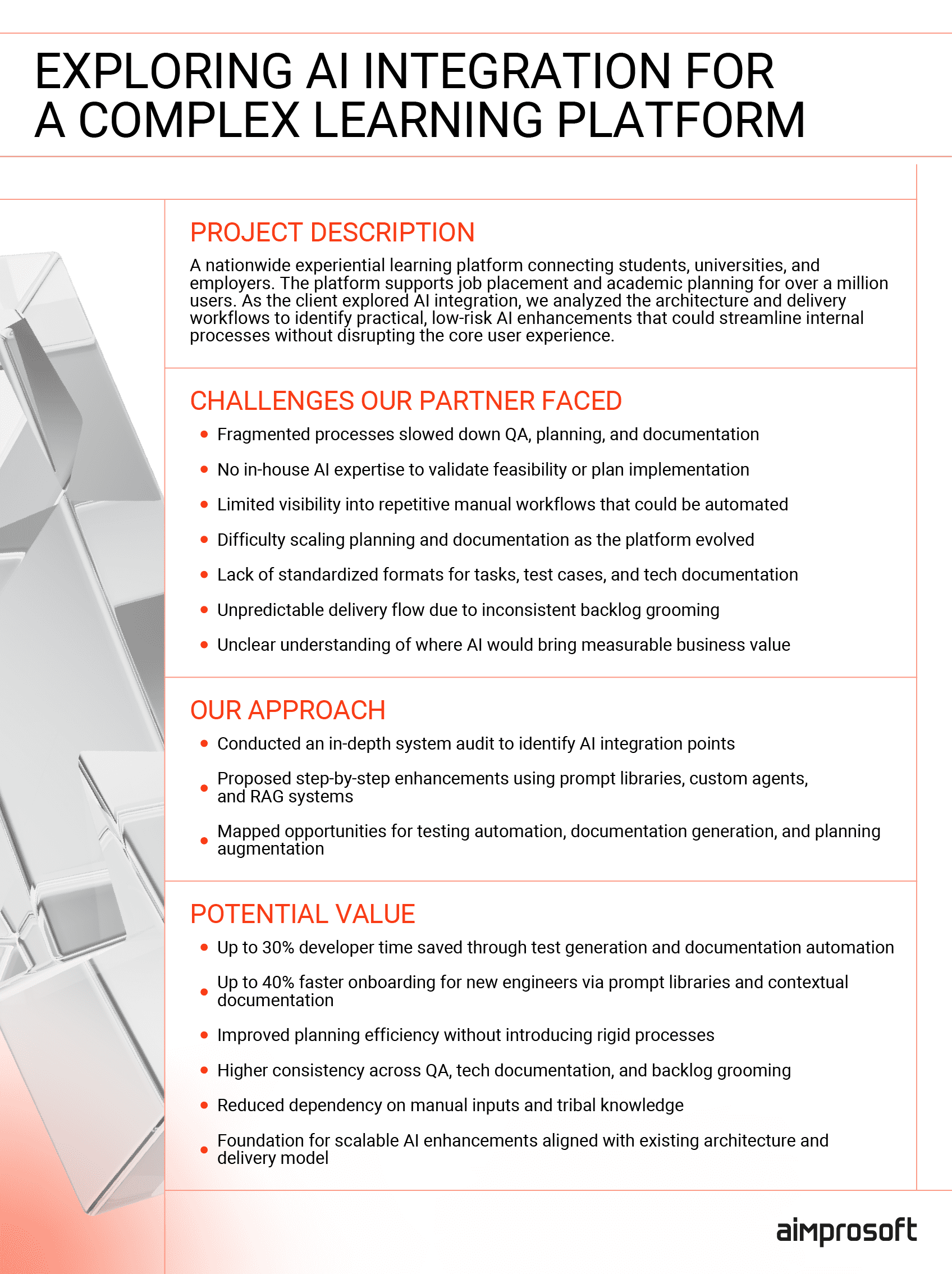

We’ve been working with our client, who runs one of the largest experiential learning platforms, for over five years. Our team has been responsible for modernizing, scaling, and expanding the educational solution, evolving it into a platform used by thousands of students, universities, and employers across the country. The kind of system where any disruption means risk, and every improvement needs to be worth it. So when the opportunity for AI software development came up, it wasn’t about testing ideas. It was about making them work in context, at speed, and without slowing delivery. And in three months, we did.

What follows is a breakdown of how we used our AI integration framework and delivered AI integration in half the average time, embedding automation, streamlining delivery, and creating real business value without slowing the team down. Not a wishlist. Not a concept deck. An actual delivery story built on clear architecture, tight collaboration, and the right use cases — now a successful showcase of how to use AI in software development of educational solutions.

How we approached AI integration for a nationwide education platform

When AI for product development first entered the conversation on our client’s platform, the initial expectations were familiar — personalized learning, automated feedback, maybe a chatbot. Tangible, user-facing features. However, we knew from experience that the most impactful use cases usually live deeper in the system.

Before building anything, we stepped back and examined how the platform already worked, how users interacted with it, how data flowed, and how new features were built. From our perspective, AI in product development isn’t just about adding functionality. It has to align with the existing logic, workflows, and educational goals already in place.

That’s why we didn’t start by asking our client a general question, “What kind of AI do you want to use?” Instead, we began by mapping out the real operational challenges already holding the team back. Here’s what we found:

[Figure: AI integration in eLearning software]

In this case, many of the best opportunities we found were behind the scenes — embedded in test coverage gaps, tribal knowledge, and handoffs between teams. That’s where AI could move the needle the fastest, without disrupting the existing processes.

We approached AI software development as a system-level decision, not a side feature. Each integration was designed to work with the platform’s architecture and delivery rhythm, layering AI into existing workflows instead of introducing friction. We weren’t new to the project; we’d been evolving it for years. By the time we mapped AI integration, we already understood the system inside out, and that’s what made rapid delivery possible.

Turning analysis into action: our 12-week AI integration plan

Once we completed our system analysis and identified high-impact integration zones, the next step was building a clear, realistic implementation roadmap. This wasn’t about flashy AI demos; it was about strategic and responsible AI development by embedding practical tools into the daily workflows of developers, testers, and other team members. Our plan was structured into six focused phases over 12 weeks, each delivering functional outcomes without disrupting the ongoing development lifecycle.

| AI integration roadmap | Stage details |
|---|---|
| Week 1–2: Kickoff and setup | Register OpenAI team API keys; configure Fathom (or other transcription tool); create a prompt template base |
| Week 3–4: Knowledge and documentation | Deploy OpenAI Assistant for summarization; auto-generate READMEs & Swagger from services |
| Week 5–6: Dev loop integration | Hook OpenAI to PR & ticket system; begin PR summarization + test suggestion flow |
| Week 7–8: UI and job matching | Train the matching assistant on feedback; connect Figma → Vue code assistant using Vision |
| Week 9–10: Planning and QA tools | Set up the planning analyzer for BAs/PMs; push testing assistant for QA |
| Week 11–12: Rollout and adoption | Host internal AI demo sessions; share metrics + feedback + iteration plan |

Week 1–2: Kickoff and setup

We began by laying the technical foundation, registering API keys, configuring transcription tools like Fathom, and establishing the initial prompt template library. This base ensured a consistent, secure entry point for future assistants and automation.

Week 3–4: Knowledge and documentation

Next, we deployed OpenAI Assistants to help with summarization and documentation. The system could auto-generate READMEs and Swagger/OpenAPI files directly from existing services, reducing time spent on manual writing and helping capture tribal knowledge.
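
To make the documentation step more concrete, here is a minimal Python sketch of how an OpenAPI skeleton could be assembled once route metadata has been pulled from a service. The `build_openapi` helper, the route dictionary shape, and the sample endpoints are all hypothetical; the actual extraction pipeline is not described here. In a setup like this, an assistant might draft the `summary` text while the document structure stays deterministic.

```python
import json


def build_openapi(title: str, version: str, routes: list[dict]) -> dict:
    """Assemble a minimal OpenAPI 3.0 document from route metadata.

    Each route is a dict with "path", "method", and "summary" keys
    (a hypothetical shape chosen for this sketch).
    """
    paths: dict = {}
    for route in routes:
        operation = {
            "summary": route["summary"],
            # OpenAPI requires at least one response per operation.
            "responses": {"200": {"description": "Successful response"}},
        }
        paths.setdefault(route["path"], {})[route["method"].lower()] = operation
    return {
        "openapi": "3.0.3",
        "info": {"title": title, "version": version},
        "paths": paths,
    }


# Hypothetical routes; the summaries are the part an assistant could draft.
SAMPLE_ROUTES = [
    {"path": "/placements", "method": "GET", "summary": "List placements"},
    {"path": "/placements", "method": "POST", "summary": "Create a placement"},
]

if __name__ == "__main__":
    spec = build_openapi("Placement service", "1.0.0", SAMPLE_ROUTES)
    print(json.dumps(spec, indent=2))
```

Keeping the skeleton rule-based and limiting the model to descriptive text is one way to keep generated specs reviewable; other splits are equally possible.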

Week 5–6: Dev loop integration

By week five, we integrated AI into pull request workflows and ticketing systems. This enabled summarization of code changes, suggested test ideas, and laid the groundwork for better planning and QA handoff — all without leaving the tools the team was already using.
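
As an illustration of the pull request flow, the sketch below turns a unified git diff into a summarization prompt. It is an assumption-heavy example: the function names, the prompt wording, and the `max_chars` budget are hypothetical, and the call to the model itself is deliberately left out.

```python
def changed_files(diff_text: str) -> list[str]:
    """Return the paths of files touched in a unified git diff."""
    prefix = "+++ b/"
    return [
        line[len(prefix):]
        for line in diff_text.splitlines()
        if line.startswith(prefix)
    ]


def build_pr_summary_prompt(diff_text: str, max_chars: int = 6000) -> str:
    """Build a prompt asking for a tester-oriented summary and test ideas.

    The diff is cut to max_chars (a hypothetical budget) so very large
    pull requests do not exceed the model's context window.
    """
    files = changed_files(diff_text)
    body = diff_text[:max_chars]
    note = "\n[diff truncated]" if len(diff_text) > max_chars else ""
    return (
        "Summarize this pull request for a QA engineer and suggest tests.\n"
        f"Changed files: {', '.join(files) or 'unknown'}\n\n"
        f"{body}{note}"
    )
```

The returned string would then be sent to whichever model the team uses and the response posted back to the pull request or ticket.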

Week 7–8: UI and job matching

We introduced AI-based vision tools to assist in front-end transformation, converting Figma designs into Vue templates. We also began training the matching assistant based on user feedback to suggest relevant educational or career opportunities.

Week 9–10: Planning and QA tools

We enhanced planning accuracy by introducing AI support for business analysts and product managers. Simultaneously, our QA assistant began helping testers generate test cases and identify potential edge scenarios earlier in the cycle.

Week 11–12: Rollout and adoption

In the final phase, we hosted internal AI demo sessions and collected team feedback. Based on usage data and hands-on testing, we fine-tuned the integration and mapped out the next iterations for continued scaling.

This roadmap wasn’t just a plan on paper. It became a blueprint for transforming routine delivery work through AI app development, and in the next section, we’ll break down the specific impact across onboarding, QA, testing, design, and more.

From blueprint to results: embedding AI into delivery

With the foundation in place, we began integrating AI into critical delivery workflows, not as isolated tools, but as embedded enhancements across the product lifecycle. Drawing on our experience providing AI development services, each integration targeted a specific pain point with measurable results. In the following section, we break down exactly how we applied AI to accelerate delivery, reduce friction, and support consistent growth.

Streamlining knowledge sharing, requirements gathering, and onboarding

The challenge

When e-learning platforms grow over time, knowledge sharing often breaks down. Documentation falls out of sync, requirements get buried in meeting recordings, and onboarding slows to a crawl. In this case, we used AI application development to address those exact challenges.

What we did

To solve this, we introduced a unified AI-powered strategy combining documentation automation and intelligent requirements processing:

- Used AI transcription tools to filter noisy meeting input and extract clear action points

- Set up an OpenAI Assistant trained on internal service code and READMEs for contextual documentation generation

- Built a categorized prompt library for legacy explanation, refactoring, test generation, and onboarding

- Ran weekly AI workshops to improve internal adoption and prompt engineering skills
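
A categorized prompt library like the one in the list above can be as simple as a dictionary of templates. The sketch below uses the four categories named there; the template wording, the `render_prompt` helper, and the placeholder names are hypothetical, since the real templates are not published here.

```python
from string import Template

# Category names follow the list above; the wording is illustrative only.
PROMPT_LIBRARY = {
    "legacy_explanation": Template(
        "Explain what this $language code does and list hidden business rules:\n\n$code"
    ),
    "refactoring": Template(
        "Propose a behavior-preserving refactoring of this $language code:\n\n$code"
    ),
    "test_generation": Template(
        "Suggest unit tests for this $language code, edge cases first:\n\n$code"
    ),
    "onboarding": Template(
        "Write a short onboarding note for a new engineer about this $language code:\n\n$code"
    ),
}


def render_prompt(category: str, **fields: str) -> str:
    """Fill a named template; fail loudly on unknown categories."""
    try:
        template = PROMPT_LIBRARY[category]
    except KeyError:
        raise ValueError(f"Unknown prompt category: {category!r}") from None
    # substitute() raises KeyError if a placeholder value is missing.
    return template.substitute(**fields)
```

For example, `render_prompt("test_generation", language="Java", code=source)` returns a ready-to-send prompt, and keeping templates in one place makes them easy to review in workshops.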

Outcomes

- Reduced onboarding time for new engineers by up to 50%

- Made undocumented logic searchable and reusable

- Cut the time spent in clarification meetings across teams

- Enabled consistent, AI-assisted documentation without added manual effort

Automating testing and ticket flow for faster, safer releases

The challenge

For large-scale learning platforms, testing delays and vague task descriptions often snowball into unstable releases. As part of our AI product development approach, we focused on solving exactly that. Our client’s QA process was burdened by manual test creation, inconsistent ticket quality, and poor visibility into code changes. Test coverage suffered, release cycles slowed, and the risk of defects reaching production increased, all of which undermined delivery speed and platform reliability.

What we did

We automated the full QA and ticketing cycle with integrated AI tools, improving both speed and accuracy. Our team implemented:

- PR-based generation of unit and integration test suggestions based on code diffs and past failures

- A “ticket completer” plugin that enriches stories with acceptance criteria, edge cases, and behavior prompts

- AI-generated summaries of code changes for easier tester handoff

- Prompt library for smoke, regression, and sanity testing

- Auto-generated test data and scripts for CI/CD-based test execution

- Test report analysis support using structured prompts
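
To show the idea behind a "ticket completer", here is a small Python sketch that checks a ticket for missing sections and builds an enrichment prompt only when something is absent. The section names in `REQUIRED_SECTIONS`, the helper names, and the prompt wording are hypothetical; the actual plugin is not described in this much detail.

```python
from typing import Optional

# Hypothetical list of sections every story is expected to contain.
REQUIRED_SECTIONS = ("Acceptance criteria", "Edge cases")


def missing_sections(ticket_body: str) -> list[str]:
    """Return required section names that do not appear in the ticket."""
    lowered = ticket_body.lower()
    return [name for name in REQUIRED_SECTIONS if name.lower() not in lowered]


def build_completion_prompt(title: str, body: str) -> Optional[str]:
    """Return an enrichment prompt, or None if the ticket is complete."""
    gaps = missing_sections(body)
    if not gaps:
        return None
    return (
        f"Ticket: {title}\n\n{body}\n\n"
        "Draft the following missing sections, marking every assumption "
        f"for human review: {', '.join(gaps)}."
    )
```

Skipping complete tickets keeps model calls limited to stories that actually need enrichment, and asking for marked assumptions leaves the final decision with the analyst.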

Outcomes

- Reduced manual QA effort while increasing test coverage

- Faster tester handoffs and clearer understanding of what changed

- Fewer bugs in production thanks to earlier detection and structured validation

- Stronger support for CI/CD and scalable QA in fast-moving delivery cycles

Reducing bugs and technical debt through modular code and AI-assisted reviews

The challenge

In edtech development, codebases often evolve rapidly to support new features, roles, and learning flows, but that speed comes at a cost. Over time, large services, duplicated logic, and inconsistent structure begin to pile up, making future development harder to manage. In our client’s case, legacy modules mixed unrelated business logic, which slowed delivery, increased onboarding friction, and raised the risk of introducing bugs during releases. Refactoring was risky and time-consuming, and tech debt was already impacting the team’s ability to scale cleanly.

What we did

To increase system stability and prevent bugs before they happen, we implemented a modularization and code quality strategy powered by AI. Key actions included:

- Detecting legacy code patterns (e.g., overused services, large classes) with Semgrep and AI prompts

- Adding a CI-integrated code reviewer to flag risk zones, tight coupling, and refactoring suggestions

- Encouraging modular commits and prompting developers to follow clean code principles

- Running static code analysis with AI-generated recommendations pushed back into documentation and tooling

- Differentiating logic for MVPs vs. mature features by creating separate prompt sets and style rules for each
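
The "large classes" check mentioned above can be illustrated with a simple structural heuristic. Note that this sketch does not use Semgrep: it swaps in Python's standard `ast` module and analyzes Python source, purely to show the kind of signal that could be handed to a reviewer prompt. The `find_large_classes` name and the `max_methods` threshold are hypothetical.

```python
import ast


def find_large_classes(source: str, max_methods: int = 10) -> list[tuple[str, int]]:
    """Return (class name, method count) for classes above the threshold."""
    tree = ast.parse(source)
    findings = []
    for node in ast.walk(tree):
        if isinstance(node, ast.ClassDef):
            # Count only methods defined directly in the class body.
            methods = [
                item
                for item in node.body
                if isinstance(item, (ast.FunctionDef, ast.AsyncFunctionDef))
            ]
            if len(methods) > max_methods:
                findings.append((node.name, len(methods)))
    return findings
```

Findings like these could be attached to a CI comment or passed to an AI reviewer as context, so its suggestions focus on the riskiest parts of the codebase first.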

Outcomes

- Lowered regression risk through early code structure analysis

- Reduced manual rework by guiding devs with AI-backed feedback

- Supported transition from monolith to a more modular architecture

- Created a more scalable and maintainable codebase without rewriting it from scratch

Accelerating front-end delivery with AI-assisted design handoff

The challenge

Educational platforms often undergo constant UI evolution — adding new modules, adapting layouts for different learner types, or improving accessibility. In edtech app development, these changes demand tight coordination between design, front-end, and QA. Each handoff can introduce friction or delay. In our client’s case, the Figma-to-code flow lacked automation, making each redesign effort manual, time-consuming, and difficult to scale without increasing resource load or breaking visual consistency.

What we did

We used AI to automate key parts of the redesign-to-delivery pipeline:

- Exported Figma screens as images or SVGs, then processed them with ChatGPT Vision to generate Vue.js templates

- Enabled AI to scaffold backend API stubs (e.g., Java Spring) for dynamic data population

- Streamlined the QA handoff by exporting complete UI components and assigning them directly for review and merge

- Used Tailwind-aware prompting to ensure accurate style reproduction and fewer manual fixes
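
As a rough sketch of the Figma-to-Vue step, the code below packages an exported screen and a Tailwind-aware instruction into a chat message with an image part. The message shape follows the format OpenAI documents for image inputs in Chat Completions, which should be verified against the current API reference; the function name and the instruction text are hypothetical, and the request itself is not sent.

```python
import base64

# Hypothetical instruction text illustrating "Tailwind-aware" prompting.
VUE_INSTRUCTIONS = (
    "Generate a Vue.js single-file component template for this screen. "
    "Use Tailwind CSS utility classes for all styling."
)


def build_vision_messages(image_bytes: bytes, instructions: str = VUE_INSTRUCTIONS) -> list[dict]:
    """Build chat messages containing a PNG screenshot as a data URL."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": instructions},
                {
                    "type": "image_url",
                    "image_url": {"url": f"data:image/png;base64,{encoded}"},
                },
            ],
        }
    ]
```

The resulting list would be passed as the `messages` argument of a chat completion request to a vision-capable model, and the generated template reviewed by a front-end developer before merge.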

Outcomes

- Reduced front-end dev time by automating Figma to Vue transformations

- Accelerated feature delivery without waiting on design clarification

- Reduced design inconsistencies by aligning style and component logic automatically

- Enabled fast iteration without breaking the delivery rhythm

Wrapping up: how we accelerated delivery with AI integration

Twelve weeks. One platform. And dozens of behind-the-scenes improvements that added up to a faster, more reliable delivery cycle. In the context of edtech software development, these changes weren’t abstract; they were targeted and built into real workflows. Below are the highlights of what we achieved by integrating AI directly into daily delivery operations:

- Cut onboarding time by 40% through automated documentation and AI prompt libraries

- Streamlined QA cycles, reaching almost 60% test coverage via auto-generated suggestions

- Reduced regression risks with AI-assisted code analysis and modular refactoring

- Accelerated UI builds by 80% using Figma-to-Vue transformation, with under 20% manual fixes

- Improved planning accuracy, auto-enriching 75% of BA tasks with business logic context

- Reduced PR review time by 30%, enabling faster handoffs and smoother merges

- Enabled platform-wide consistency by separating logic for MVPs vs. mature modules

But the technical outcomes were only part of the story. As a provider of edtech app development services, we’ve seen how much strategy shapes success. In the final section, we’ll share the insights that guided this delivery and the principles any team can apply when considering how to use AI in software development.

What worked, why it mattered — and how to use it in your own roadmap

Helping one of the country’s largest learning platforms embed AI wasn’t just a delivery milestone. It clarified what real AI integration looks like in edtech software development, when it’s tied to product logic, delivery speed, and sustainable scale. Here are a few insights we gained, ones that can guide any team exploring how to use AI in software development:

Start with your system, not with a model

If it doesn’t fit your workflows, it won’t deliver value, no matter how advanced it sounds.

Look for high-impact, low-friction wins

The biggest gains often come from internal workflows such as testing, docs, and planning, not just from user-facing features.

Don’t build AI for its own sake

If the use case doesn’t address a real need for your team or users, it’s not worth the effort yet.

Expect your vendor to think like a product partner

It’s not just about implementation. It’s about prioritizing, aligning, and delivering AI that fits your roadmap.

Treat AI as a product decision, not a lab experiment

It should be measurable, iterative, and directly tied to how your team builds and improves the product.

If you’re exploring AI custom software development but not sure where to begin, or if you need a second set of eyes on how it could fit into your existing product, we’re here to help. We don’t push technology for the sake of it. We help edtech app development companies make smart, sustainable decisions rooted in product logic and long-term value. Let’s talk about where AI makes sense for you. And where it doesn’t.

FAQ

Do we need to have clean, labeled data before starting AI integration?

Not necessarily. Part of the AI pre-integration stage during the edtech software development process is assessing what data you already have and whether it’s usable. In many cases, we can start with what’s available, run small-scale tests, and identify what needs to be cleaned or enriched as we go.

Can you integrate AI without disrupting the rest of our system?

That’s the whole point of our approach when we provide AI software development services. We design AI components to be modular and isolated, meaning they can be rolled out incrementally, tested independently, and scaled as needed. This reduces risk and avoids any major impact on existing workflows or uptime.
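
One common way to roll out an isolated component incrementally is a deterministic percentage gate. The sketch below is a generic illustration of that pattern, not a description of this platform's implementation; the `in_rollout` name, the feature key, and the hashing scheme are assumptions.

```python
import hashlib


def in_rollout(user_id: str, feature: str, percent: int) -> bool:
    """Return True if this user falls inside the rollout percentage.

    The same user and feature always map to the same bucket, so a user
    does not flip between old and new behavior across requests.
    """
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 100  # stable bucket in the range 0..99
    return bucket < percent
```

Raising `percent` step by step widens exposure without redeploying, and setting it to 0 switches the AI component off while the rest of the system keeps running.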

How long does this pre-integration analysis take?

Typically, 2 to 4 weeks, depending on the complexity of your product and how much access we have to the system and team. When we provide generative AI development services, we move fast but methodically, because the goal isn’t to delay action but to ensure the right action is taken from the start.