Before You Build: How to Plan Meaningful AI Integration in eLearning Products


Everyone’s talking about AI. Few know where to begin, especially when it comes to e-learning products that are already running at scale.

If that sounds familiar, you’re not alone. We’ve had these conversations with education providers who are growing fast, updating legacy systems, or simply trying to understand where AI fits into their learning ecosystem. The questions are often the same: Where do we start? Will it deliver value for students and educators? Can our current system even support it? And if we bring in a vendor, will they truly understand our product, or just try to push forward generic AI tools?

As a provider of education software development services, we’ve noticed that most education companies don’t struggle with AI implementation itself. They’re stuck before that, somewhere between curiosity and uncertainty.

To help organizations in the educational sector navigate that stage, we’ve developed an AI integration framework rooted in product thinking, system analysis, and delivery constraints. And this article shows how that thinking applies before a single model is trained or a prompt is written, when decisions still shape outcomes. From practical use cases to hidden fears and a step-by-step planning approach, we’ll walk through what meaningful AI integration in e-learning actually looks like — before you build.

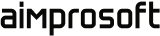

Where AI helps in education: 5 use cases that deliver ROI

The promise of AI in education is everywhere, from chatbots to personalized content to predictive analytics. But too often, these ideas stay abstract or disconnected from actual product needs. That’s why it’s important to focus not just on what’s possible, but on what’s practical.

Below are some of the most effective AI use cases in education, based on what we’ve seen in the field. Each one reflects a clear opportunity to improve the services you provide, streamline internal operations, and increase impact, not in theory, but inside the real systems educators and learners rely on every day. Whether you’re planning your first AI integration or reevaluating existing features, these are the areas worth your attention.

AI use cases in edtech

Personalized content and learning paths

Static learning journeys don’t work for everyone. Students progress at different paces, have different learning preferences, and need support tailored to their goals. AI enables platforms to dynamically adjust course sequences, recommend next steps, and surface resources based on engagement, performance, and preferences.

This level of personalization helps reduce learner dropout, improve outcomes, and keep users engaged without relying on hardcoded logic or static rule sets, which is especially valuable in asynchronous or self-paced platforms.
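To make the idea of adjusting course sequences concrete, here is a minimal Python sketch of the final step only: turning per-learner mastery estimates into suggested next modules. The `Module` class, the `recommend_next` function, the 0.7 threshold, and the sample course are all hypothetical; in a real platform the mastery scores would come from a model trained on engagement and performance data rather than being passed in by hand.

```python
from dataclasses import dataclass


@dataclass
class Module:
    id: str
    prerequisites: tuple = ()  # ids of modules that should be mastered first


def recommend_next(modules, mastery, threshold=0.7):
    """Return ids of modules the learner should see next.

    mastery maps module id -> estimated score in [0, 1]. This is an assumed
    signal, e.g. produced upstream from quiz results and engagement.
    """
    review, ready = [], []
    for m in modules:
        score = mastery.get(m.id)
        if score is not None and score < threshold:
            review.append((score, m.id))  # attempted but not yet mastered
        elif score is None and all(
            mastery.get(p, 0.0) >= threshold for p in m.prerequisites
        ):
            ready.append(m.id)  # not started, all prerequisites mastered
    # Weakest attempted modules first, then newly unlocked ones.
    return [mid for _, mid in sorted(review)] + ready


course = [
    Module("fractions"),
    Module("decimals", ("fractions",)),
    Module("percentages", ("fractions", "decimals")),
]
print(recommend_next(course, {"fractions": 0.9, "decimals": 0.5}))
```

In this example the learner is sent back to `decimals` before `percentages` unlocks. The selection logic is deliberately simple; the adaptive part lies in how the mastery estimates are produced and updated.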

Automated feedback and grading

Manual grading eats up hours, especially when assignments are open-ended or require detailed feedback. With generative AI, platforms can support teachers by suggesting formative feedback, auto-evaluating quizzes, and highlighting areas where students struggle most.

Used responsibly, this doesn’t replace educators — it helps them focus on what matters most: guidance, support, and course improvement. It’s one of the most practical generative AI use cases in education that balances efficiency with educational value.
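As a rough illustration of "highlighting areas where students struggle most", the sketch below aggregates auto-graded responses by topic and flags the weakest ones for a teacher to review. The function name `struggling_topics`, the thresholds, and the sample data are assumptions made for this example, not part of any specific grading product.

```python
from collections import defaultdict


def struggling_topics(responses, min_attempts=5, max_accuracy=0.6):
    """Return (topic, accuracy) pairs below max_accuracy, weakest first.

    responses: iterable of (topic, is_correct) tuples from auto-graded items.
    Topics with fewer than min_attempts responses are ignored as too noisy.
    """
    totals = defaultdict(lambda: [0, 0])  # topic -> [correct, attempts]
    for topic, is_correct in responses:
        totals[topic][0] += int(is_correct)
        totals[topic][1] += 1
    flagged = [
        (topic, correct / attempts)
        for topic, (correct, attempts) in totals.items()
        if attempts >= min_attempts and correct / attempts < max_accuracy
    ]
    return sorted(flagged, key=lambda item: item[1])


# Hypothetical graded responses for one class.
sample = (
    [("loops", False)] * 4
    + [("loops", True)]
    + [("variables", True)] * 5
    + [("recursion", False)] * 2
)
print(struggling_topics(sample))
```

Here only `loops` is flagged: `variables` is answered correctly, and `recursion` has too few attempts to judge. The teacher still decides what to do with the signal.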

Improved accessibility with adaptive interfaces and content

Not every student interacts with content the same way. AI can help dynamically adjust language complexity, reformat materials for screen readers, or generate visual/audio alternatives on demand. This supports diverse learning needs and improves accessibility for students with disabilities or different language backgrounds.

It also helps education platforms meet compliance goals and improve user satisfaction without heavy manual effort, turning accessibility from a blocker into a built-in advantage.

Enhanced search and discovery with semantic understanding

Most educational platforms rely on keyword-based search. But students often search like they think, with incomplete phrases, vague questions, or context-driven queries. AI-powered semantic search helps surface relevant materials faster by understanding intent, not just keywords.

This boosts engagement, reduces time wasted on navigation, and helps students and instructors find exactly what they need, even in large, complex course libraries.
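The core of semantic search can be sketched in a few lines: represent the query and each document as vectors (embeddings) and rank documents by cosine similarity. In the sketch below, the three-dimensional vectors are made-up stand-ins for real embeddings, which would normally come from an embedding model and be stored in a vector index; the `search` function and document titles are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(query_vec, index, top_k=3):
    """Rank documents by cosine similarity to the query embedding."""
    scored = [(cosine(query_vec, vec), title) for title, vec in index.items()]
    scored.sort(reverse=True)
    return [title for _, title in scored[:top_k]]


# Toy vectors standing in for real embeddings (values are invented).
index = {
    "Intro to photosynthesis": [0.9, 0.1, 0.0],
    "Cell respiration basics": [0.6, 0.4, 0.1],
    "French verb conjugation": [0.0, 0.1, 0.9],
}
query = [0.8, 0.2, 0.0]  # e.g. the embedding of "how do plants make food"
print(search(query, index, top_k=2))
```

The query shares no keywords with the top result's title, yet it ranks first because the vectors point in a similar direction. That is the difference from keyword matching.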

Streamlined internal development and delivery workflows

Behind every student-facing feature, your team is constantly managing tickets, deployments, QA, and technical debt. AI can streamline internal processes like test generation, documentation drafting, planning support, and even code review assistance.

By applying AI to the delivery layer (not just the product interface), you can shorten release cycles, reduce bugs, and onboard new team members faster. This is one of the most overlooked AI use cases in education, but it’s often where the biggest delivery gains come from.

Want to see what this looks like in practice? We helped one of the largest eLearning platforms integrate AI across their development lifecycle, from documentation to testing and delivery acceleration. Read the full case to see how we made it happen.

The benefits are clear. But knowing where AI fits isn’t always the hard part. It’s figuring out how to start. In the next section, we’ll look at the fears and doubts that may slow you down when planning AI integration and show you how to move past them with confidence.

AI sounds like the next step, but where do you begin?

We get it. AI is no longer a distant concept. It’s in your competitors’ roadmaps. It’s in market trend reports. It’s in every second keynote on the future of education. And it’s probably already come up in your internal discussions, whether you’re building LMS platforms, certification engines, or campus-wide career planning tools.

Wanting to explore AI isn’t a bad instinct. It’s the right one. But knowing it’s time to act doesn’t mean it’s easy to start. Especially when you’re working with a long-running education solution, have limited in-house AI expertise, or aren’t sure what kind of outcomes matter for your users.

Over the years, we’ve helped e-learning companies of all sizes, from universities to private education providers, unpack the same core doubts. They’re valid. And they’re solvable once you name them.

5 biggest AI integration fears business owners have

“We want to stay competitive, but we don’t want to break what already works”

In the education sector, stability isn’t just a technical metric. It’s a reputation issue. If your platform supports universities, training providers, or certification programs, even minor disruptions can have ripple effects. Missed assignments. Confused learners. Frustrated administrators.

When you’ve spent years building a dependable system, one that aligns with academic calendars, funding cycles, and strict compliance rules, introducing AI feels like threading a needle. One poorly scoped feature can trigger unexpected behavior, slow performance, or damage the user experience for thousands of students and faculty.

This fear is valid, but the solution isn’t to avoid AI; it’s to start with analysis, not assumptions. Mapping where AI can safely enhance what’s already working (without replacing it) is what prevents disruption and preserves trust while moving your product forward.

“We don’t have in-house AI experts. Does that mean we’re not ready?”

Most education companies don’t. You have curriculum designers, product owners, full-stack developers, and maybe a couple of data-savvy engineers, but no dedicated AI team. And with the pace of AI innovation, the idea of outsourcing AI and education software development services, or upskilling fast enough, can feel out of reach.

But a lack of in-house AI expertise doesn’t mean you’re not ready. It means you need a partner who provides enterprise AI development services and can do more than execute. You need someone who can translate product logic into AI logic. Not someone who throws models at your backlog, but one who understands how AI can support educational outcomes, reduce delivery friction, and respect the way your platform already works.

That kind of collaboration bridges the gap. It turns “we’re curious, but unsure” into a structured roadmap, not an experiment. And more importantly, it ensures that AI doesn’t become another siloed feature. It becomes part of how you evolve your platform.

Not sure if your product is even ready for AI? Read our expert-backed guide to assessing AI integration readiness. It answers the most common questions and helps you evaluate where you stand.

“We’re not sure what AI could even do in our case”

Edtech platforms solve highly specific problems for students, educators, administrators, and institutions. That makes them powerful, but also harder to map against generic AI playbooks. What exactly should you automate, predict, or optimize without risking relevance or functionality?

It’s a common dilemma. We’ve seen platforms with rich data, multiple user roles, and mature workflows, but there is still no clear entry point for AI. That’s not because the opportunities aren’t there. It’s because they’re buried in day-to-day operations: fragmented documentation, inconsistent planning, testing blind spots, or even duplicated support effort.

Without a structured analysis, these opportunities stay invisible, or worse, get replaced by “shiny” AI features that sound impressive but solve nothing. The value comes from finding the right fit, not forcing AI into places it doesn’t belong.

“We’re worried a vendor will push a one-size-fits-all solution”

You’ve likely seen it before. A vendor drops in with prebuilt AI models or fancy demos: tools that look impressive but don’t match your student data, delivery model, or educational workflows. Suddenly, you’re being sold a chatbot no one asked for, or a recommendation engine that ignores how your users actually move through the platform.

In education products, these mismatches aren’t just frustrating — they’re costly. They create integration headaches, add tech debt, and leave internal teams maintaining AI software development tools they never scoped or wanted.

That’s why a real AI partner doesn’t start with tools. They start with context: your product’s architecture, your pain points, and your roadmap. AI should serve the system, not the other way around.

“We can’t afford a long, expensive AI experiment”

Educational platforms already operate under pressure: strict timelines, user expectations, and tight budgets. Committing to an open-ended AI initiative without knowing the outcome? It’s a risk few product owners or delivery teams can justify. Especially when the promise of ROI is vague, and the process feels like a black box.

This fear isn’t just about cost. It’s about control. You don’t want guesswork. You want to know where AI fits, what it solves, and when you’ll see results.

That’s why AI should be approached through modular steps. We break AI exploration into clear, low-risk phases and apply practical AI tools for software development to problems you have today. Each step is tied to a specific challenge that you face, with measurable outcomes and minimal disruption to your team. No overcommitment. No wasted sprints. Just practical, incremental progress.

Overall, these concerns aren’t red flags. They’re proof you’re asking the right questions. In fact, the best AI integrations don’t start with software. They start with context. That’s why preparing for AI in software development should cover not just technical skills, but also an understanding of how your product works, where it’s headed, and what’s worth automating.

That’s why our approach doesn’t begin with models or tools. It begins with the system — your roles, your workflows, your goals. Before writing a single line of code, the key questions should be asked: How can AI support what already works and push what’s possible, without adding noise? Let’s look at what that process involves.

What makes or breaks AI in e-learning products

A promising use case. A few weeks of exploration. Maybe even a working demo. And then… nothing.

We’ve seen this pattern before across industries, but especially in edtech. AI initiatives begin with excitement but stall before they scale. Not because the technology isn’t viable, but because the approach wasn’t grounded in the realities of product, process, and delivery.

So why do smart teams with great intentions end up with AI features no one uses or roadmaps that never leave the backlog? The answer usually comes down to the 6 risks we explore below.

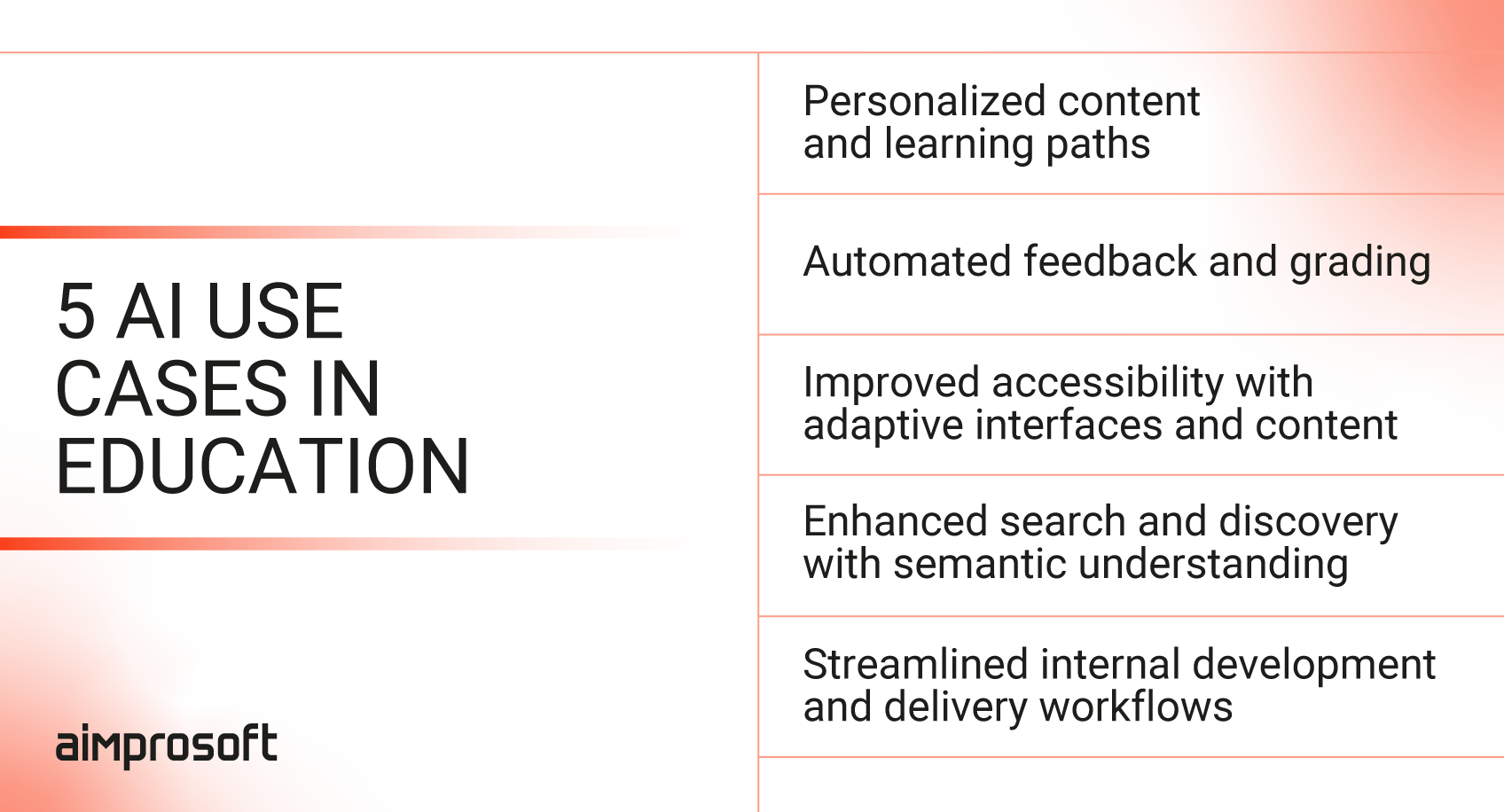

AI integration risks to avoid

Treating AI like a feature, not a system-level decision

AI in education software development isn’t just a new tab on the dashboard. It touches how data is processed, how logic flows, and how teams work behind the scenes. When companies treat it as a standalone feature (a chatbot here, a recommender there), they miss the deeper system alignment.

That’s when friction happens: workflows break, data isn’t ready, and AI outputs feel disconnected. Instead of enhancing the product, AI becomes a side project that quietly dies in staging.

How to avoid it: Approach AI in education software development as part of the system, not a layer on top. That means analyzing architecture, delivery workflows, and internal bottlenecks before building anything. The goal is to integrate AI where it makes sense, not just where it’s visible.

Starting with models, not use cases

Jumping straight to tools, e.g., ChatGPT, vector databases, RAG systems, might feel like momentum. But without a grounded use case, technical excitement quickly becomes scope creep.

We’ve seen teams spend months on integrations only to realize the AI wasn’t solving a real problem. Or worse, it created new ones, such as slowing performance, confusing users, or duplicating manual work in new forms.

How to avoid it: Start with pain points, not models. For instance, using our AI integration framework, we map product needs to enable practical enhancements, prioritizing clarity, relevance, and delivery fit over technical novelty.

Skipping architecture and delivery constraints

Even the best AI idea can fail if it doesn’t fit how your team develops the product. Does your CI/CD pipeline support rapid testing? Can your data be accessed securely and consistently? Will the AI service scale with usage spikes?

Ignoring these questions leads to fragile rollouts, ones that aren’t reproducible, break under pressure, or require manual patching every time something changes.

How to avoid it: It’s crucial to always assess delivery pipelines, observability tools, and modularity before proposing any AI enhancement. If it can’t be tested, monitored, or maintained, it’s not worth building.

Focusing only on user-facing features

It’s tempting to focus AI efforts on the visible, user-facing layer, such as smarter recommendations, automated tutors, or personalization. These features sound impressive and can demo well, but they’re often the hardest to maintain and scale.

Meanwhile, internal workflows, like planning, QA, documentation, or release coordination, are full of high-impact, low-risk opportunities. But they get overlooked because they aren’t flashy.

How to avoid it: It’s important to surface invisible wins. Using AI to streamline internal delivery often yields faster ROI, stronger adoption, and cleaner roadmaps, all without changing the user experience upfront.

Trying to “do AI” all at once

AI integration in education software isn’t a one-time initiative. Trying to launch multiple assistants, models, or tools in parallel is a fast way to overload your team. Integrations stall, goals blur, and progress becomes hard to measure.

This approach turns AI into a burden rather than a benefit, especially in teams that are already managing legacy code, complex roles, and tight delivery timelines.

How to avoid it: We recommend sequencing integrations around your roadmap. One use case at a time. Each should be scoped to fit within the delivery cycle and validated before expanding. That way, AI adoption builds momentum instead of chaos.

Underestimating team adoption

Even a perfectly scoped AI integration can underdeliver if no one uses it. Engineers default to old habits. PMs skip prompts. QA doesn’t trust auto-generated tests. Without buy-in, AI tools become shelfware.

How to avoid it: Focus on proper onboarding, not just building. That means running internal demos, surfacing usage patterns, and adjusting based on team feedback. AI tools need to fit how people already work, not force them to change overnight.

These risks are the reasons why many AI initiatives stall before they deliver value. But when addressed early, they become manageable. In the next section, we’ll walk through what a structured AI software development process looks like. One that fits into the reality of your product, your team, and your roadmap.

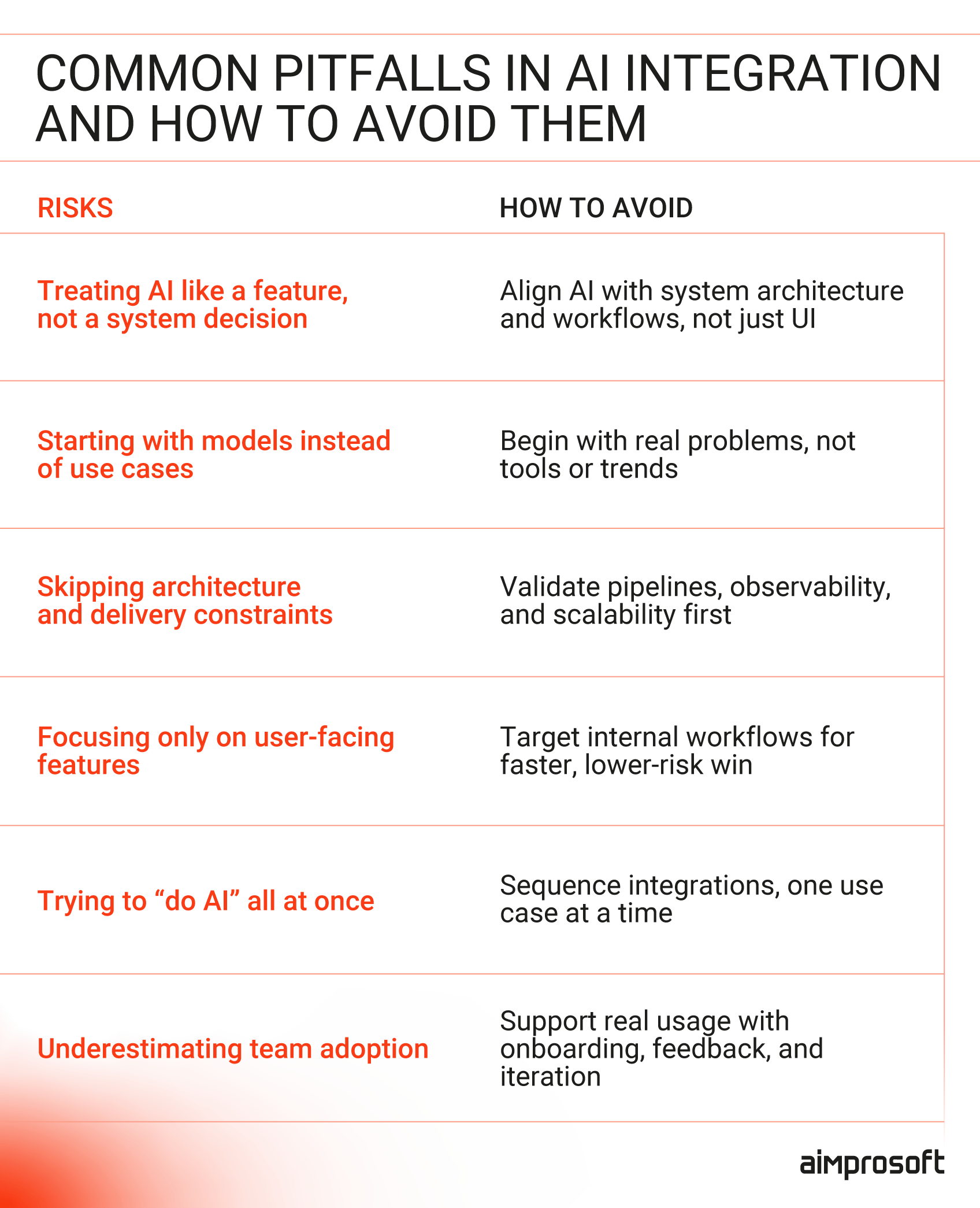

What the AI integration process should look like: Aimprosoft step-by-step flow

AI-driven software development shouldn’t begin with choosing models. It should begin with asking the right questions about your system, your team, and your goals. Over the years, we’ve seen how rushed pilots or disconnected tools can drain resources without delivering value. That’s why we approach AI in education as a product-level decision.

Below is the framework we use with clients in the educational sector: a step-by-step process for AI in application development, designed to identify the right opportunities, validate readiness, and ensure every integration supports both delivery and long-term growth.

Pre-integration of AI & AI readiness check

1. Platform audit and opportunity mapping

We start with a system-level review of your e-learning product: how data flows between components, where logic resides, and how different user roles interact with the platform. This isn’t just about spotting opportunities for generative AI in software development. It’s about understanding how the system is built and where AI can enhance delivery without introducing new challenges. We look at modularity, handoffs, and repeatable patterns that may benefit from automation, prediction, or optimization.

2. AI readiness check: architecture, data, and scalability

Not every platform is immediately ready for predictive or generative AI in software development, and that’s okay. Our next step is to evaluate the technical foundation, including infrastructure, data structure, and orchestration layers, to see what’s in place and what may need adjustment. This helps ensure that any AI solution we recommend is actually deployable in a stable, scalable, and secure way.

3. Use case proposal mapped to delivery workflows

Once the system and readiness are understood, we shift to use cases. But not theoretical ones; we focus on value-generating scenarios that fit directly into your current workflows. Whether it’s accelerating QA, improving documentation, or supporting personalization, each proposed use case includes clear inputs, outputs, and success metrics aligned to your product goals.
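One way to keep a proposed use case honest about its "clear inputs, outputs, and success metrics" is to write it down as a small structured record. The Python sketch below shows one possible shape; the class names, fields, and all numbers in the example are hypothetical and only illustrate the idea, not a format we prescribe.

```python
from dataclasses import dataclass, field


@dataclass
class SuccessMetric:
    name: str
    baseline: float
    target: float
    lower_is_better: bool = False

    def met(self, observed: float) -> bool:
        """Check an observed value against the target."""
        if self.lower_is_better:
            return observed <= self.target
        return observed >= self.target


@dataclass
class UseCaseProposal:
    title: str
    workflow: str  # the existing delivery workflow this plugs into
    inputs: list
    outputs: list
    metrics: list = field(default_factory=list)

    def validated(self, observed: dict) -> bool:
        """True only if every metric has an observed value meeting its target."""
        return bool(self.metrics) and all(
            m.name in observed and m.met(observed[m.name]) for m in self.metrics
        )


# Illustrative values only.
proposal = UseCaseProposal(
    title="AI-suggested regression tests",
    workflow="QA",
    inputs=["merged pull requests", "existing test suite"],
    outputs=["draft test cases for review"],
    metrics=[
        SuccessMetric("hours_per_release_on_tests", baseline=20, target=12,
                      lower_is_better=True),
        SuccessMetric("suggested_tests_accepted_ratio", baseline=0.0, target=0.5),
    ],
)
print(proposal.validated({"hours_per_release_on_tests": 11,
                          "suggested_tests_accepted_ratio": 0.6}))
```

A proposal with no metrics never counts as validated here, which mirrors the point above: a use case without a measurable outcome is still a theoretical one.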

4. AI-powered enhancements to the SDLC

Finally, we define how AI will integrate into your delivery lifecycle, not as a separate process, but as a natural layer on top of your existing stack. That means choosing the right AI tools for software development and embedding prompting, summarization, test suggestion, or code generation directly into your CI/CD, planning, or QA systems. This reduces learning curves, avoids disruption, and allows the value of AI to scale with your team.

Wrapping up: where smart AI planning starts

We’ve covered a lot: the impact of AI on software development, use cases, fears, risks, and the integration flow we use to help edtech platforms make AI work without breaking what already works. But if there’s one takeaway, it’s this: AI-based software development isn’t a feature decision. It’s a product decision. One that affects delivery, architecture, and long-term growth. The companies that succeed aren’t the ones that move fast; they’re the ones that move with focus. Here’s what that focus helps you avoid:

- Chasing flashy features instead of solving real problems

- Burning time on tools without a clear use case

- Building things your platform isn’t ready to support

- Missing low-risk, high-impact areas like QA, planning, or documentation

- Overcomplicating integration instead of aligning it with your SDLC

At Aimprosoft, an AI and software development company, we help edtech teams move from curiosity to clarity. We guide AI integration through product logic, not buzzwords, and we know how to embed it into platforms that are already live, scaling, and under pressure to perform. Let’s talk about what that could look like for your system.

And if you’re curious how this plays out in delivery, check out our next article on practical AI integration into edtech solutions. We’ll walk you through an AI integration we completed for a large-scale educational platform and demonstrate the actual impact it had.

How do we know which AI use cases are right for our product?

Start with your platform’s bottlenecks, not with tools. The best use cases for AI in software development are tied to real delivery pain: manual test creation, onboarding delays, unclear planning, or repeated content workflows. A proper system audit should reveal where AI can save time, reduce friction, or boost quality, without adding unnecessary complexity.

Can AI still bring value if our user base is relatively small?

You don’t need millions of users to make AI-augmented software development worthwhile. Many of the highest-impact use cases are internal: documentation automation, planning support, and QA workflows. These can streamline delivery for any size of team and often pay off faster than user-facing features.

What’s the risk of starting without a long-term AI strategy?

The biggest risk is building something no one maintains. AI-augmented development initiatives often fail when they’re treated as one-off experiments. If you want AI to stick, you need a delivery-aware plan, one that aligns with your roadmap, your infrastructure, and your team’s capacity. We recommend starting with a scoped audit and mapping value before committing to any build.