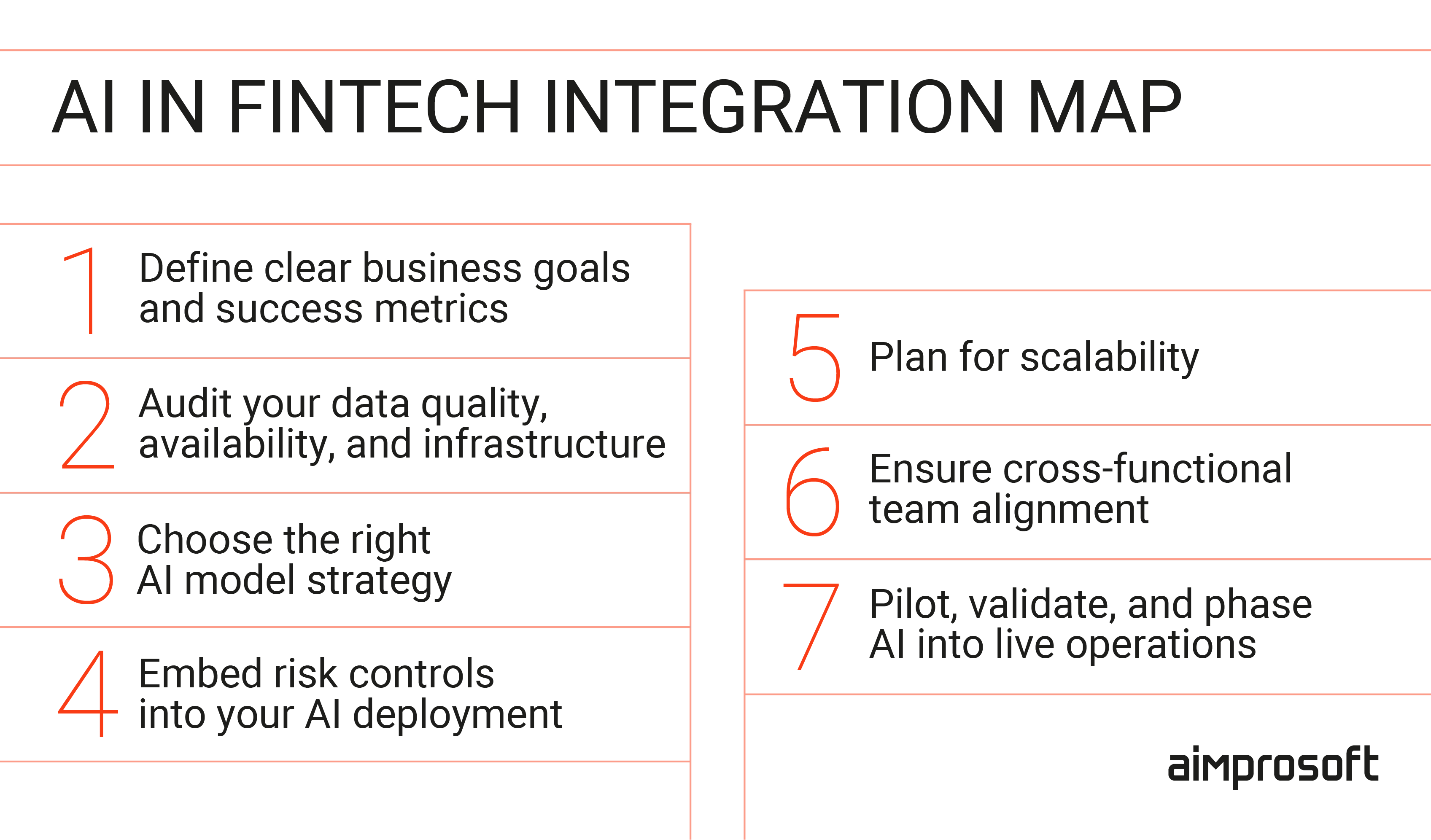

Fintech AI Integration: 7 Simple Steps to Succeed


Artificial intelligence in fintech is moving faster than most companies can govern it. Early wins such as smarter fraud detection, faster underwriting, and leaner customer service are easy to celebrate. But scaling those successes across live operations, while keeping compliance, security, and user trust intact, is where you may stumble. Small gaps in readiness quickly become systemic problems once AI shifts from pilot project to production infrastructure.

If you’re serious about integrating AI into your fintech business, not just experimenting with it, you need more than a technical blueprint. You need an AI framework that connects technology to business outcomes, embeds risk management from day one, and aligns engineering, product, compliance, and leadership around measurable goals. Whether you’re building in-house or exploring external AI integration services, this article breaks down the critical steps you must take to implement AI successfully.

You’ll learn how to integrate AI into your fintech business securely and strategically, from setting business-driven goals to embedding risk controls, designing scalable architecture, and aligning your teams for sustainable growth. No hype, no shortcuts, just the structure required to make AI a real asset inside your company.

Why successful AI integration in fintech requires more than just technical skills

It’s easy to assume that if your engineers can build a model, your company is ready for AI at scale. However, artificial intelligence in fintech is not just a technical challenge. It’s a business-wide shift that affects how decisions are made, risks are managed, and trust is maintained. In a space where every decision impacts real money, regulatory scrutiny, and customer confidence, technical skills alone aren’t enough.

Companies that succeed with fintech artificial intelligence integration treat it as a business transformation, not a technical add-on. Those that fail usually scale too fast without building the processes, governance, or cross-functional ownership needed to keep AI systems safe, effective, and aligned with business goals.

AI integration into fintech operations

At a minimum, effective implementation of AI in fintech requires:

- Clear linkage between AI initiatives and business outcomes. Every model must have a measurable impact tied to revenue, efficiency, customer satisfaction, or risk reduction.

- Cross-functional collaboration from day one. Your engineering, product, compliance, and leadership teams must work together, not in isolated handoffs.

- Defined ownership of AI systems post-deployment. Someone must be responsible for monitoring, retraining, validating, and escalating when outputs drift or fail.

- Built-in risk and compliance controls. Governance, explainability, and auditability must be designed into AI systems, not patched on after launch.

- Scalable infrastructure that can evolve with the business. AI shouldn’t just work for today’s use case. It must be built to grow and adapt as your company scales.

If you overlook these structural foundations, you don’t just risk technical failure. In the fast-moving world of AI and fintech, you risk scaling flawed decisions into live operations, where the consequences multiply fast. Building the right foundation starts with something deceptively simple but often neglected: defining exactly what your business expects AI to achieve and how success will be measured.

Step 1. Define clear business goals and success metrics

Every AI deployment must be accountable, not just for technical performance, but for business relevance. When defining goals for your fintech AI project, you should focus on:

- Business-driven KPIs, not just technical metrics. For example, reducing manual KYC review times by 30%, increasing fraud detection rates without raising false positives, or improving loan approval rates for qualified applicants.

- Granularity in impact measurement. Instead of setting vague objectives like “improve customer service,” define what success looks like in hard numbers, e.g., cut average case resolution time by 20% within six months.

- Alignment across teams. Risk, compliance, product, and engineering must agree on what “success” means for the AI system before models go into production.

- Built-in failure tolerance. Not every model will work perfectly on the first try. Setting acceptable performance ranges and fallback mechanisms from day one reduces operational surprises later.
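To make the idea of acceptable performance ranges concrete, here is a minimal Python sketch. It assumes each KPI has a target the teams agreed on and a floor below which a fallback (for example, manual review) takes over. The class name, field names, and numbers are hypothetical and only illustrate the shape of such a definition; the KYC target assumes a 20-minute baseline reduced by 30%.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KpiTarget:
    """A business KPI with an agreed target and the worst value still tolerated."""
    name: str
    target: float                  # value the teams agreed to aim for
    floor: float                   # worst acceptable value before fallback kicks in
    higher_is_better: bool = True

    def status(self, observed: float) -> str:
        """Return 'on_target', 'acceptable', or 'fallback' for an observed value."""
        # Flip signs for "lower is better" KPIs so one comparison covers both cases.
        sign = 1 if self.higher_is_better else -1
        if sign * observed >= sign * self.target:
            return "on_target"
        if sign * observed >= sign * self.floor:
            return "acceptable"
        return "fallback"


# Hypothetical targets; the numbers are illustrative, not benchmarks.
kyc_review_minutes = KpiTarget(
    "kyc_review_minutes", target=14.0, floor=18.0, higher_is_better=False
)
fraud_recall = KpiTarget("fraud_recall", target=0.90, floor=0.85)

print(kyc_review_minutes.status(16.5))  # between target and floor
print(fraud_recall.status(0.82))        # below the floor
```

Writing targets down in this form gives risk, compliance, product, and engineering one shared definition of “success” to sign off on before launch.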

Ultimately, the value of AI in financial software development isn’t in making predictions. It’s in making better business decisions, faster and at scale. If you can’t measure how your AI is improving core business functions, you’re not managing a technology project; you’re gambling on a black box.

Setting clear goals and KPIs is the first real checkpoint in building a stable AI foundation. But once the goals are set, you may face a harder question: is the data you have, and the infrastructure behind it, good enough to support those ambitions?

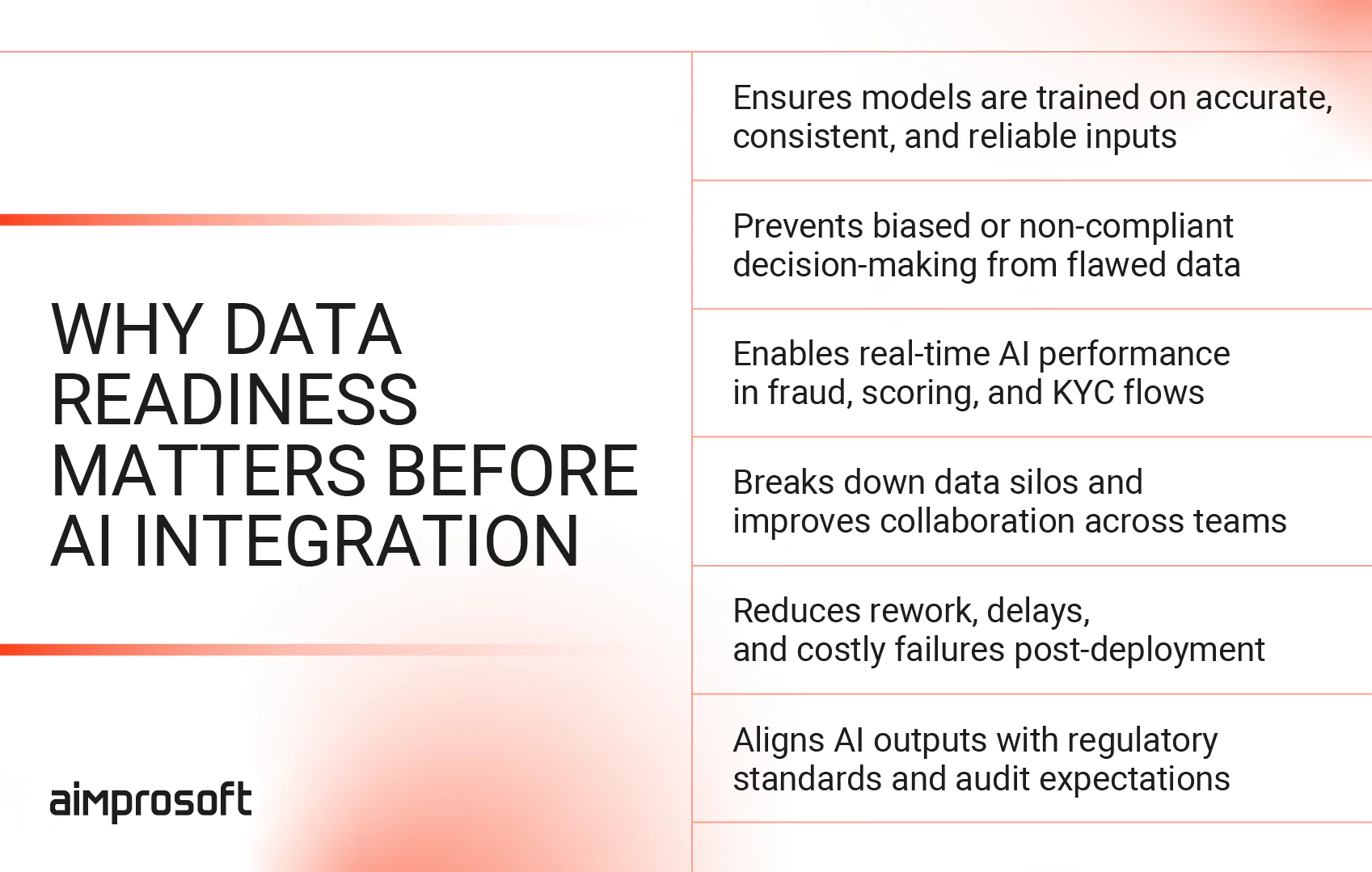

Step 2. Audit your data quality, availability, and infrastructure

Any custom fintech software is only as good as the data feeding it. AI in financial technology powers critical decisions, from fraud detection and credit scoring to KYC verification and customer engagement. In this context, bad data isn’t just an inefficiency; it’s a liability. Even the most sophisticated models will underperform, drift, or violate compliance standards if they’re built on incomplete, biased, or siloed datasets.

Before starting fintech solutions development with AI, you need to conduct a hard, honest audit of your data pipelines and supporting infrastructure. No model (no matter how powerful it is) will succeed without structured, compliant, and real-time-ready data systems behind it.

Preparing data for AI integration

Data quality: accuracy, completeness, and compliance

AI models amplify whatever data they are trained on and run on. If customer profiles are outdated, transaction logs are incomplete, or risk indicators are inconsistently captured, AI-driven decisions will be unreliable at best and discriminatory or non-compliant at worst. As a fintech company, you should focus on:

- Ensuring data accuracy and consistency across sources. Mismatched fields, outdated records, or unvalidated inputs undermine model performance immediately.

- Verifying completeness. Sparse data (e.g., missing transaction histories, partial KYC info) limits the ability to generate reliable outputs.

- Auditing for regulatory compliance. GDPR, CCPA, and PSD2 require strict controls over how personal and financial data is collected, stored, and processed. Non-compliant training or inference data exposes fintechs to heavy fines and operational shutdowns.
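A first pass at the completeness and freshness checks above can be very small. The sketch below uses only the Python standard library and assumes a hypothetical record layout (`customer_id`, `kyc_status`, `last_verified`) and a one-year freshness window. Your real schema and thresholds will differ.

```python
from datetime import date

# Hypothetical required fields; replace with your own schema.
REQUIRED_FIELDS = ("customer_id", "kyc_status", "last_verified")


def audit_records(records, today, max_age_days=365):
    """Count records with missing required fields or stale verification dates."""
    report = {"total": len(records), "incomplete": 0, "stale": 0}
    for rec in records:
        if any(rec.get(field) in (None, "") for field in REQUIRED_FIELDS):
            report["incomplete"] += 1
            continue  # incomplete records are not checked for staleness
        if (today - rec["last_verified"]).days > max_age_days:
            report["stale"] += 1
    return report


sample = [
    {"customer_id": "c1", "kyc_status": "verified", "last_verified": date(2024, 11, 2)},
    {"customer_id": "c2", "kyc_status": "", "last_verified": date(2024, 6, 1)},
    {"customer_id": "c3", "kyc_status": "verified", "last_verified": date(2022, 1, 15)},
]
report = audit_records(sample, today=date(2025, 1, 1))
print(report)
```

A report like this does not prove regulatory compliance, but it quickly shows whether a dataset is ready to be considered for training at all.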

Data availability: breaking down internal silos

Many fintechs have strong datasets, but they’re fragmented across systems, teams, or business units. AI needs integrated, accessible data to learn, predict, and act in real time. Best practices include:

- Building unified data lakes or real-time accessible warehouses. Isolated SQL databases and legacy CRM systems create friction that slows or distorts AI output.

- Standardizing data schemas across departments. Fraud, credit, customer service, and risk teams must align data fields and definitions.

- Enabling secure data sharing under strict governance. Cross-team collaboration should not compromise PII protection or suitability.

Infrastructure readiness: scale and security for AI workloads

AI integration isn’t just about models. It’s about the systems that train, deploy, monitor, and scale them securely. Critical areas to assess:

- Cloud readiness and elastic scalability. Modern AI workloads demand dynamic scaling, something rigid on-prem infrastructure often can’t handle efficiently.

- MLOps pipeline maturity. Automated versioning, retraining, and monitoring pipelines are essential for production-grade AI, especially under fintech’s regulatory demands.

- Real-time or near-real-time access to data and models. Fraud detection, credit scoring, and KYC systems often can’t afford batch-processing delays.

Auditing your data and infrastructure doesn’t guarantee AI success, but skipping it almost guarantees failure. Once you understand the true state of your data foundation, the next strategic question becomes: should you build proprietary AI models, customize existing ones, or integrate trusted third-party AI software development solutions?

Step 3. Choose the right AI model strategy: build, customize, or integrate

Once your goals are clear and your data foundation is stable, the next strategic decision is deceptively simple: do you build your own models, customize open-source ones, or integrate third-party solutions?

Each path has its advantages and its risks. The right choice depends on the complexity of your use case, the sensitivity of your data, your regulatory obligations, and your in-house AI maturity. Here’s how you can evaluate the options:

Build (proprietary models)

Best for companies with strong in-house AI talent, unique datasets, or use cases requiring tight control and full explainability.

- Pros: Full ownership, model transparency, easier auditability, tailored performance;

- Cons: Longer time to market, higher resource investment, need for continuous maintenance;

- When it fits: Proprietary scoring systems, niche fraud detection, and regulatory-sensitive workflows.

Customize (open-source or pre-trained models)

Best for teams that want control and speed. Fine-tuning existing models (e.g., language models or classifiers) balances flexibility and efficiency.

- Pros: Faster delivery, reduced R&D costs, some explainability maintained;

- Cons: Requires skilled AI/ML team, dependencies on open-source updates, potential IP concerns;

- When it fits: Chatbots, document classification, and personalization engines.

Integrate (third-party AI services)

Best for companies that need to move fast or lack the resources to build or customize. Often used for non-differentiating but essential services.

- Pros: Speed, minimal development effort, scalable APIs;

- Cons: Black-box risks, less control over model updates, and harder explainability and compliance;

- When it fits: ID verification, KYC, payment fraud monitoring, support automation.

No path is inherently better, but choosing the wrong one can introduce long-term friction, from vendor lock-in to compliance gaps. The key is to match your strategy to your core value proposition: build where you differentiate, integrate where you don’t, and customize where speed and flexibility matter most.

And once your model strategy is defined, the next challenge is embedding the risk controls and governance needed to run it responsibly, right from day one.

Step 4. Embed risk controls into your AI deployment from day one

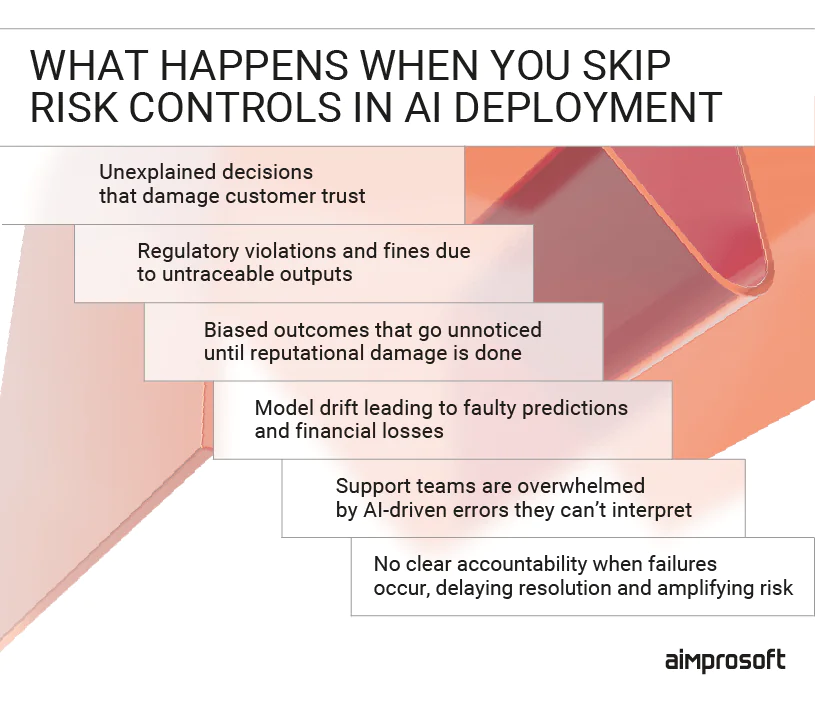

AI integration in fintech doesn’t fail in a vacuum; it fails in production, where poor decisions directly impact users, compliance, and your brand. Once a model is live, issues like bias, drift, or misclassification don’t stay theoretical; they become real operational and legal problems. That’s why every successful AI deployment in fintech starts with a simple rule: build for control from day one.

You must treat risk as part of the system design, not something to layer on once the model is “working.” Whether you’re building models in-house or integrating third-party ones, risk mitigation needs to be woven into how those systems are trained, monitored, and governed.

Importance of risk controls in AI deployment

Build monitoring into production, not as a post-launch patch

AI for fintech is not a “set-and-forget” technology. As data shifts, fraud patterns evolve, or customer behavior changes, models degrade. Without real-time monitoring, these failures will go undetected until business KPIs or regulatory red flags start signaling trouble. What to implement:

- Performance monitoring dashboards tied to business outcomes (false positives, approval rates, etc.)

- Drift detection for both input data and model outputs

- Alerting thresholds that trigger human review or automated rollback paths.
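One common way to detect input drift is the Population Stability Index (PSI), which compares how a feature was distributed in a baseline sample with how it is distributed in recent traffic. The sketch below is a simplified, standard-library implementation under stated assumptions: equal-width buckets derived from the baseline, and a small floor to avoid dividing by zero. A commonly cited rule of thumb treats values above roughly 0.25 as significant drift, but the right alerting threshold is something to calibrate for your own data.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a baseline sample and a recent sample of one numeric feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def bucket_shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor keeps log() defined for empty buckets.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = bucket_shares(expected), bucket_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = list(range(100))          # illustrative feature values at training time
recent = [v + 50 for v in baseline]  # the same feature after a shift in production

print(population_stability_index(baseline, baseline))
print(population_stability_index(baseline, recent))
```

In production, a check like this would typically run on a schedule per feature and per model output, with results feeding the alerting thresholds listed above.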

Make explainability non-negotiable

In fintech, decisions need to be justified, whether to a customer, an auditor, or a regulator. Black-box models may perform well, but if no one can explain why a user was flagged or denied, trust erodes fast. What to implement:

- Model-agnostic explainability tools (e.g., SHAP, LIME) in live systems;

- Audit trails for key decisions tied to customer identity, transaction, or scoring outcome;

- Internal playbooks for customer support and compliance teams on interpreting AI outputs.
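An audit trail is easier to defend when each record is self-describing and tamper-evident. The following sketch shows one possible shape, assuming decisions are logged as JSON-serializable dictionaries and hashed with SHA-256. The field names (`decision_id`, `top_factors`, and so on) are hypothetical, and `top_factors` stands in for whatever attributions your explainability tool produces.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_entry(decision_id, model_version, inputs, outcome, top_factors, prev_hash=""):
    """Build a tamper-evident audit record for one automated decision."""
    record = {
        "decision_id": decision_id,
        "model_version": model_version,
        "inputs": inputs,
        "outcome": outcome,
        "top_factors": top_factors,  # e.g. attributions from an explainability tool
        "recorded_at": datetime.now(timezone.utc).isoformat(),
        "prev_hash": prev_hash,      # links records into a chain
    }
    # Hash a canonical JSON form so any later edit to the record is detectable.
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    record["hash"] = hashlib.sha256(payload).hexdigest()
    return record


def verify(record):
    """Recompute the hash over everything except the stored hash itself."""
    body = {k: v for k, v in record.items() if k != "hash"}
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest() == record["hash"]


entry = audit_entry(
    decision_id="d-001",
    model_version="credit-v3",
    inputs={"income": 52000, "open_loans": 2},
    outcome="declined",
    top_factors=[["open_loans", 0.41], ["income", -0.18]],
)
print(verify(entry))
```

Passing the previous record’s hash as `prev_hash` chains entries together, so removing or reordering records becomes detectable as well.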

Define escalation paths before issues appear

Every AI system will fail at some point, and the question is how quickly and effectively you catch and fix it. Risk controls must include clear routes for intervention, override, and investigation. What to implement:

- Ownership: assign model responsibility across engineering, risk, and compliance;

- Override capability: allow humans to halt or correct decisions in high-risk cases;

- Failure response plans: define how issues are triaged, logged, and resolved across teams.
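Override capability often starts as a simple routing rule that decides which decisions the model may make alone. The sketch below is one hypothetical policy: scores in an uncertain middle band, and any case above a value threshold, go to a human reviewer. The band and the amount are placeholders to be set by your risk and compliance owners.

```python
def route_decision(score, amount, review_band=(0.4, 0.7), high_value=10_000):
    """Decide whether a risk score is applied automatically or sent to a human."""
    low, high = review_band
    if amount >= high_value:
        return "human_review"   # high-stakes cases always get a reviewer
    if score >= high:
        return "auto_decline"
    if score < low:
        return "auto_approve"
    return "human_review"       # uncertain scores fall inside the review band


print(route_decision(score=0.2, amount=500))
print(route_decision(score=0.55, amount=500))
print(route_decision(score=0.2, amount=25_000))
```

Keeping the policy in one small, testable function makes it straightforward to log, audit, and tighten when an incident review calls for it.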

AI solutions for fintech don’t just need to work; they need to work responsibly. The more critical the decision, the tighter the controls must be. Embedding these systems from the start ensures your AI isn’t just innovative, but trustworthy and sustainable. But as your business grows, the real challenge is scaling everything around that AI, namely controls, infrastructure, and decision logic, without losing reliability or speed.

Step 5. Plan for scalability: modular architecture and future-proofing

It’s one thing to make AI work during a pilot. It’s another to ensure it keeps working under pressure across markets, products, and compliance environments. As the AI in fintech market matures, scalability can no longer be an afterthought. Without it built into your architecture from the start, AI becomes a bottleneck instead of an advantage. That’s why technical design choices must be driven by long-term growth, not just short-term delivery. Here’s what to prioritize when future-proofing AI in fintech:

- Design modular pipelines that allow model updates, swaps, or retraining without full system rebuilds;

- Decouple AI components from your core application logic so they can evolve independently;

- Ensure cloud-agnostic deployment to avoid vendor lock-in and optimize for cost and latency;

- Use containerization and orchestration (e.g., Docker, Kubernetes) for scaling workloads dynamically;

- Build with compliance in mind, so audits and policy changes don’t require architectural overhauls;

- Log everything (input, output, decision rationale, etc.) to enable retraining, audits, and model explainability at scale;

- Anticipate multi-model operations: plan how multiple models (fraud, scoring, personalization) will interact and be governed across systems.
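Decoupling AI components from application logic usually comes down to a narrow interface that every model implements. The sketch below illustrates the idea with Python’s `typing.Protocol`. The class names and scoring rules are hypothetical stand-ins, not a recommended fraud model.

```python
from typing import Protocol


class Scorer(Protocol):
    """The only contract application code is allowed to rely on."""
    version: str

    def score(self, features: dict) -> float: ...


class RuleBasedScorer:
    version = "rules-1"

    def score(self, features: dict) -> float:
        return 0.9 if features.get("amount", 0) > 5_000 else 0.1


class ModelScorer:
    version = "model-2"

    def score(self, features: dict) -> float:
        # Stand-in for a call to a real trained model.
        return min(features.get("amount", 0) / 10_000, 1.0)


class ScoringService:
    """Application code depends on this service, never on a concrete model."""

    def __init__(self, scorer: Scorer):
        self._scorer = scorer

    def swap(self, scorer: Scorer) -> None:
        """Replace the underlying model without touching callers."""
        self._scorer = scorer

    def evaluate(self, features: dict) -> dict:
        # Returning the version with every score supports logging and audits.
        return {
            "score": self._scorer.score(features),
            "model_version": self._scorer.version,
        }


service = ScoringService(RuleBasedScorer())
print(service.evaluate({"amount": 6_000}))
service.swap(ModelScorer())
print(service.evaluate({"amount": 6_000}))
```

Because callers only see `ScoringService`, a model can be retrained, replaced, or rolled back without a full system rebuild.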

Scaling AI isn’t just about system load. It’s about architectural flexibility, governance, and readiness for real-world volatility. If you build for scale from the start, you’ll avoid the painful (and expensive) rewrites that come when AI use cases begin to multiply.

Step 6. Ensure cross-functional team alignment: AI isn’t an engineering-only project

Too many AI projects stall or backfire because they’re built in silos. A model may perform perfectly in development, but without input from compliance, product, or customer support, it creates more problems than it solves once it goes live. In fintech, where decisions impact trust, regulation, and money, AI integration must be a shared responsibility from the start. Here’s what strong cross-functional alignment looks like and what happens when it’s missing:

- Product & Engineering disconnect:

When product teams don’t understand model limitations, they overpromise features that can’t be delivered responsibly or launch tools that underperform in production.

How to fix: Align roadmap planning with model capability reviews and risk input.

- Risk & Compliance left out until launch:

Teams often treat compliance as a final checklist. The result: models that violate regulatory boundaries, use unvetted data, or trigger audit failures.

How to fix: Embed legal and risk teams into the model design and review loop early.

- No clear ownership post-launch:

Once a model is live, who’s watching it? Without named owners, performance issues, customer complaints, or fairness concerns get lost.

How to fix: Assign accountability across technical and non-technical teams, not just engineering.

- Customer support teams are kept in the dark:

If the AI rejects a user or flags an issue, support staff need to understand why and how to explain it. Without visibility, trust erodes quickly.

How to fix: Create internal documentation and workflows for support and CX teams to handle AI-driven outcomes.

- Lack of a shared success metric:

Each team defines “AI success” differently: engineering sees precision, compliance sees risk avoidance, and product sees engagement.

How to fix: Set unified success KPIs that reflect both business goals and operational risk tolerance.

The deeper AI goes into your product, the more essential collaboration becomes. Alignment is a structural requirement for scaling AI that won’t break your business from the inside.

Step 7. Pilot, validate, and phase AI into live operations

Launching an AI model into production isn’t the end of the project. It’s the beginning of accountability. Too many fintech companies treat deployment like flipping a switch: the model clears testing, so it goes live across the board. However, in regulated, high-stakes environments, even a high-performing model can fail if rolled out without control. The goal isn’t just to launch but rather to launch without creating chaos.

Successful fintech AI teams treat go-live as a phased process, where each step is designed to test not just model performance, but real-world behavior, customer response, and operational impact.

Stage 1: Controlled pilot in a low-risk environment

- Deploy the model in a restricted environment: a limited user segment, non-critical flow, or internal sandbox.

- Monitor for real-world edge cases that didn’t show up in training or validation, e.g., unusual user behavior, unexpected rejection patterns, or latency issues.

- Use this phase to test supporting systems: alerting, overrides, logging, and support workflows.

- Validate that non-technical teams (compliance, support, risk) are ready to engage with the model in production.

Stage 2: Gradual rollout with A/B or shadow testing

- Run the new model in parallel to existing rules-based or legacy systems (shadow mode) to compare decisions.

- Use A/B testing to expose a percentage of users to the AI system and evaluate business KPIs vs. baseline.

- Actively monitor for performance drift, fairness issues, or increased support ticket volume as exposure increases.

- Keep rollback mechanisms and override paths active; this is where many fintech teams catch silent failures early.
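Both techniques in this stage can be sketched in a few lines. The example below assumes a hash-based assignment for the A/B split, so a given user always lands in the same group and stays in the treatment group as the rollout percentage grows. It also includes a shadow comparison in which only the legacy decision is acted on. The function names, the salt, and the decision rules are hypothetical.

```python
import hashlib


def in_treatment(user_id: str, rollout_percent: int, salt: str = "fraud-model-v2") -> bool:
    """Deterministically assign a user to the AI path for a given rollout percentage."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100 < rollout_percent


def shadow_compare(cases, legacy_decide, candidate_decide):
    """Run both systems on the same cases; only the legacy decision is acted on."""
    disagreements = [c for c in cases if legacy_decide(c) != candidate_decide(c)]
    return {
        "total": len(cases),
        "disagreements": len(disagreements),
        "samples": disagreements[:5],  # keep a few cases for manual review
    }


# Illustrative decision rules on transaction amounts; True means "flag".
def legacy_rule(amount):
    return amount > 5_000


def candidate_model(amount):
    return amount > 10_000


summary = shadow_compare([100, 6_000, 20_000], legacy_rule, candidate_model)
print(summary)
```

The disagreement samples are the most useful output here: reviewing them with risk and compliance shows whether the candidate is better or merely different before any user is exposed to it.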

Stage 3: Full-scale deployment with post-launch governance

- Once the model proves itself at a limited scale, expand to full production, but don’t remove governance layers.

- Maintain retraining schedules, performance benchmarks, and compliance audits post-launch.

- Conduct structured postmortems on the pilot and rollout process: what worked, what broke, and how teams responded.

- Document everything: auditors, regulators, and future teams will need to understand how the system evolved over time.

A controlled, structured rollout allows you to validate not just the model’s math, but your company’s ability to support it, monitor it, and course-correct when reality doesn’t match predictions. With the system live and stabilized, the next challenge is keeping it relevant, compliant, and performant as your fintech business evolves, and that’s where ongoing governance and continuous improvement come in.

How Aimprosoft supports fintechs in secure, scalable AI integration

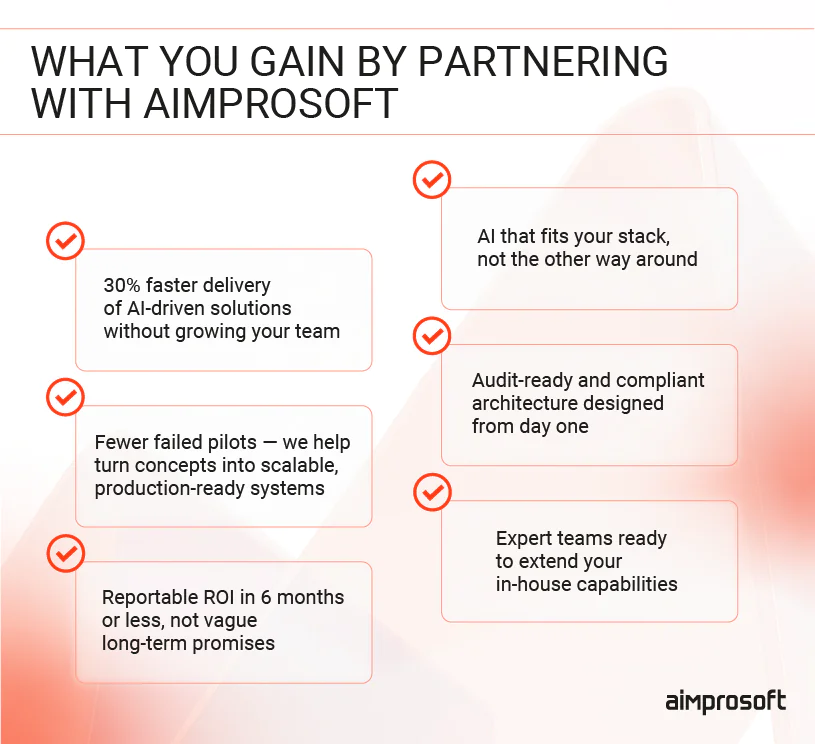

You’ve just seen what it takes to integrate AI into fintech operations, from aligning goals and auditing data to embedding risk controls and managing rollout. These aren’t theoretical best practices. They’re the exact areas we help our clients navigate every day.

At Aimprosoft, we approach AI integration not just as a development task, but as a cross-functional transformation. Our AI framework is designed to address common barriers to AI adoption, helping fintech companies overcome data silos, regulatory complexity, and technical debt while accelerating value across the product lifecycle.

Benefits of partnering with Aimprosoft AI fintech software development company

Here’s how we’ve supported clients in real-world AI integration projects:

Case study 1: Automating document processing for improved OCR accuracy

- Challenge:

A fintech company opted for our fintech software development services to improve the accuracy of its OCR pipeline for processing scanned financial documents and reduce the manual overhead of validating extracted data.

- Our approach:

We developed an AI-powered pipeline that combined OCR with automated document validation and correction. This included building a REST API to process scanned PDFs, extract structured data, and return results in JSON format. We also restructured the data storage layer — replacing MySQL with MongoDB — to better support unstructured financial data.

- Impact:

With the help of fintech custom software development, our partner significantly reduced manual review time while increasing document processing accuracy. Automated validation and correction made financial workflows faster, more scalable, and fully auditable, improving both operational efficiency and data quality.

Case study 2: Enhancing precision and usability for an object measurement platform

- Challenge:

Our client chose our fintech app development services since their platform needed higher measurement accuracy and improved user experience to better serve insurance agents and field workers relying on image-based object assessments.

- Our approach:

We designed and implemented a custom machine learning module for automatic object detection and measurement. The solution used computer vision techniques (OpenCV) and Python-based image analysis models to process input from the mobile device camera and return accurate dimensions in real time. The module was fully integrated into the web and mobile app architecture, ensuring seamless communication between the frontend and backend, and enabling precise 3D model rendering. To support real-time collaboration, we also improved in-app messaging, video calling, and data sharing, and optimized the platform’s scalability using AWS S3 and GCP services.

- Impact:

The AI-driven measurement engine dramatically increased accuracy while reducing manual input. Insurance professionals and field agents now perform faster, more confident assessments using a feature-rich, user-friendly interface, all backed by a high-performance, scalable infrastructure.

Final thoughts: AI integration is a continuous journey, not a one-time project

Successful integration of artificial intelligence in the financial sector doesn’t end with deployment. That’s where the real work begins. Models drift, business goals evolve, and regulations shift. What matters is not just how quickly you adopt AI, but how consistently you adapt it to changing conditions without compromising control, trust, or performance.

Fintech companies that treat AI as infrastructure, not an experiment, are the ones that benefit most. Understanding how AI is used in fintech today, not just in pilots but in real-world, scalable systems, means planning beyond initial deployments: embedding risk controls early, aligning teams, validating in production, and staying ready to scale or recalibrate as needed.

Here’s what to keep in focus as you move forward:

- Start with measurable business goals, not technical ambitions;

- Prepare your data and infrastructure to support AI under real-world pressure;

- Choose the right model strategy for your use case, speed, and risk tolerance;

- Design risk and compliance into your AI fintech workflows, not as afterthoughts;

- Build alignment across technical and non-technical teams from day one;

- Roll out in phases, validating not just model accuracy but operational impact;

- Keep governance in place as you scale: AI is never truly finished.

If you’re ready to move from isolated experiments to AI that delivers real value at scale, our AI implementation consultants are here to help. Contact us to explore how our AI integration services support fintech companies with secure, scalable implementation, from strategy to execution.

FAQ

How long does AI integration typically take in a fintech product?

It depends on the complexity of the use case, data readiness, and your current infrastructure. A well-defined AI implementation strategy can accelerate the process significantly. For relatively contained use cases like KYC automation or fraud scoring, integration can take 3–4 months with the right foundation. More complex, cross-functional applications, such as credit risk modeling with explainability layers, may require 6–12 months. The biggest delays usually stem from poor cross-team alignment or unprepared data systems, not from the model itself.

Should we build AI models in-house or rely on third-party vendors?

It depends on what you’re solving for and how prepared your organization is to support it long-term. Building in-house makes sense when your use case is core to your business model, highly specialized, or requires full control and explainability, such as proprietary scoring algorithms or compliance-critical decision flows. However, this approach also means facing the full weight of AI adoption challenges, from data engineering and model retraining to MLOps and audit-readiness.

For most fintech companies, partnering with a third-party software vendor like Aimprosoft offers a more strategic balance. We, for instance, help clients integrate, customize, or extend AI solutions without overloading internal teams, especially for non-core but high-value tasks like fraud detection, ID verification, KYC automation, or support chatbots. In reality, most successful fintechs combine both strategies: they build where they differentiate, and rely on an expert fintech software development company where speed, scale, and compliance matter most.

What are the biggest risks if we scale AI without proper governance?

Unmonitored models can drift, introduce bias, or make decisions that can’t be explained, which is a major regulatory and reputational risk in fintech. Lack of ownership post-deployment can lead to customer complaints, audit failures, or even model-driven financial losses. Embedding explainability, monitoring, override paths, and escalation processes early is what separates responsible AI integration in fintech from operational liability.