Cost to Host and Scale a Private Large Language Model (LLM) in 2025


In part one of our LLM series, we explained why enterprises are shifting toward private deployments. In part two, we outlined what it takes to build a private LLM stack. The next question is the hardest one: what does it actually cost to host and scale a private large language model in 2025?

The short answer is: it depends. Costs shift dramatically with model size, concurrency, hardware choices, storage needs, networking bandwidth, licensing terms, and team size. A 7B model fine-tuned on a single GPU looks nothing like a 70B+ model spread across a multi-node cluster. Add storage, bandwidth, redundancy, and retraining cycles, and the numbers shift again. But this doesn’t make the question unanswerable. Treat cost as a range shaped by trade-offs, not a fixed quote. In this article, we map the drivers, share realistic monthly expenditure ranges for common scenarios, and show how deployment choices, such as cloud, hybrid, or bare metal, change the math.

Our goal isn’t to hand you a one-size-fits-all calculator. It’s to outline the main levers that shape the cost to host an LLM, so you can plan budgets, weigh architecture choices, and avoid surprises as usage grows.

Key factors affecting the cost to host a private LLM

There’s no single price tag for running a private LLM. Costs depend on a mix of technical and business choices: the model you select, the infrastructure you run it on, and how you plan to scale. Some of these costs are visible on your cloud invoice; others hide in team time and retraining cycles.

Together, they decide whether your deployment stays manageable or turns into a budget sink. Here are the main factors that consistently shape the bill:

| Factor | What it impacts | Cost context (2025) |
|---|---|---|
| Model size & parameter count | Memory footprint, training time, inference efficiency. Larger models need more GPUs and interconnect bandwidth. | 7B–13B models (e.g., LLaMA 3.1 8B, Mistral 7B, Qwen 3 14B, Gemma 2 9B) often fit on a single A100 80GB (~$3–5/hr). 30B–70B (e.g., LLaMA 3.1 70B, Qwen 3 32B, Gemma 3 27B, Mixtral 8×7B) typically need 4–8 GPUs. 70B+ commonly requires 8–16 H100s, pushing spend into the tens of thousands of dollars per month. |
| Compute requirements (GPU/TPU/VRAM) | Primary driver of latency, throughput, and safe concurrency. In private LLMs, GPU class and VRAM dictate how many tokens per second you can generate, which directly impacts efficiency and the effective cost per request. | A100 40GB: ~$2–3/hr; A100 80GB: ~$3–5/hr. H100 80GB: ~$7–12/hr on specialized GPU clouds; $20–40/hr on hyperscalers/managed platforms. Clusters of 8–16 H100s can exceed $50K/month. |
| Storage & networking | Affects how fast models and embeddings load and how much you pay to store and move data; multi-node setups add interconnect costs. | Example sizes: 7B checkpoint ~15–20 GB; 70B often 150–200 GB+ (per checkpoint). Typical costs: SSD/NVMe $0.08–$0.12/GB/month; global egress $0.05–$0.12/GB. At scale, bandwidth can rival storage. |
| Licensing model | Sets whether you pay ongoing license fees and how freely you can tune, host, and migrate the model over time. | Open-weight families (LLaMA 4, Mistral, Qwen 3, Gemma 3) carry no license fees but require in-house staff and infrastructure. Commercial licenses for closed-weight or enterprise editions can range from tens of thousands per year for small-scale rights to well into the high six figures when bundled with redistribution rights, compliance guarantees, and enterprise support. |
| Deployment environment (cloud/bare metal/hybrid) | Changes cost predictability, elasticity, and vendor dependence; impacts how easily you meet compliance and data-residency needs. | On-demand cloud GPUs are easy to start, but expensive in the long run. Bare-metal rentals ($2–3K/month per A100 server) can be cheaper for steady workloads. Hybrid setups often balance cost and compliance. |
| Team & operational overhead | Continuous monitoring, retraining, debugging, and compliance. The “people layer” keeps systems running. | Even a small setup may need 2–3 engineers; an enterprise-scale setup requires 10+ AI ops staff. Annual payrolls often surpass infrastructure spending over time. |
| Scaling & concurrency | The number of simultaneous users/requests you must serve; drives GPU fleet size and networking, so cost can grow faster than traffic. | Internal tools may run on 1–2 GPUs; public-facing workloads at thousands of QPS need clusters, adding tens of thousands/month. |

Notes:

- Latency: time to return a response.

- Throughput: number of responses per second/minute.

- Concurrency: how many requests you can handle at the same time while staying within latency targets.

- TPU: Google’s Tensor Processing Unit accelerator (v5e/v5p), available mainly on Google Cloud. While it offers strong training throughput with JAX/TF (and PyTorch via XLA), the ecosystem for private LLM inference is narrower than for GPUs. In practice, Google Cloud pricing for TPU workloads can differ significantly from standard GPU rates, so cost modeling should account for that.

- VRAM: GPU memory that holds model weights/activations during inference.

- Token: a chunk of text the model reads/writes; many costs are measured “per 1k tokens.”

Model size and parameter count impact on hosting cost

The number of parameters in a model is the single biggest lever on the cost to host an LLM. Every extra billion parameters adds weight files to store, memory to load them, and bandwidth to move them around. The jump isn’t linear: as soon as a model no longer fits on a single GPU, you’re paying not just for more GPUs but also for the overhead of keeping them in sync. That’s why parameter count often decides whether hosting is a manageable monthly bill or a budget shock.

Examples (2025 ballparks): Smaller models in the 7B–13B range (e.g., LLaMA 3.1 8B, Mistral 7B, Qwen 3 14B) can fit comfortably on a single A100 80 GB (~$3–$5/hr in cloud). Move up to a 70B model like LLaMA 3.1 70B, and production-scale serving can call for 8–16 GPUs working together, pushing total spend into the tens of thousands per month.
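To make the single-GPU threshold concrete, here is a minimal sketch that estimates weight memory from parameter count and derives a minimum GPU count. The 2 bytes per parameter (FP16/BF16), the 20% headroom for KV cache and activations, and the function names are illustrative assumptions, not measured values.

```python
import math

def weight_memory_gb(params_billion: float, bytes_per_param: float = 2.0) -> float:
    """Approximate size of the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billion * bytes_per_param

def gpus_needed(params_billion: float, gpu_vram_gb: float = 80.0,
                bytes_per_param: float = 2.0, headroom: float = 0.20) -> int:
    """Minimum GPUs to hold the weights plus an assumed headroom fraction."""
    required_gb = weight_memory_gb(params_billion, bytes_per_param) * (1 + headroom)
    return math.ceil(required_gb / gpu_vram_gb)

for size in (7, 13, 70):
    print(f"{size}B: ~{weight_memory_gb(size):.0f} GB of weights, "
          f"at least {gpus_needed(size)} x 80 GB GPU(s)")
```

This is only a memory floor. Production deployments add GPUs for throughput, concurrency, and redundancy, which is why the GPU counts quoted in this article are higher than the bare minimum.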

Compute requirements: GPUs, TPUs, and VRAM needs

Hardware choice is just as critical as model size. Even if two models share the same parameter count, the accelerator you run them on can dramatically change throughput, latency, and ultimately cost. High-end GPUs with more VRAM not only support larger models but also handle greater concurrency, reducing queueing delays. That capability comes at a steep price, which is why GPU time almost always ends up being the single largest cost driver in hosting private LLMs.

For context, a 7B model such as Mistral 7B can run on a single NVIDIA A100 40GB (~$2–$3/hr in cloud), while upgrading to an H100 80GB (~$7–$12/hr on specialized GPU clouds, and considerably more on some hyperscaler or managed platforms) delivers faster inference and higher throughput. At production scale, a 70B model like LLaMA 3.1 70B can require 8–16 H100s, which at $7–$12/hr per GPU works out to roughly $1.3k–$4.6k per day of continuous use. Bare-metal rentals shift the economics again: leasing a single A100 80GB server at ~$2k–$3k/month can become more cost-efficient than cloud when workloads are steady.

While GPUs are the default choice, TPUs (such as Google’s v5e or v5p) are also viable for transformer inference and training in some regions. They can offer competitive performance and pricing, but availability, ecosystem maturity, and networking options vary. For that reason, most enterprises treat TPUs as an alternative worth piloting before making large-scale commitments.

Storage, bandwidth, and networking costs

Private deployments are not just about GPUs. Model weights, fine-tuned variants, embeddings, logs, and monitoring data accumulate quickly, requiring high-performance SSD or NVMe storage for production use. Multi-node setups also rely on fast interconnects (e.g., 100 GbE or InfiniBand), while serving users across regions adds bandwidth costs that often catch teams by surprise. These hidden items can quietly rival compute if not planned for.

For example, a 7B checkpoint (e.g., Mistral 7B, Gemma 7B) is usually 15–20 GB, while a 70B-class model such as Qwen 2.5 72B often takes 150–200 GB or more just for weights. Cloud SSD/NVMe typically runs $0.08–$0.12/GB/month. Cross-region data egress charges of $0.05–$0.12/GB mean that high-traffic deployments can end up paying as much for bandwidth as for storage, unless caching, colocated compute, or CDNs are built into the architecture.
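The storage and egress arithmetic can be sketched as follows. The unit prices sit inside the ranges quoted above; the checkpoint count, log volume, and monthly egress volume are hypothetical inputs chosen only for illustration.

```python
def monthly_storage_cost(stored_gb: float, price_per_gb_month: float) -> float:
    """Flat monthly charge for data at rest."""
    return stored_gb * price_per_gb_month

def monthly_egress_cost(egress_gb: float, price_per_gb: float) -> float:
    """Charge for data leaving the region or provider during the month."""
    return egress_gb * price_per_gb

# Hypothetical deployment: five 70B checkpoints (~200 GB each) plus 500 GB of logs/embeddings.
stored_gb = 5 * 200 + 500
egress_gb = 2_000  # assumed cross-region traffic per month

storage = monthly_storage_cost(stored_gb, 0.10)  # $0.10/GB/month
egress = monthly_egress_cost(egress_gb, 0.08)    # $0.08/GB
print(f"storage: ${storage:,.0f}/month, egress: ${egress:,.0f}/month")
```

With these made-up volumes the two line items land in the same range, which is the pattern the paragraph above describes: storage grows with what you keep, egress grows with traffic.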

Licensing fees: open-source vs. commercial large language models

Licensing defines both your recurring costs and the amount of control you retain over the model. Open-weight models, such as LLaMA 4, Mistral, Qwen 3, or Gemma 3, are generally free of direct license fees, but they’re not cost-free. You still pay for integration, fine-tuning, infrastructure, and the teams that keep them running. Within this group, licenses vary: some are fully permissive (e.g., Apache 2.0, MIT), while others come with custom terms (e.g., Meta’s LLaMA license, Google’s Gemma license) that may limit redistribution or large-scale commercial use.

By contrast, commercial private LLMs introduce explicit licensing costs. These may take the form of annual contracts, per-query fees, or per-seat pricing. In exchange, enterprises often gain dedicated vendor support, indemnification, and compliance assurances that aren’t guaranteed with open-weight models. At scale, however, these contracts can easily outpace infrastructure costs, ranging from tens of thousands of dollars per year for limited deployments to millions for enterprise-wide use.

This makes licensing a strategic fork in the road: start with open-weight models for flexibility and lower upfront expense, or commit to commercial licensing when regulatory, legal, or operational needs demand stronger vendor backing.

Team and operational overhead

Infrastructure doesn’t maintain itself; teams do. Running a private LLM means budgeting not only for GPUs but also for the human layer, including monitoring, retraining, debugging, compliance reviews, and incident response. As deployments grow, this layer often becomes the single largest recurring expense, surpassing even hardware costs.

For instance, a modest setup might be covered by 2–3 engineers handling DevOps, MLOps, and security. An enterprise-scale deployment, however, can require 10+ AI-ops staff, with annual payroll exceeding infrastructure spend in many regions. Ignoring this factor leads to brittle systems and surprise budget overruns.

Scalability and concurrency demands

The number of users and requests your system must handle is another factor that multiplies costs. An assistant serving one department is inexpensive; serving thousands of concurrent customer queries is an entirely different scale. Concurrency requires GPU clusters, low-latency networking, and regional load balancing. Cost also does not scale smoothly with traffic: capacity comes in whole GPUs (often whole nodes), so spend rises in steps rather than in proportion to demand.

For example, hundreds of requests per minute might run comfortably on a few A100s, while thousands of QPS typically require entire clusters, adding tens of thousands to monthly spend. This is why most organizations scale in stages: launch small, measure demand, and expand only when usage justifies the cost.
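A small sketch of why capacity grows in steps: the GPU count is a ceiling function of load. The per-GPU throughput figure is an assumed placeholder, since real values depend on model, hardware, and latency targets.

```python
import math

def gpus_for_load(required_tokens_per_s: float, tokens_per_s_per_gpu: float) -> int:
    """Whole GPUs needed for a target load; capacity cannot be bought in fractions."""
    return max(1, math.ceil(required_tokens_per_s / tokens_per_s_per_gpu))

PER_GPU = 2_500  # assumed sustained tokens/s per GPU within latency targets

for load in (1_000, 2_500, 2_600, 10_000, 10_100):
    print(f"{load:>6} tokens/s -> {gpus_for_load(load, PER_GPU)} GPU(s)")
```

Crossing a threshold by a small margin (2,500 to 2,600 tokens/s here) adds a full GPU, which is one reason staged scaling with measured demand tends to be cheaper than provisioning for a guessed peak.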


Taken together, these drivers set the floor (and the ceiling) of your private LLM budget. Next, we’ll translate them into realistic 2025 cost tiers so you can see where your deployment is likely to land.

Approximate private large language model pricing ranges in 2025

In the previous section, we examined the individual drivers of private LLM pricing: model size, hardware, storage, licensing, team and operational overhead, and scalability. Each pushes the budget in a different way, and the price points above show how quickly expenses can escalate.

Here, we group those drivers into practical deployment tiers for a side-by-side cost comparison. A tier is not a single factor but a common bundle of model size, hardware, concurrency, and hosting strategy that organizations adopt in practice. Framing costs this way makes it easier to see where your needs might land, whether you’re experimenting with a single GPU or serving global-scale inference across clusters.

We’ll also examine hosting strategy on its own, since cloud, bare metal, and hybrid setups shift the total cost just as much as model size does.

Entry-level (small private LLMs, single GPU)

This tier covers models in the 7B–13B range, such as LLaMA 3.1 8B, Mistral 7B, Qwen 3 14B, or Gemma 2 9B. They typically run on a single high-memory GPU, making them attractive for experimentation and departmental tools.

Best for: early-stage prototypes, internal copilots, PoCs, or low-traffic assistants where uptime and latency are not mission-critical.

Hosting options: At this scale, teams often start in the cloud for maximum flexibility, for example, an A100 40 GB at $2–3/hr or an H100 80 GB at $4–12/hr, depending on the provider. That translates to approximately $1.5K–5K/month if the instance runs continuously. For steady workloads, bare-metal rentals (e.g., a dedicated A100 80 GB server at $2K–3K/month) become more cost-efficient. Many teams adopt a hybrid usage pattern: cloud for spiky dev/test, bare metal for stable inference.

Mid-tier (multi-GPU, enterprise-ready)

As workloads move into the 30B–70B range (e.g., LLaMA 3.1 70B, Mixtral 8×7B, Qwen 3 32B), hosting requires multiple GPUs and higher-bandwidth networking. Multi-node orchestration and concurrency handling become necessary, and costs rise accordingly.

Best for: enterprise copilots, production deployments with moderate concurrency, or customer-facing tools with defined SLAs (service-level agreements).

Hosting options: Many organizations pilot on the cloud for speed but migrate to bare metal once demand stabilizes. In cloud, 4–8× H100s typically cost $15K–40K/month depending on concurrency and region. Equivalent bare-metal setups (Vultr, Crusoe, Lambda, etc.) are usually $8K–20K/month and deliver better economics for steady usage. Hybrid strategies are common: stable inference on bare metal, cloud for overflow or testing cycles.

Enterprise (70B+ parameters, high concurrency)

Global-scale workloads serving thousands of concurrent users fall into this category. Models in the 70B–100B+ range, or smaller models under high-concurrency inference, often demand 8–16+ GPUs plus specialized ops teams.

Best for: SaaS platforms, enterprise-scale assistants, and regulated sectors (finance, healthcare, telecom) where latency, compliance, and reliability are non-negotiable.

Hosting options: Cloud elasticity can absorb peaks but becomes expensive for continuous loads, for example, 8–16 H100s at $7–12/hr each can total $50K–150K/month when running 24/7. Many enterprises anchor on bare metal for predictable demand, leasing long-term clusters (e.g., from Lambda or Crusoe) at six figures annually with better per-unit economics. Hybrid is used more selectively here, mainly for geo-distribution or handling overflow.

While each tier has nuances, the core trade-offs are easiest to compare side by side. The table below summarizes the typical model sizes, hardware, LLM hosting pricing ranges, and best-fit use cases for each scale.

| Tier | Model size | Typical hardware | Monthly active cost (2025) | Best for |
|---|---|---|---|---|
| Entry-level | 7B–13B | 1× A100/H100 GPU | ~$1.5K–$5K | Prototypes, PoCs, internal copilots |
| Mid-tier | 30B–70B | 4–8× H100 GPUs | ~$15K–$40K | Enterprise copilots, moderate-concurrency apps |
| Enterprise | 70B–100B+ | 8–16× H100 GPUs (clusters) | ~$50K–$150K+ | SaaS platforms, regulated large-scale workloads |

To grasp these tiers in real-world context, take a look at the following hypothetical scenarios and see how infrastructure choices and workload scale influence overall budgets:

- Startup scenario: A small AI productivity startup fine-tunes a 7B model on a single A100 GPU in the cloud for internal copilots. Continuous 24/7 uptime runs about $2K/month, but costs drop by roughly a third when moving the same workload to bare metal.

- Mid-size enterprise: A software company deploying an internal support assistant on a 32B model uses 4× H100 GPUs, paying around $20K–$25K/month on cloud infrastructure. After six months, they shift inference to a hybrid model (bare metal for steady load, cloud for spikes) and cut spending by ~30%.

- Global SaaS provider: A large platform running 16× H100 GPUs for multilingual customer service operates at roughly $100K–$120K/month. Most GPUs are leased through a long-term bare-metal contract, while the cloud covers overflow capacity during regional demand peaks.

As you can see, costs scale not only with model size but also with where and how the model is hosted. Let’s look at how cloud, bare-metal, and hybrid setups compare in practice.

Bare metal vs. cloud hosting vs. hybrid

The cost to host a large language model isn’t only shaped by the model tier you choose; it also depends on where you run it. The same GPU can cost radically different amounts depending on whether it’s rented on-demand in the cloud, provisioned as bare metal, or split across a hybrid setup. Most enterprises now weigh all three options.

- Cloud shines for flexibility: you pay only when you use it, ideal for experiments, PoCs, and irregular usage patterns. The trade-off is a higher per-unit cost and bills that balloon if workloads become steady.

- Bare metal needs more operational expertise and longer commitments, but once workloads stabilize, it cuts per-GPU costs by ~20–40% and delivers predictable performance.

- Hybrid is increasingly common: organizations start in the cloud, migrate stable inference to bare metal, and keep cloud capacity for spikes, global distribution, or retraining jobs. This mix balances agility with long-term cost control.

| Hosting model | Pros | Cons | Best for | Average 2025 costs |
|---|---|---|---|---|
| Cloud | Flexible, on-demand, no upfront hardware | Higher hourly rates, risk of ballooning bills, vendor lock-in | Prototypes, PoCs, irregular usage patterns, global reach | A100 40 GB $2–3/hr; H100 80 GB $4–12/hr |
| Bare metal | Lower cost per GPU long-term, predictable performance, less lock-in | Requires setup/ops expertise, less elastic scaling | Stable production inference, cost-sensitive deployments | A100 80 GB server $2K–3K/month; 4–8× H100 $8K–20K/month |
| Hybrid | Cloud agility + bare-metal economics, supports scaling & compliance | More complex to manage/orchestrate | Enterprises balancing experimentation with steady load | Often 20–30% lower TCO (total cost of ownership) vs pure cloud (mature setups) |
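The cloud-versus-bare-metal trade-off reduces to a break-even calculation. The sketch below assumes a flat monthly bare-metal fee, pure hourly cloud billing, and one comparable GPU on each side; the $2,500/month and $4/hr inputs are picked from the ranges quoted in this article.

```python
HOURS_PER_MONTH = 720

def break_even_hours(bare_metal_monthly: float, cloud_hourly: float) -> float:
    """Cloud hours per month above which the flat bare-metal fee is cheaper."""
    return bare_metal_monthly / cloud_hourly

def cheaper_option(hours_used: float, bare_metal_monthly: float, cloud_hourly: float) -> str:
    """Compare pay-per-hour cloud spend with a flat monthly bare-metal fee."""
    cloud_cost = hours_used * cloud_hourly
    return "bare metal" if bare_metal_monthly < cloud_cost else "cloud"

hours = break_even_hours(bare_metal_monthly=2_500, cloud_hourly=4.0)
print(f"break-even at {hours:.0f} h/month ({hours / HOURS_PER_MONTH:.0%} of the month)")
print("200 h/month:", cheaper_option(200, 2_500, 4.0))
print("720 h/month:", cheaper_option(720, 2_500, 4.0))
```

Below the break-even point, on-demand billing wins because idle hours cost nothing; above it, the flat fee wins. Operational effort for bare metal is not modeled here and would push the real break-even somewhat higher.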

While these hosting models differ in flexibility and long-term efficiency, the actual spend can still vary dramatically within the same category. Cloud providers use different pricing formulas, discounts, and regional rates, which means the same GPU may cost significantly more or less depending on where and how it’s provisioned. Comparing these options helps clarify which platform offers the best balance of performance and predictability for your workload.

Below is a comparative snapshot of typical 2025 pricing across major cloud providers to illustrate these differences:

| Provider | Example GPU | Typical hourly rate (2025) | Notes |
|---|---|---|---|
| AWS | NVIDIA H100 80 GB | $4.5–$12/hr | Flexible scaling, but costs run higher under steady load |
| Azure | NVIDIA H100 80 GB | $3.8–$9/hr | Strong enterprise integration; pricing varies by region and reservation term |
| GCP | NVIDIA H100 80 GB | $4–$10/hr | Often paired with TPUs; region-based pricing similar to AWS/Azure |

The overall pattern remains consistent: most teams start in the cloud to move fast, later shift stable workloads to bare metal for efficiency, and maintain hybrid capacity for flexibility. This progression has become the default path for private LLM adoption.

Understanding the large language model cost ranges, both by hosting model and by cloud provider, is only the first step. To make budgets predictable, you also need to know how providers actually calculate bills.

How costs are calculated

In private LLM deployments, three dimensions dominate the bill: GPU-hour usage, infrastructure overhead, and engineering operations. Together, they form the true total cost of ownership (TCO).

GPU-hour pricing and usage

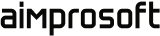

GPU time is the single biggest cost driver. Whether you rent from the cloud or lease bare metal, billing is tied to the number of hours your GPUs are active. In 2025, typical rates are:

- A100 40GB: $2–3/hr

- A100 80GB: $3–5/hr

- H100 80GB: $7–12/hr (provider/region dependent)

At these rates, a single GPU billed for all ~720 hours in a month costs roughly $1.4K–3.6K for an A100 and $5K–8.6K for an H100. If you pay only for active hours and run at 60–70% utilization, that falls to about $0.9K–2.5K and $3K–6K respectively. At the cluster scale, 8–16 H100s can push total spend into the $40K–150K/month range, depending on concurrency and workload efficiency.
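The monthly figures follow directly from hourly rate times billed hours. A minimal sketch, assuming a 720-hour month; `billed_fraction` is an illustrative parameter for setups where you pay only for active hours.

```python
HOURS_PER_MONTH = 720

def monthly_gpu_cost(hourly_rate: float, gpus: int = 1, billed_fraction: float = 1.0) -> float:
    """Monthly spend for `gpus` accelerators billed for a fraction of the month."""
    return hourly_rate * HOURS_PER_MONTH * gpus * billed_fraction

rates = {"A100 40GB": (2, 3), "A100 80GB": (3, 5), "H100 80GB": (7, 12)}
for name, (low, high) in rates.items():
    print(f"{name}: ${monthly_gpu_cost(low):,.0f}-${monthly_gpu_cost(high):,.0f} per month, always on")

# Cluster view: 8 H100s at the low rate versus 16 at the high rate.
print(f"cluster: ${monthly_gpu_cost(7, gpus=8):,.0f}-${monthly_gpu_cost(12, gpus=16):,.0f} per month")
```

Reserved or committed pricing would lower the hourly rate rather than the billed hours, so it can be modeled by changing `hourly_rate` alone.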


The key variable here is utilization. If workloads do not fully occupy the GPU (for example, if inference jobs are sporadic or scheduled inefficiently), then effective costs rise sharply. A system running at 10% utilization can end up costing ten times more per processed request than one that keeps its hardware consistently busy. This makes GPU-hour management one of the most critical aspects of private LLM budgeting.
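The utilization effect can be checked with a few lines of arithmetic. The hourly rate and peak throughput below are assumed placeholders; only the ratio between the two results matters.

```python
def effective_cost_per_1k_tokens(hourly_rate: float, peak_tokens_per_s: float,
                                 utilization: float) -> float:
    """Cost per 1,000 generated tokens when the GPU is busy only part of the time."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    tokens_per_hour = peak_tokens_per_s * 3600 * utilization
    return hourly_rate / tokens_per_hour * 1000

busy = effective_cost_per_1k_tokens(hourly_rate=10.0, peak_tokens_per_s=2_500, utilization=1.0)
idle = effective_cost_per_1k_tokens(hourly_rate=10.0, peak_tokens_per_s=2_500, utilization=0.10)
print(f"fully busy: ${busy:.5f} per 1k tokens")
print(f"10% busy:   ${idle:.5f} per 1k tokens ({idle / busy:.0f}x)")
```

Because the hourly bill is fixed while useful output shrinks, cost per token scales with the inverse of utilization.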

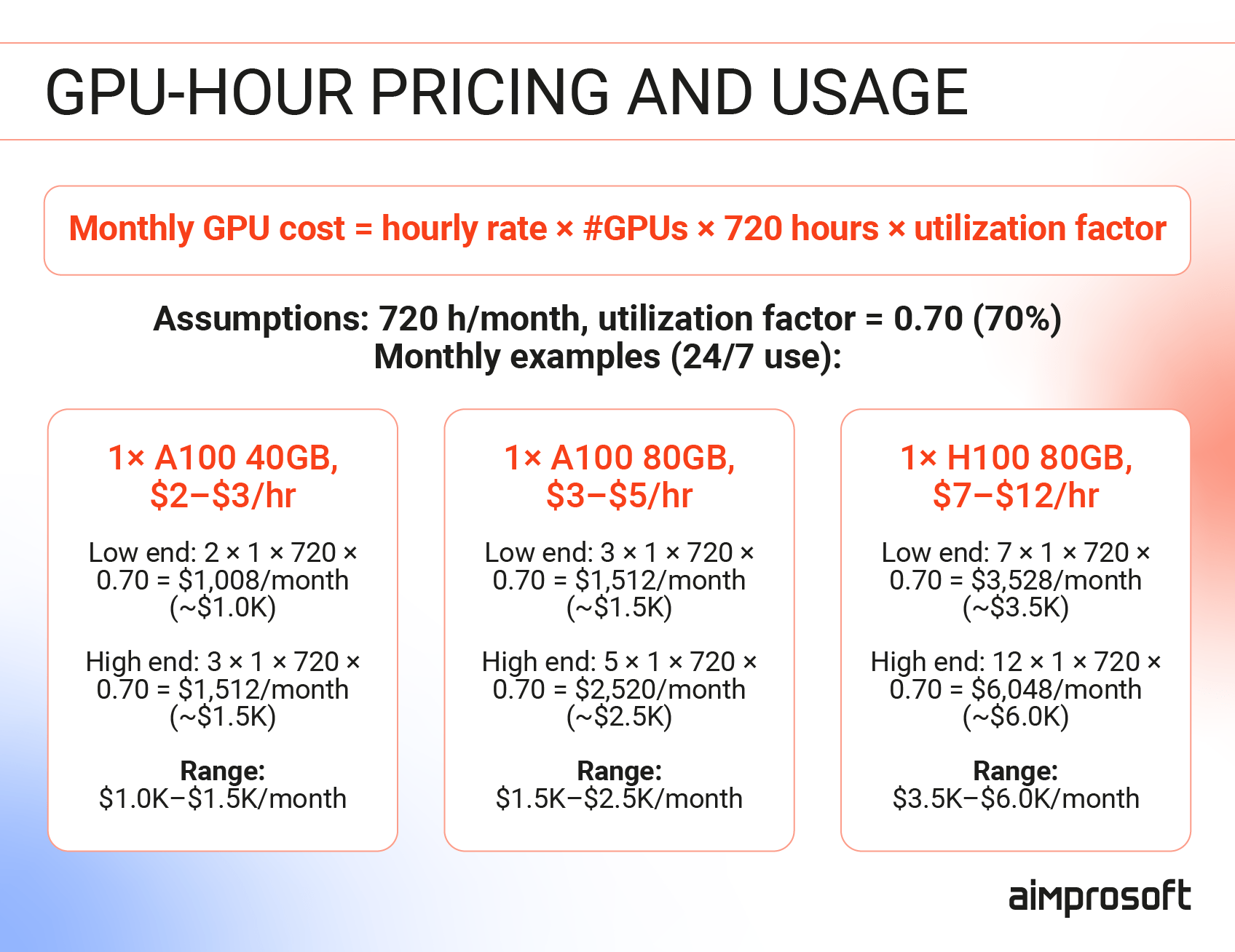

Infrastructure and deployment overheads

GPU time is only the baseline. Private large language model hosting also brings supporting infrastructure costs that can easily add thousands per month. Storage is often one of the biggest of these: checkpoints, fine-tuned variants, and logs accumulate quickly. A 7B model often takes 15–20 GB, while a 70B+ model can exceed 200 GB. With cloud SSD/NVMe priced at $0.08–0.12/GB/month, just storing models and logs can run into hundreds of dollars.

Networking adds another layer. Global deployments face egress charges of $0.05–0.12/GB. At scale, serving millions of requests can make bandwidth bills rival or even surpass storage costs.

Then comes orchestration — the invisible glue. Monitoring, autoscaling, redundancy, and failover systems are not included in GPU-hour billing, but without them, production setups often fail. These layers are why real-world budgets often overshoot once a project leaves the prototype stage.


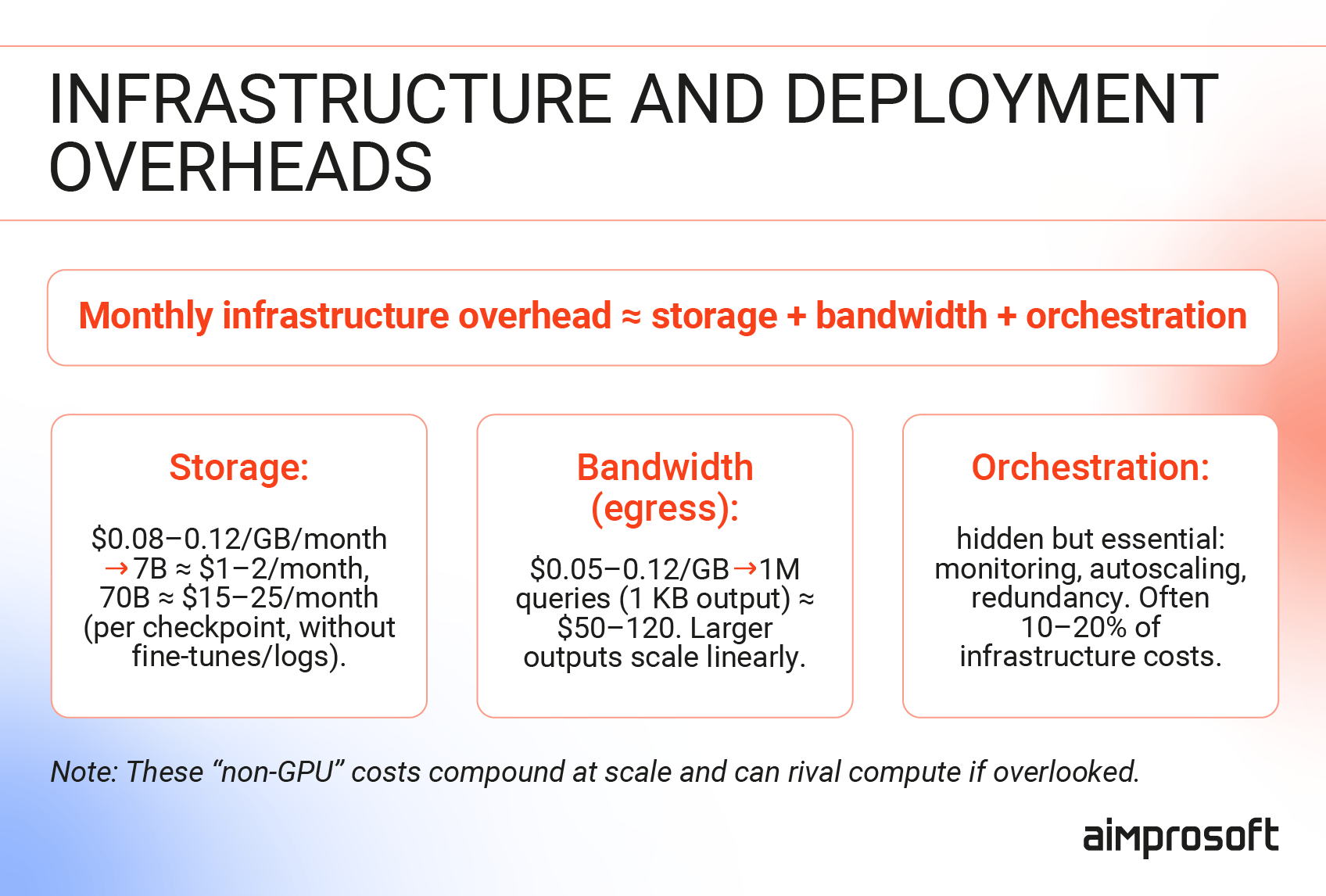

Engineering and operational overhead

Finally, hardware doesn’t keep itself running. Private deployments require people (DevOps, MLOps, and security staff) to manage retraining, updates, monitoring, and compliance.

- Small deployments: 2–3 engineers may be enough.

- Enterprise deployments: 10+ full-time staff is common.

Unlike GPUs, you can’t “switch off” engineers during idle time. Over the long term, salaries often outstrip infrastructure costs, making people the largest line item in TCO.
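To see how the three cost dimensions combine, here is a rough TCO sketch. Every number in it is hypothetical, in particular the fully loaded cost of $12,000 per engineer per month, which is an assumption for illustration and not a figure from this article.

```python
from dataclasses import dataclass

@dataclass
class MonthlyTco:
    gpu: float             # GPU-hour spend
    infrastructure: float  # storage, networking, orchestration
    staff: float           # fully loaded engineering cost

    @property
    def total(self) -> float:
        return self.gpu + self.infrastructure + self.staff

    def share(self, item: str) -> float:
        """Fraction of total TCO taken by one line item."""
        return getattr(self, item) / self.total

# Hypothetical small deployment: $20K of GPU time, $3K of supporting
# infrastructure, and three engineers at an assumed $12K/month each.
tco = MonthlyTco(gpu=20_000, infrastructure=3_000, staff=3 * 12_000)
for item in ("gpu", "infrastructure", "staff"):
    print(f"{item:>14}: {tco.share(item):.0%}")
print(f"         total: ${tco.total:,.0f}/month")
```

With these inputs, staff is the largest share even for a three-person team, which illustrates the point above about people becoming the largest line item over time.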



In short, GPU time, supporting infrastructure, and the human layer of operations are what truly determine the bill. The next question is who carries that burden: your own team with self-hosted infrastructure, or a managed provider.

Self-hosting vs managed hosting: cost & strategy

Once you understand how custom LLM deployment pricing is calculated, the next decision is operational: should your team run the model in-house, or rely on a managed provider? The choice between self-hosting and managed hosting defines not only your budget but also how much control and flexibility you retain. Both paths are viable, but they fit very different business contexts.

Self-hosting private LLMs

Running your own infrastructure gives you full control over the environment and data. You decide how models are deployed, which optimizations are applied, and how compliance requirements are met. This is often the only acceptable path for highly regulated industries such as healthcare, finance, or telecom, where data residency and auditability are non-negotiable.

The trade-off is complexity. Self-hosting requires a dedicated operations team, significant upfront investment in hardware or long-term rentals, and continuous monitoring. Yet for organizations with steady, predictable workloads and a need for deep customization, the long-term economics can be more favorable. Costs per GPU drop, vendor lock-in risks are reduced, and infrastructure can be fine-tuned to specific business needs.

Managed hosting for private LLMs

Managed hosting shifts the operational burden to an external IT partner. Instead of building an in-house platform team, you rely on vendor specialists to design, deploy, monitor, and scale the system. This includes infrastructure planning, compliance support, and performance optimization. The trade-off is higher ongoing spend and less freedom to adapt everything to your exact standards, but you gain faster time-to-market, reduced staffing requirements, and smoother scaling.

For many organizations, managed hosting is the pragmatic starting point. It allows them to experiment, prove value, and scale early use cases without heavy upfront commitments. However, managed LLM hosting pricing can add up as workloads grow, prompting teams to later migrate stable, high-volume workloads to self-hosted clusters for better cost control.

| Aspect | Self-hosting | Managed hosting |
|---|---|---|
| Cost structure | Higher upfront CapEx (capital expenditure) for hardware purchase or long-term rentals. Lower OpEx (operational expenditure) once workloads stabilize, but payroll, monitoring, and maintenance stay in-house. | Vendor fees cover expertise, operations, and support. Costs are more predictable and reduce the need to hire a full in-house platform team. |
| Control | Full control over infrastructure, tuning, and data handling. | Shared control: provider manages deployment, updates, and optimization; customization depends on the contract. |
| Compliance | Strong fit for regulated industries where residency, auditability, and certifications must be enforced internally. | Compliance depends on the vendor’s certifications (e.g., GDPR, HIPAA). This reduces in-house burden but ultimate accountability stays with the enterprise. |
| Flexibility | Highly customizable; can integrate tightly with internal systems and workflows. | Faster deployment and scaling thanks to vendor frameworks and approaches, but flexibility is limited to the provider’s supported tools and practices. |
| Data privacy | Full internal control over sensitive data. | Dependent on the vendor’s policies, practices, and SLAs. Some offer zero-retention and strict guarantees. |
| Best for | Regulated sectors, long-term stable workloads, and deep customization needs. | Early pilots, teams without infrastructure capacity, or enterprises preferring to outsource platform operations. |

In practice, many companies blend both approaches: starting with managed hosting to get projects off the ground, then moving stable workloads to self-hosted clusters while keeping external capacity for overflow or experiments. Choosing the right path isn’t always straightforward. Some organizations partner with external vendors to evaluate trade-offs, design hybrid strategies, and avoid costly missteps.

Whichever route you take, the next challenge is the same: keeping costs sustainable. In the following section, we’ll look at proven strategies to optimize spend without sacrificing performance.

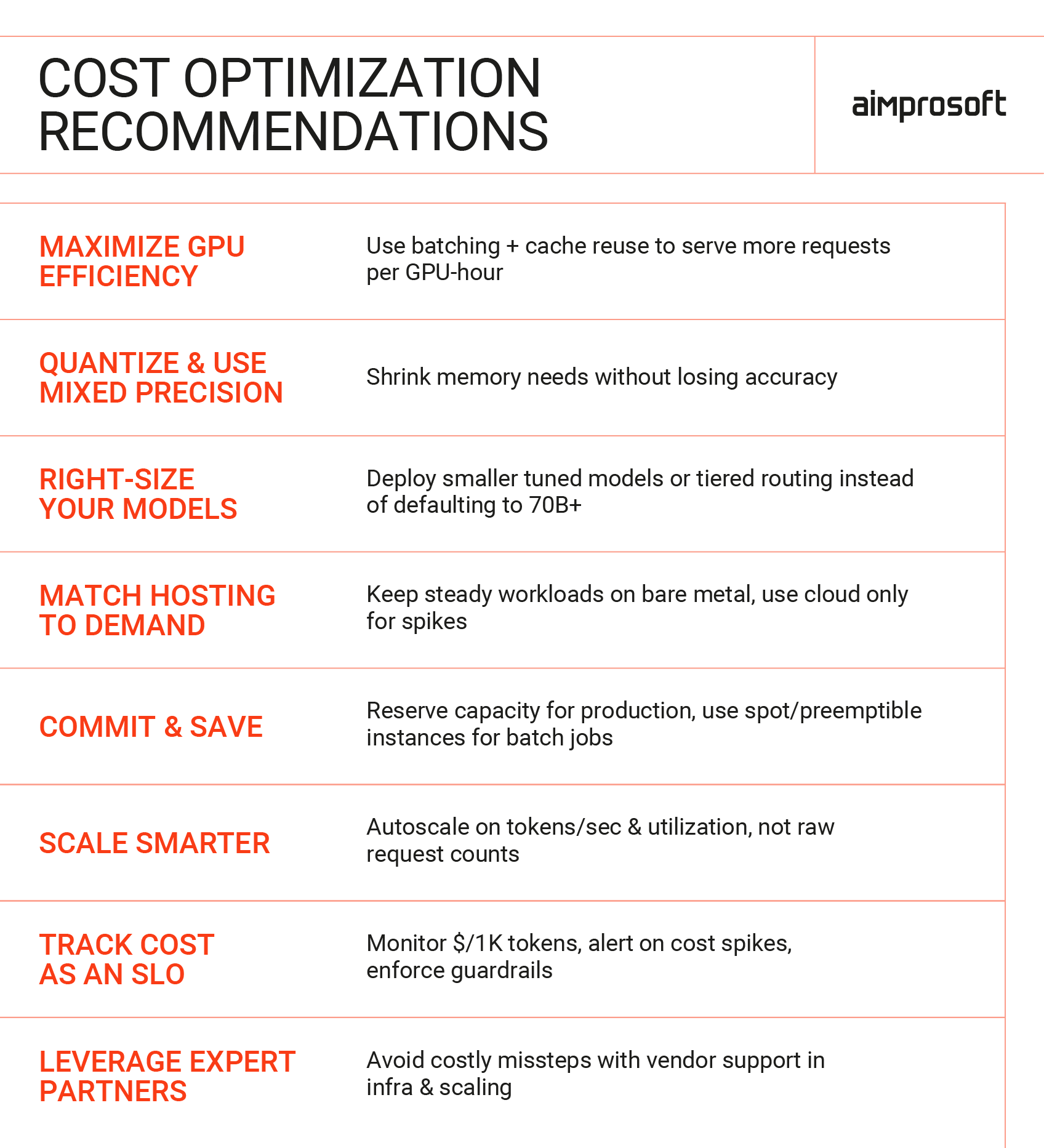

Cost optimization strategies

Effective cost optimization for LLM hosting starts with understanding where the money actually goes. Before diving into tactics, let’s briefly recap the baseline ranges we outlined earlier. These numbers reflect the monthly operational costs of running private LLMs (compute, storage, networking, licensing, and infrastructure overhead), not one-time development.

- Entry-level (7B–13B, single GPU): ~$1.5K–$5K/month

- Mid-tier (30B–70B, 4–8 GPUs): ~$15K–$40K/month

- Enterprise (70B–100B+, 8–16 GPUs): ~$50K–$150K+/month

Across all tiers, the dominant cost drivers are consistent: GPU time, storage and bandwidth, licensing, and operational overhead. With that in mind, here’s how you can bring costs under control without sacrificing performance or reliability.


1. Get more out of each GPU

Dynamic batching allows multiple requests to be processed together, increasing throughput without breaking latency targets. Paired with KV-cache reuse for multi-turn chats and prefix caching for repeated prompts, it avoids recomputing the most expensive steps. Together, these techniques let each GPU handle more work per hour, lowering the effective cost per token.
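The grouping rule behind dynamic batching can be illustrated with a small simulation. This is a sketch of the idea only, not how any particular inference server implements it; `max_batch_size` and `max_wait_ms` are hypothetical tuning knobs.

```python
from typing import List

def form_batches(arrivals_ms: List[float], max_batch_size: int,
                 max_wait_ms: float) -> List[List[float]]:
    """Group request arrival times into batches.

    A batch closes when it is full, or when the next request arrives more
    than max_wait_ms after the first request in the batch.
    """
    batches: List[List[float]] = []
    current: List[float] = []
    for t in sorted(arrivals_ms):
        if current and (len(current) >= max_batch_size or t - current[0] > max_wait_ms):
            batches.append(current)
            current = []
        current.append(t)
    if current:
        batches.append(current)
    return batches

arrivals = [0, 2, 3, 15, 16, 17, 18, 40]
for batch in form_batches(arrivals, max_batch_size=4, max_wait_ms=10):
    print(f"batch of {len(batch)}: {batch}")
```

A larger `max_wait_ms` yields fuller batches and higher throughput at the price of extra latency for the first request in each batch, which is the trade-off to tune against your latency targets.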

2. Use quantization and mixed precision wisely

Reducing precision (e.g., INT8, FP16, FP8) cuts memory use and speeds up inference while often maintaining accuracy. With a smaller footprint, the same workload fits on fewer GPUs. For fine-tuning, approaches like QLoRA allow low-cost adaptation without retraining full weights. Always validate accuracy against domain-specific data. If INT8 holds up, the savings are essentially free.
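As a toy illustration of what reducing precision means, the sketch below applies symmetric (absmax) INT8 quantization to a handful of weights and measures the round-trip error. Production schemes are more elaborate (per-channel or per-group scales, calibration data), so treat this as the basic idea rather than a deployable method; the sample weights are made up.

```python
from typing import List, Tuple

def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric absmax quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale

def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from integers and the shared scale."""
    return [v * scale for v in quantized]

weights = [0.12, -0.5, 0.33, 0.9, -0.07]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print("quantized:", q)
print(f"scale: {scale:.5f}, max round-trip error: {max_error:.5f}")
```

Each weight now needs one byte instead of two (FP16), halving the memory footprint. The round-trip error is bounded by half the scale, which is why validating accuracy on domain-specific data remains the deciding step.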

3. Right-size models instead of defaulting to 70B+

A massive model isn’t always the best answer. In many cases, a fine-tuned 13B or 30B model can match the accuracy of a generic 70B model while costing a fraction to run. Some teams also route queries intelligently: smaller models handle routine tasks, escalating only complex or high-stakes requests to larger ones. This keeps quality where it matters while lowering the average cost per request.
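A minimal sketch of query routing and its effect on average cost. The routing rule (word count plus a high-stakes flag) and the per-request costs are hypothetical; real routers usually rely on classifiers or confidence signals.

```python
def route(prompt: str, high_stakes: bool = False, max_small_words: int = 200) -> str:
    """Toy routing rule: long or high-stakes prompts go to the large model."""
    if high_stakes or len(prompt.split()) > max_small_words:
        return "large"
    return "small"

def blended_cost(small_share: float, cost_small: float, cost_large: float) -> float:
    """Average cost per request when small_share of traffic stays on the small model."""
    return small_share * cost_small + (1 - small_share) * cost_large

print(route("How do I reset my password?"))
print(route("Review this contract clause.", high_stakes=True))

# Assumed per-request costs: $0.002 on the small model, $0.02 on the large one.
average = blended_cost(small_share=0.8, cost_small=0.002, cost_large=0.02)
print(f"average cost per request: ${average:.4f} versus $0.0200 for large-only")
```

The savings depend entirely on how much traffic the small model can handle at acceptable quality, so the share itself is something to measure rather than assume.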

4. Match hosting to workload patterns

Keep steady workloads on reserved or bare-metal GPUs, where per-unit cost is lowest. Use cloud for spikes, pilots, or regional overflow. Hybrid strategies are now common: stable inference runs on dedicated hardware, with cloud capacity added only during surges. Once workloads stabilize, this approach can reduce costs by 20–40%.

5. Commit to reserved capacity and use spot instances safely

Cloud providers price on-demand GPUs for convenience, but that flexibility comes at a premium. For predictable workloads, reserved contracts significantly reduce hourly rates. For non-critical tasks like batch inference or embeddings, spot/preemptible instances can be much cheaper, provided you use checkpointing and retry logic to handle interruptions. Splitting workloads this way (reserved for production, spot for batch) can shave tens of percent off monthly bills.
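Checkpointing and retry logic for spot workloads can be sketched as follows. The `Preempted` exception and the `fail_at` parameter are stand-ins that simulate an instance being reclaimed; in a real job the checkpoint and the results would live on durable storage that outlasts the instance.

```python
import json
import os
import tempfile

class Preempted(Exception):
    """Stands in for a spot/preemptible instance being reclaimed."""

def run_batch(items, process, out, checkpoint_path, fail_at=None):
    """Process items in order, recording the next index after each one."""
    start = 0
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path) as f:
            start = json.load(f)["next_index"]
    for i in range(start, len(items)):
        if i == fail_at:
            raise Preempted(f"interrupted before item {i}")
        out.append(process(items[i]))  # in practice: write to durable storage
        with open(checkpoint_path, "w") as f:
            json.dump({"next_index": i + 1}, f)

def run_with_retries(items, process, checkpoint_path, max_attempts=3, fail_at=None):
    """Retry after interruptions, resuming from the last checkpoint each time."""
    out = []
    for attempt in range(max_attempts):
        try:
            # The simulated interruption happens on the first attempt only.
            run_batch(items, process, out, checkpoint_path,
                      fail_at if attempt == 0 else None)
            return out
        except Preempted:
            continue
    raise RuntimeError("job did not finish within max_attempts")

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "checkpoint.json")
    results = run_with_retries(["a", "b", "c", "d"], str.upper, path, fail_at=2)
print(results)
```

Because the checkpoint is written after every item, an interruption costs at most one item of repeated work. Coarser checkpoint intervals trade less I/O for more recomputation.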

6. Scale on smarter signals

Naive autoscaling on request counts wastes capacity. Instead, scale on queue depth, tokens per second, and GPU utilization, with guardrails for latency. Grouping similar sequence lengths avoids wasted compute on padding. Smarter signals keep GPUs consistently busy while protecting against tail latency spikes, making scaling far more efficient.
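A scaling decision built on these signals might look like the sketch below. The thresholds, the one-step scaling rule, and the `Signals` fields are illustrative assumptions; tokens per second and sequence-length bucketing are left out to keep it short.

```python
from dataclasses import dataclass

@dataclass
class Signals:
    queue_depth: int        # requests waiting to be scheduled
    gpu_utilization: float  # 0.0-1.0, averaged over the observation window
    p95_latency_ms: float   # tail latency guardrail

def desired_replicas(current: int, s: Signals, *, max_queue_per_replica: int = 8,
                     latency_slo_ms: float = 2000, low_util: float = 0.30,
                     min_replicas: int = 1, max_replicas: int = 16) -> int:
    """Scale up on backlog or latency breaches; scale down only when idle."""
    if s.p95_latency_ms > latency_slo_ms or s.queue_depth > max_queue_per_replica * current:
        target = current + 1
    elif s.queue_depth == 0 and s.gpu_utilization < low_util:
        target = current - 1
    else:
        target = current
    return max(min_replicas, min(max_replicas, target))

print(desired_replicas(2, Signals(queue_depth=40, gpu_utilization=0.9, p95_latency_ms=1500)))
print(desired_replicas(2, Signals(queue_depth=0, gpu_utilization=0.1, p95_latency_ms=300)))
print(desired_replicas(2, Signals(queue_depth=3, gpu_utilization=0.7, p95_latency_ms=900)))
```

Moving one replica at a time and requiring an empty queue before scaling down are conservative choices that damp oscillation; a production policy would also add cooldown periods.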

7. Make cost part of your service objectives

Costs should be tracked as carefully as reliability. Monitor cost per 1,000 tokens, cost per request, and cost per successful task alongside latency and accuracy. Set alerts for unusual spikes (e.g., 20% week-over-week increases) and apply guardrails such as context length caps or per-tenant rate limits. What gets measured gets optimized, and what isn’t tracked usually drifts upward.
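Tracking cost as a service objective can start with two small functions: one for the unit metric and one for the spike alert. The weekly spend and token counts below are made-up inputs; the 20% threshold mirrors the example above.

```python
def cost_per_1k_tokens(total_cost: float, total_tokens: int) -> float:
    """Unit cost: spend divided by tokens served, scaled to 1,000 tokens."""
    return total_cost / total_tokens * 1000

def week_over_week_alert(previous: float, current: float, threshold: float = 0.20) -> bool:
    """True when the metric rose by more than `threshold` versus the prior week."""
    if previous <= 0:
        return False
    return (current - previous) / previous > threshold

last_week = cost_per_1k_tokens(total_cost=5_000, total_tokens=2_000_000_000)
this_week = cost_per_1k_tokens(total_cost=6_200, total_tokens=2_000_000_000)
print(f"last week: ${last_week:.4f} per 1k tokens")
print(f"this week: ${this_week:.4f} per 1k tokens")
print("alert:", week_over_week_alert(last_week, this_week))
```

Alerting on the unit cost rather than the raw bill separates genuine efficiency regressions from ordinary traffic growth.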

8. Use external expertise to avoid costly missteps

Even with the best practices above, one of the most effective ways to control spend is to avoid mistakes early. Partnering with experienced IT vendors helps navigate trade-offs in model selection, hosting architecture, and long-term scalability. Teams that have already deployed and optimized private LLM stacks can save you from common traps like overcommitting to cloud or underestimating GPU needs. For many enterprises, this guidance, often delivered through a private LLM setup service, is what keeps costs sustainable and predictable.

Final thoughts

Estimating the cost of a private LLM isn’t about chasing the lowest number, but about aligning technology choices with business realities. Across our blog posts on private LLM development, we’ve shown that the key factors affecting hosting cost are model size, hardware, concurrency, and hosting approach, and that the optimal balance varies for each organization. What matters is clarity: knowing your range, planning for growth, and avoiding surprises as workloads move from pilot to production.

Private deployments are demanding, but they don’t have to be unpredictable. With the right strategy, you can keep control of your data, tailor performance to your needs, and scale in a way that makes financial sense.

And let’s not forget that, beyond financial efficiency, sustainability is becoming an equally important part of large-scale AI operations. Every optimization, from quantization and dynamic batching to smarter autoscaling, reduces not only cost but also energy use and environmental impact. As the industry matures, responsible AI infrastructure design will mean balancing performance, budget, and carbon footprint alike.

If you’d like expert support in evaluating the cost to host an LLM, shaping architecture, or building a roadmap that keeps budgets sustainable, our team can help.

FAQ

How much does an LLM cost?

As of 2025, entry-level deployments (7B–13B models on a single high-memory GPU) usually cost between $600 and $3,000 per month. Mid-tier setups (30B–70B models on 4–8 GPUs) typically run from $15,000 to $40,000 per month. At enterprise scale (70B–100B+ models or high-concurrency workloads), costs often reach $50,000 to $150,000 per month or more. The exact figure depends on concurrency, hosting model (cloud vs. bare metal), and whether you include supporting systems such as retrieval, monitoring, and logging.

Is hosting a large language model worth the cost compared to API access?

It can be, depending on your use case. Private hosting makes sense when you handle sensitive data, face strict compliance requirements, or serve high and steady traffic that makes per-token API fees unpredictable. Hosting in-house gives you control over data and infrastructure and can reduce costs at scale. However, for low or irregular workloads where compliance is less critical, API access often remains the cheaper and faster option. A break-even analysis is the best way to decide.
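A simple version of that break-even analysis treats self-hosting as a fixed monthly cost and API access as a pure per-token cost. The sketch below makes exactly that simplifying assumption; the function names are hypothetical, and any prices you pass in should come from your own quotes.

```python
def api_monthly_cost(tokens_per_month: float, api_price_per_1k: float) -> float:
    """What the same traffic would cost on a per-token API."""
    return tokens_per_month / 1000 * api_price_per_1k


def break_even_tokens_per_month(self_host_monthly_cost: float, api_price_per_1k: float) -> float:
    """Monthly token volume above which self-hosting is cheaper than the API.

    Assumes the self-hosted cost is fixed for the month and already
    includes storage, networking, monitoring, and staffing.
    """
    return self_host_monthly_cost / api_price_per_1k * 1000


def cheaper_option(tokens_per_month: float, self_host_monthly_cost: float, api_price_per_1k: float) -> str:
    """Return 'self-host' or 'api' for a given monthly volume."""
    api_cost = api_monthly_cost(tokens_per_month, api_price_per_1k)
    return "self-host" if self_host_monthly_cost < api_cost else "api"
```

Because self-hosted capacity is purchased in GPU-sized steps, the real curve is a staircase and not a flat line, so it is worth rerunning the comparison at each capacity tier you expect to reach.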

How do I calculate the cost to host a large language model?

Start with your workload: estimate the number of requests per day and tokens per request to calculate tokens per second. Based on your latency target, determine how many tokens per second a single GPU can handle with batching. Divide your total tokens per second by this figure to estimate the number of GPUs required. Multiply by the hourly GPU rate and 720 hours for 24/7 usage. Then add storage, networking, monitoring, and a buffer for overhead. Running this calculation for both cloud and bare metal will highlight your break-even point.
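The steps above can be sketched as a small calculator. The `peak_factor`, `extras_monthly`, and `overhead_buffer` parameters are assumptions added for illustration, and the per-GPU throughput must come from your own benchmarks at your latency target.

```python
import math

SECONDS_PER_DAY = 86_400


def required_tokens_per_second(
    requests_per_day: float,
    tokens_per_request: float,
    peak_factor: float = 1.0,  # assumption: multiplier for peak over average load
) -> float:
    """Average token throughput implied by the daily workload."""
    return requests_per_day * tokens_per_request / SECONDS_PER_DAY * peak_factor


def estimate_monthly_cost(
    requests_per_day: float,
    tokens_per_request: float,
    gpu_tokens_per_second: float,  # measured with batching at your latency target
    gpu_hourly_rate: float,
    *,
    peak_factor: float = 1.0,
    extras_monthly: float = 0.0,   # storage, networking, monitoring
    overhead_buffer: float = 0.0,  # e.g. 0.15 for a 15% buffer
    hours_per_month: int = 720,
) -> dict:
    """GPU count and monthly cost for a 24/7 deployment."""
    tps = required_tokens_per_second(requests_per_day, tokens_per_request, peak_factor)
    gpus = max(1, math.ceil(tps / gpu_tokens_per_second))
    compute = gpus * gpu_hourly_rate * hours_per_month
    total = (compute + extras_monthly) * (1 + overhead_buffer)
    return {"tokens_per_second": tps, "gpus": gpus, "compute": compute, "total": total}
```

Calling `estimate_monthly_cost` twice, once with a cloud hourly rate and once with an amortized bare-metal rate, gives the two figures needed to locate the break-even point.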

What is the cost per 1,000 tokens for a private LLM?

The cost of running a private LLM typically ranges from $0.01 to $0.15 per 1,000 tokens. The exact number depends on GPU type and price, batching efficiency, precision settings (such as INT8 or FP16), and average sequence length. Additional costs for retrieval, storage, and monitoring can increase the effective rate.
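To see how those inputs interact, the per-token rate can be modeled from the GPU price and sustained throughput. This is a simplified sketch covering compute only; the `utilization` parameter is an assumption that captures how much of each paid hour actually serves traffic.

```python
def modeled_cost_per_1k_tokens(
    gpu_hourly_rate: float,
    tokens_per_second: float,  # sustained throughput per GPU, from your benchmarks
    utilization: float = 1.0,  # assumption: share of each hour spent serving traffic
) -> float:
    """Compute-only cost per 1,000 tokens for a single GPU."""
    effective_tokens_per_hour = tokens_per_second * 3600 * utilization
    return gpu_hourly_rate / effective_tokens_per_hour * 1000
```

The formula makes two levers visible: better batching or lower precision raises `tokens_per_second`, and steadier traffic raises `utilization`. Doubling either one halves the modeled rate.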

What hardware do I need to self-host a large language model?

For smaller models (7B–13B), you will generally need one high-memory data-center GPU with around 80GB of VRAM, supported by NVMe SSD storage and at least 128–256GB of system RAM. Larger models (30B–70B) require 4–8 GPUs with fast interconnects and, in multi-node setups, 10, 25, or 100 GbE networking. Production environments should also include redundant storage, observability tools, and secure isolation (such as VPC or on-premises deployments). If fine-tuning is part of your plan, additional GPUs or job scheduling are needed to avoid affecting inference performance.
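A quick sanity check on GPU counts is to estimate the memory needed for model weights: parameter count times bytes per parameter (2 for FP16, 1 for INT8). The sketch below adds a hypothetical 20% allowance for KV cache and runtime overhead. It gives only the minimum needed to fit the model, not the count needed to hit throughput or latency targets, which is usually higher.

```python
import math

BYTES_PER_PARAM = {"fp16": 2, "int8": 1}


def weights_gb(params_billion: float, precision: str = "fp16") -> float:
    """Approximate weight memory in GB (1 GB taken as 1e9 bytes)."""
    return params_billion * BYTES_PER_PARAM[precision]


def min_gpus_to_fit(
    params_billion: float,
    precision: str = "fp16",
    gpu_vram_gb: float = 80.0,
    overhead: float = 0.20,  # assumption: KV cache and runtime allowance
) -> int:
    """Lower bound on GPUs needed just to hold the model in memory."""
    needed = weights_gb(params_billion, precision) * (1 + overhead)
    return max(1, math.ceil(needed / gpu_vram_gb))
```

Under these assumptions a 13B model in FP16 fits on one 80GB GPU, while a 70B model in FP16 needs at least three before any headroom for concurrency is considered.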

What is the total cost of ownership for a private LLM in 2025?

The total cost of ownership (TCO) combines compute, storage, and networking with licensing (if applicable), infrastructure tooling, and staffing. While monthly infrastructure costs typically fall within the ranges already outlined, long-term expenses also include staffing for MLOps, DevOps, security, and compliance, which can eventually rival GPU costs. You can reduce TCO through techniques like batching, quantization, right-sizing models, hybrid hosting, and committing to reserved or spot capacity.