The Best Way to Move Data for RAG: A Strategic Migration Framework for Business and AI Leaders

Published: – Updated:

Data is the lifeblood of enterprises. Documents, procedures, tickets, reports, and internal guidelines shape daily work. Yet when decisions need to be made, teams often operate with incomplete or hard-to-access information, as critical content remains fragmented across different drives, tools, and legacy systems.

For years, companies have tried to reduce knowledge fragmentation and silos through centralized knowledge bases and governance practices. But teams change, information drifts, and old habits persist – people rely on tribal knowledge or outdated documents, even when better data technically exists.

The rise of AI has made this gap impossible to ignore. AI systems require high-quality, accessible data to perform effectively, but as IBM research states, only 26% of organizations are confident that their data can support AI-enabled revenue streams. This reveals a core constraint: the problem isn’t a lack of information but a lack of access.

This is why many organizations are considering retrieval-augmented generation (RAG)— not to introduce yet another AI layer, but to unlock the value of their existing, fragmented knowledge.

RAG helps capture institutional knowledge before it walks out the door, keep it current as systems evolve, and ground AI systems in a verified enterprise context. However, RAG’s success hinges on one critical factor: how well teams prepare data for RAG matters more than the model itself.

So, in this article, we walk through a practical roadmap for getting your data RAG-ready — from migrating structured and unstructured sources to avoiding common setup traps. At the end, we also provide a hands-on guide to help you evaluate your RAG system’s effectiveness.

Why are enterprises adopting RAG?

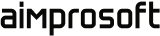

RAG is not just another AI chatbot. General-purpose LLMs have their limitations, including hallucinations, limited industry knowledge, and eventual degradation as their training data becomes outdated. In environments where accuracy and compliance matter, such uncontrolled AI outputs bring more risk than value. That is why more and more companies are turning to retrieval-augmented generation (RAG).

Instead of relying on off-the-shelf models with generic data, RAG allows organizations to ground LLM outputs in proprietary enterprise knowledge — internal documents, policies, records, and historical context that reflect how the business operates.

Industry data supports this shift. According to Databricks, 70% of companies using Gen AI augment base models with retrieval systems, vector databases, and supporting tooling, rather than deploying them as-is.

For a deeper dive into how RAG overcomes generative AI’s limitations in enterprise settings, read our full analysis.

As a result, RAG is increasingly adopted across regulated and knowledge-intensive industries such as financial services, healthcare, and legal. It transforms internal knowledge into an actionable decision-making tool — reducing manual searches, ensuring compliance, and standardizing responses across teams.

However, this same strength introduces new complexity. As organizations move from experimentation to production, the focus shifts from model performance to operational readiness

This is where many initiatives slow down. The benefits of RAG are clear, but so are the risks. Without careful data preparation, governance, and cross-team alignment, RAG systems can amplify existing data issues rather than resolve them.

The difference between success and stagnation? Addressing these challenges before they derail your initiative.

A strategic framework for migrating enterprise data to RAG

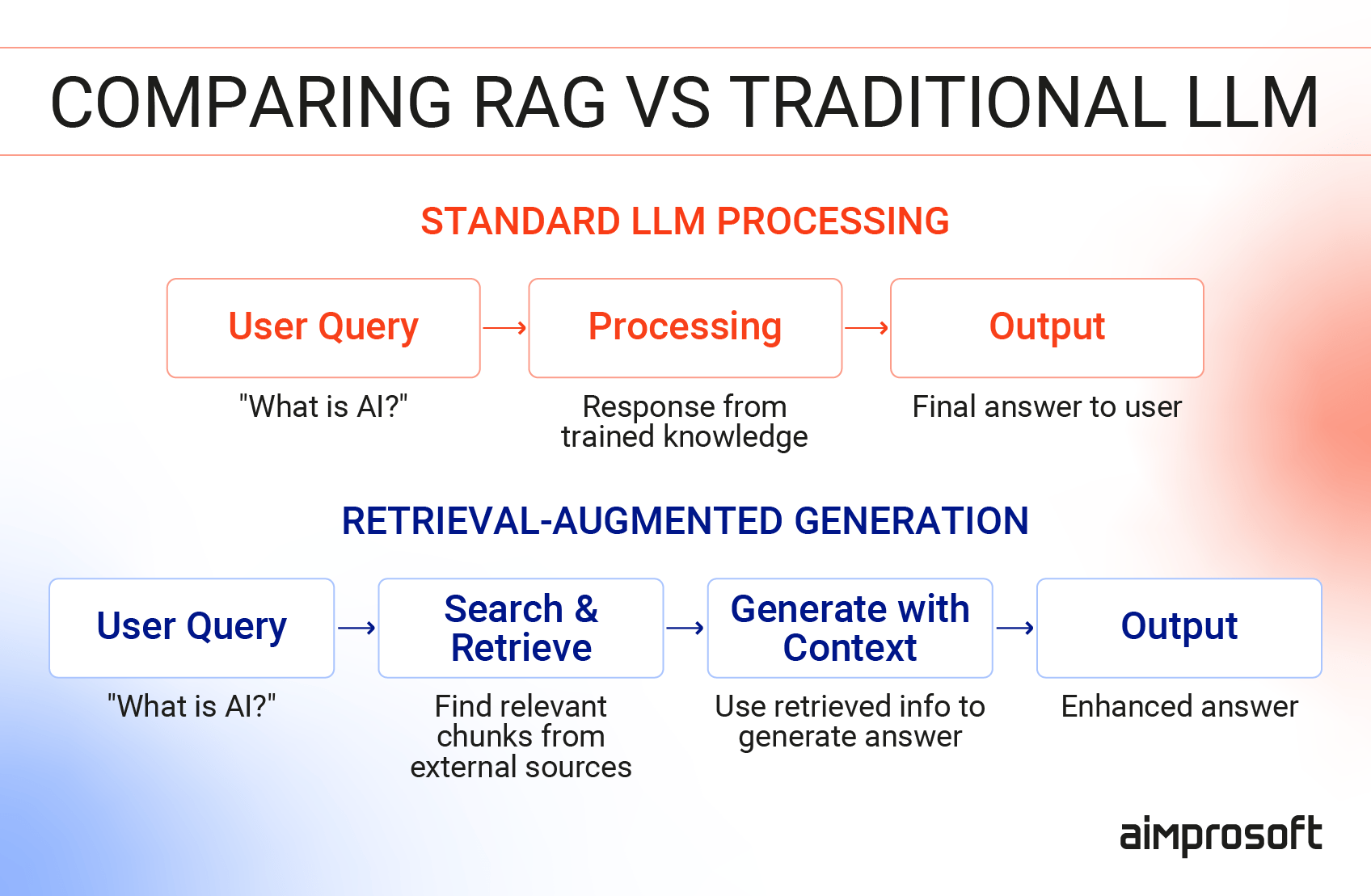

At a high level, RAG for enterprise data retrieval works through four key layers:

- Knowledge sources (documents, databases, tickets, communications)

- Indexing (preparing, embedding, and storing content for retrieval)

- Retrieval (identifying the most relevant context for a query)

- Generation (crafting a response grounded in that context, with quality checks)

On top of these layers, access controls, governance policies, and operational workflows ensure the system remains secure, compliant, and adaptable. Without these, even the best RAG system can become a liability.

Most teams focus on the visible parts of RAG, like embeddings, vector databases, and model outputs. But in reality, its success depends on how enterprise data for RAG is prepared, governed, and moved across these layers. This is why RAG data migration isn’t a one-time ingestion task — it’s an ongoing governance and architectural commitment.

To avoid the pitfalls that derail most RAG projects, we’ve developed a framework that covers not just the technical layers, but the operational and governance layers that determine long-term success.

Step 1: Audit and classify data sources

Before designing retrieval logic, organizations must decide what data will power RAG and how to categorize it. A common pitfall is assuming that all enterprise data — from structured databases to messy PDFs — can be indexed and retrieved in the same way. In reality, RAG for structured data and RAG unstructured data require distinct strategies. Classification isn’t just a best practice; it’s the dividing line between a functional RAG system and a failed experiment.

1. Structured data (Systems of Record)

Where it lives: Databases, ERP platforms, CRMs (rows-and-columns data)

Challenge: LLMs struggle with raw rows and columns because they lack narrative context. For example, a “Status_ID: 04” means nothing to an AI without a text description. Furthermore, if you index raw tables, you might bypass the security layers built into the original app or expose data that users shouldn’t query directly.

Solution: RAG with structured data should expose read-only, descriptive fields (e.g., status explanations, metadata) and preserve access controls. If users can’t see it in the CRM, RAG shouldn’t either.

2. Unstructured and Operational data

Where it lives: PDFs, wikis, Jira tickets, emails, Slack logs.

Challenge: Because anyone can edit a wiki or open a ticket, formats vary and taxonomies “drift” over time. Which brings us to “knowledge rot” — outdated instructions or three different versions of the same process. Worse, sensitive details (e.g., passwords in chat logs, private ticket comments) often lurk unnoticed in the text.

Solution:

- Flag outdated content and define expiration rules

- Assign ownership to keep information accurate

- Use automated scans to remove duplicates and PII before embedding

Key takeaway: The best way to prepare data for RAG is not to ingest everything, but to curate what actually belongs in retrieval. This is what reduces hallucinations, embeds governance, and makes RAG viable in production.

Step 2: Define retrieval objectives before designing the system

RAG systems are not general-purpose search engines — they’re built for specific workflows. The use case dictates the architecture: what RAG data sources get indexed, how retrieval is structured, and whether the system optimizes for speed or precision.

In our work with RAG development for enterprises, we see many organizations skip this step and end up with technically sound systems that fail under real usage. Before building, you must select which of these three retrieval modes your workflow requires, as each demands a different technical setup.

1. Search and reference lookup

Goal: Find the right document, section, or record (e.g., “Where is the file?”). This mode focuses on orientation and traceability, not answer generation.

Pitfalls:

- Over-reliance on semantic similarity can surface conceptually related but incorrect content

- Over-chunking loses source context (e.g., a paragraph without its document or policy reference)

- Missing metadata (recency, authority) allows outdated drafts to rank above approved versions

What to prioritize:

- Strong metadata preservation (source, version, owner)

- Ranking that favors authoritative and current content

- Clear links back to the original document

2. Question answering with grounded responses

Goal: Provide direct, fact-based answers (e.g., “What’s the compliance rule for X?”, “What is the required approval step?”).

Pitfalls:

- Retrieving only part of a policy can result in answers that sound correct but miss critical conditions or exceptions

- If documentation is incomplete, the model may answer based on general knowledge rather than your organization’s actual rules

What to prioritize:

- Precise context selection over broad recall

- Clear citation of source material

- The ability to decline or defer when information is missing or ambiguous

3. Summarization and synthesis

Goal: Identify patterns or themes across multiple sources (e.g., “What were the top support issues last month?”, “What risks appeared most often in audits?”).

Pitfalls:

- “Version contamination” AI blends old and new data into a single summary, leading to incorrect conclusions

- Loss of traceability leaves users without a clear way to check sources or validate results

What to prioritize:

- Strict source filtering and time boundaries (time, relevance, version)

- Make aggregation logic transparent

- Clearly label outputs as syntheses, not single-source truths

Key takeaway: RAG in production succeeds when the retrieval mode matches the workflow. Misalignment leads to frustration, inaccurate outputs, and wasted effort — even when the underlying system is technically sound.

Step 3: Choose RAG stack components

Technology choices in RAG are never neutral; they carry hidden assumptions about your data, scale, and security. Most teams fail here by evaluating components in isolation, such as picking the “fastest” database or the “smartest” model, without realizing how these choices constrain each other.

The goal is to build a cohesive RAG data pipeline where every part supports the final workflow, not the other way around.

1. Embeddings: Quality vs. Control

Embeddings turn text into numerical “coordinates” based on concepts rather than keywords. This lets a search for “yearly earnings” find “annual revenue” because they’re conceptually linked. The choice of embedding model usually comes down to two competing priorities:

Cloud-based:

- Pros: Superior “conceptual maps,” always up-to-date

- Cons: Data leaves your environment (can be a dealbreaker for regulated industries)

Local/On-Premises:

- Pros: Full data residency, no third-party exposure

- Cons: May require extra tuning for precision; needs your own computing resources

Bottom line: decide early whether data sensitivity or retrieval quality is your binding constraint. Teams often default to cloud models for convenience, only to discover mid-deployment that governance policies block this approach.

2. Vector Databases: Scale and integration

Vector databases store embeddings and enable similarity search. Your choice depends on query volume, concurrency (parallel requests), and whether you need advanced filtering.

- Lightweight/Local (e.g., FAISS): Excellent for proofs-of-concept or small, single-user tools. They are fast to set up but difficult to scale or manage across a whole company.

- Enterprise/Distributed (e.g., pgvector, Dedicated Stores): These handle thousands of users and support complex “filtering” — such as searching only for documents from the HR department written in 2024.

Bottom line: Don’t just pick the “fastest” database. Pick the one that integrates with your existing security and audit logs. If you need production-grade access controls, you need a distributed system from day one.

3. Chunking: Matching strategy to content

Chunking determines what the retrieval layer can surface and how much context the model sees. Poor data chunking for RAG leads to fragmented answers, missed dependencies, or instructions taken out of sequence.

Different content types require different chunking strategies for RAG:

- Semantic chunking (reports, articles, meeting notes): Groups content by meaning, which works well for narrative or analytical text.

- Structural chunking (manuals, SOPs, procedural guides): Preserves steps, headers, and ordering so instructions aren’t scrambled.

- Hybrid approach (contracts by clause, manuals by procedure): Applies different logic based on document type to avoid breaking legal or technical context.

If you work with scanned PDFs, the quality of text extraction (OCR) sets the ceiling. When extraction is noisy, no amount of tuning will fix retrieval — chunking data for RAG is only as effective as the underlying text.

Bottom line: Avoid blind automation when chunking. Customize the logic, so the system retrieves a complete thought, not a fragmented sentence.

4. Retrieval enhancement: Precision and safety

The first “search” is rarely perfect. Your system might surface 20 potentially relevant documents, but only 3 actually answer the question. This is where reranking comes in — re-scoring candidates using signals beyond semantic similarity: metadata, recency, user roles, or domain-specific rules.

What to put in place:

- Reranking: Re-score retrieved results using metadata, recency, user role, or domain rules — not semantic similarity alone.

- Confidence thresholds: Allow the system to respond with “I don’t know” when retrieved context is weak or inconclusive.

- Domain filters: Block entire classes of questions before generation (e.g., medical advice, investment guidance).

Bottom line: The technical implementation varies, but the principle doesn’t: if your RAG system can’t say “I don’t know” or “That’s outside my scope,” it’s not ready for production.

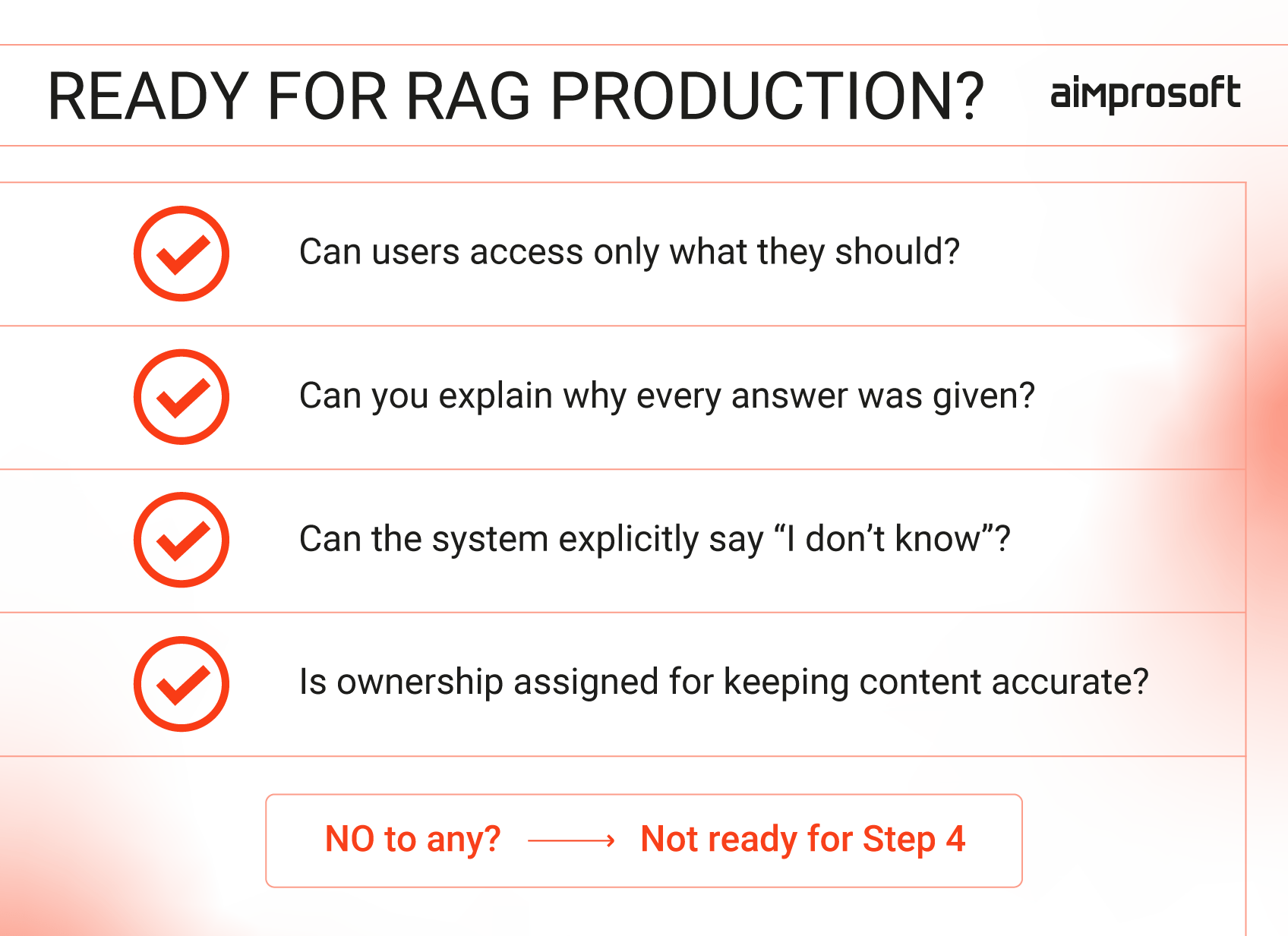

Step 4: Governance and operational readiness

RAG systems rarely fail at the demo stage. They fail in production when permissions are inconsistent, audits begin, and users expect correct answers every time. At this point, governance and operational ownership determine whether RAG remains useful or becomes a risk.

- Retrieval-level security: RAG data security must be enforced during search. If a user can’t see a document in the source system, the RAG system must not “see” it either. Every answer must be traceable to a specific document and version for auditability.

- Knowledge lifecycle ownership: Systems decay as content dates. You need clear ownership to remove obsolete files from your retrieval-augmented generation data sources and monitor “I don’t know” signals, which often point to gaps in documentation rather than model failure.

- Explicit compliance boundaries: Domain filters are mandatory governance controls, not just “nice-to-have” features. In regulated fields, the system must be hard-coded to refuse specific classes of questions (e.g., medical or legal advice).

Bottom line: Security and data residency must be verified end-to-end in your RAG architecture for enterprise deployment. If you cannot explain why the system produced an answer, it will not pass a professional governance review.

Wrapping up

Preparing data for RAG is not about moving documents into a vector database. It is about deciding what knowledge should be retrievable, how it should be interpreted, and under which constraints it can be used.

These aren’t one-time decisions. They require continuous validation as your system evolves, your data grows, and usage patterns shift. Without a structured way to evaluate these dimensions, teams discover problems only after they’ve caused damage — when a user accesses restricted data, an outdated policy drives a bad decision, or Legal asks questions you can’t answer.

This is why assessment matters. Our RAG evaluation guide provides a systematic framework to verify that your decisions around retrievability, interpretation, and constraints are actually working in production. It covers the four dimensions that determine whether RAG remains trusted infrastructure or becomes a liability.