Top LLM Use Cases: How Enterprises Are Applying Large Language Models Across Industries


It’s getting hard to find an enterprise that hasn’t started using AI and ML services to do more with the same resources. According to Statista, 88% of companies used AI in at least one business function in 2025, rising from 78% the year before. At this stage, AI has clearly moved from the “experimental” phase into the heart of everyday business.

But such rapid adoption has exposed critical security gaps.

A 2024 Cobalt report shows that LLM-specific issues now account for nearly 38% of all AI security findings. The most common problems weren’t about model accuracy but about how LLMs integrate into real systems. Top risks included insecure output handling (14.5%), prompt injection (11.5%), and sensitive data exposure (4.2%).

For enterprises working in regulated industries like finance and healthcare, these aren’t just technical glitches. They’re dealbreakers. While public LLM APIs offer convenience, they require companies to send sensitive data outside their security perimeter, increasing the risk of breaches, compliance violations, and loss of control.

As a result, many regulated organizations are moving toward private LLM deployments. Running LLMs within their own infrastructure allows them to benefit from AI while keeping data, access, and governance under enterprise control.

So which private LLM use cases deliver the most value across industries?

We’ll tell you all about them below.

How private LLMs work inside enterprise systems

Private LLMs are language models isolated from public internet services. They run on-premises, in private clouds, or in hybrid environments and work with internal data. This architectural choice addresses the core risks of public models, such as data exposure, unpredictable external dependencies, and lack of auditability.

Running inside controlled boundaries enables businesses to:

- Maintain data locality – information never leaves approved systems

- Achieve predictable behavior – no external API calls or model updates mid-workflow

- Establish audit trails – every input, output, and data source can be logged and reviewed

These capabilities explain why more businesses are choosing private deployments over public APIs, especially in regulated industries, where public LLM services would introduce unacceptable risk.


The most common use cases for large language models

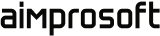

Companies get the most consistent value from private LLMs when they let them do what they’re built for: analyzing and interpreting information at scale. While the workflows can vary by industry, these roles recur wherever data sensitivity and operational control matter.

But first, a reality check: LLMs don’t replace human expertise. They enhance it, helping people make better decisions in data-sensitive workflows under strict access controls and full traceability.

With that in mind, let’s look at how these roles translate into LLM use cases across industries.

LLM use cases in healthcare

Healthcare teams work with large volumes of clinical notes, policies, and compliance documentation daily. For every hour of face-to-face clinical work, physicians spend about 2 hours interacting with electronic systems, often outside regular working hours (JAMA Network Open). At the same time, they operate under strict privacy rules where mistakes carry serious consequences: the average cost of a data breach in healthcare organizations is $7.42 million.

These challenges go beyond inefficiency. They contribute to clinician burnout, increase compliance risk, and slow down decision-making in care. And these are exactly the areas where large language models in healthcare can make a difference.

Private LLMs run inside HIPAA-compliant environments, on-premises or in private clouds, ensuring that protected health information never leaves controlled infrastructure. But data residency is only one of several reasons private LLMs suit healthcare workflows.

Clinical documentation structuring

Business impact: lower compliance risk, faster audits, and reduced operational cost.

LLM-assisted drafting can reduce clinical documentation time by 60–70%, reclaiming 1-2 hours per physician per day and directly addressing burnout from after-hours charting. This shifts documentation from a manual burden to background automation without changing clinical workflows.

Private LLMs take clinician-created notes — consultations, dictations, discharge summaries — and organize them into standardized formats for billing and audits. The system automatically extracts key fields while operating entirely within HIPAA-compliant environments. As a result, organizations gain structured, audit-ready data that meet regulatory requirements without ever leaving secure infrastructure, eliminating the compliance gaps inherent in external API-based solutions.
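The field-extraction step above can be pictured as a schema check on the model’s output before anything reaches billing or audit systems. This is a minimal sketch, assuming the private LLM has been prompted to return JSON; the field names (`patient_id`, `encounter_date`, `note_type`, `diagnosis_codes`) and the `parse_structured_note` helper are hypothetical, not any specific product’s schema. Output that fails validation is returned with errors so it can be routed to human review instead of being written automatically.

```python
from __future__ import annotations

import json
from dataclasses import dataclass
from datetime import date

# Hypothetical target schema for a structured clinical note.
REQUIRED_FIELDS = ("patient_id", "encounter_date", "note_type", "diagnosis_codes")
ALLOWED_NOTE_TYPES = {"consultation", "dictation", "discharge_summary"}


@dataclass
class StructuredNote:
    patient_id: str
    encounter_date: date
    note_type: str
    diagnosis_codes: list[str]


def parse_structured_note(model_output: str) -> tuple[StructuredNote | None, list[str]]:
    """Validate the LLM's JSON output; return (note, errors). note is None if invalid."""
    try:
        data = json.loads(model_output)
    except json.JSONDecodeError as exc:
        return None, [f"invalid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return None, ["expected a JSON object"]

    missing = [f for f in REQUIRED_FIELDS if f not in data]
    if missing:
        return None, [f"missing fields: {', '.join(missing)}"]

    errors: list[str] = []
    if data["note_type"] not in ALLOWED_NOTE_TYPES:
        errors.append(f"unknown note_type: {data['note_type']!r}")
    try:
        encounter = date.fromisoformat(data["encounter_date"])
    except (TypeError, ValueError):
        errors.append("encounter_date must be ISO 8601 (YYYY-MM-DD)")
        encounter = None
    codes = data["diagnosis_codes"]
    if not isinstance(codes, list) or not all(isinstance(c, str) for c in codes):
        errors.append("diagnosis_codes must be a list of strings")

    if errors:
        return None, errors  # flag for human review rather than auto-filing
    return StructuredNote(str(data["patient_id"]), encounter, data["note_type"], codes), []
```

Keeping validation outside the model means a malformed or incomplete extraction is caught deterministically, which is what an auditor will want to see.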

Internal patient query handling

Business impact: faster decision-making, reduced training time for new staff, and fewer escalations.

Healthcare staff save 43 minutes daily on information retrieval tasks when using AI-powered tools as natural-language interfaces to internal systems (UK NHS). Instead of manual searches or waiting for senior staff to answer protocol questions, nurses and care coordinators get approved procedures through simple queries.

To return approved procedures reliably, LLMs use retrieval-augmented generation (RAG) to surface relevant protocols from internal repositories while enforcing existing access permissions. When a nurse asks, “What’s the consent procedure for outpatient MRI with contrast?”, the system confirms the nurse is authorized to view the relevant policy, retrieves the exact section, and shows which document it came from. Healthcare LLMs don’t replace clinical judgment; they make institutional knowledge accessible to everyone who needs it, when they need it.
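The permission-aware retrieval described above can be sketched in a few lines. This is a minimal sketch, assuming each indexed policy section carries its source document, a section identifier, and the set of roles allowed to read it; simple keyword overlap stands in for the embedding search a real RAG pipeline would use, and `PolicySection`, `retrieve_for_user`, and `format_context` are hypothetical names. The key design choice is that the permission filter runs before ranking, so restricted text never becomes a candidate for the model’s prompt.

```python
from __future__ import annotations

import re
from dataclasses import dataclass


@dataclass(frozen=True)
class PolicySection:
    source: str                 # document the section came from, shown as a citation
    section: str                # section identifier within that document
    text: str
    allowed_roles: frozenset[str]


def _tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve_for_user(query: str, user_roles: set[str],
                      index: list[PolicySection], k: int = 3) -> list[PolicySection]:
    """Return up to k sections the user may read, ranked by keyword overlap.

    Access control is applied before ranking, so restricted sections are
    never candidates for the context passed to the LLM.
    """
    visible = [s for s in index if s.allowed_roles & user_roles]
    q = _tokens(query)
    scored = [(len(q & _tokens(s.text)), s) for s in visible]
    scored = [(score, s) for score, s in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [s for _, s in scored[:k]]


def format_context(sections: list[PolicySection]) -> str:
    """Build the grounded context block with one citation per retrieved section."""
    return "\n\n".join(f"[{s.source}, {s.section}]\n{s.text}" for s in sections)
```

The citation header on each chunk is what lets the final answer point back to the exact policy section the nurse can open and verify.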

Compliance and audit navigation

Business impact: lower compliance risk, faster audits, and reduced operational effort.

Regulatory compliance is critical in healthcare, where violations carry significant legal, financial, and reputational risk. Yet compliance work remains heavily documentation-driven, slowed by fragmented systems and manual processes. AI-powered compliance systems reduce regulatory violations by up to 87% and cut compliance costs by 42%, according to Research and Metrics — making compliance one of the strongest LLM use cases in healthcare.

Private LLMs serve as intelligent compliance navigation layers. The system retrieves information from internal repositories when staff need to verify HIPAA requirements, locate policy sections, or prepare audit documentation. The biggest gain isn’t efficiency alone but traceability. Every retrieval is logged, every source is cited, and every access follows existing permission rules. This creates the audit trail that regulators expect, without the manual overhead that has traditionally required dedicated compliance staff.
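The logging described above can be made concrete as an append-only record written for every lookup. This is a minimal sketch, assuming JSON Lines as the log format and an in-memory list in place of real storage; `AuditLog`, `audited_lookup`, and the record fields are hypothetical. Each record is hash-chained to the previous one, an illustrative technique for making after-the-fact edits detectable, and a production deployment would persist it to tamper-evident storage.

```python
from __future__ import annotations

import hashlib
import json
from datetime import datetime, timezone
from typing import Callable

GENESIS = "0" * 64


class AuditLog:
    """Append-only, hash-chained audit log (kept in memory for illustration)."""

    def __init__(self) -> None:
        self.lines: list[str] = []
        self._last_hash = GENESIS

    def append(self, record: dict) -> None:
        # Link each record to the hash of the previous line.
        record = {**record, "prev_hash": self._last_hash}
        line = json.dumps(record, sort_keys=True)
        self._last_hash = hashlib.sha256(line.encode()).hexdigest()
        self.lines.append(line)

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered line breaks it."""
        prev = GENESIS
        for line in self.lines:
            if json.loads(line)["prev_hash"] != prev:
                return False
            prev = hashlib.sha256(line.encode()).hexdigest()
        return prev == self._last_hash


def audited_lookup(user_id: str, query: str,
                   retrieve: Callable[[str], list[str]], log: AuditLog) -> list[str]:
    """Run a retrieval and record who asked, what was asked, and which sources were cited."""
    sources = retrieve(query)
    log.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "query": query,
        "cited_sources": sources,
    })
    return sources
```

Wrapping the retriever rather than the UI means no lookup path can skip the log.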

LLM use cases in finance and banking

In finance, the hardest part of decision-making is rarely the lack of data. It’s the need to explain why something was done — often months or years later — across layers of regulation, internal policy, and historical precedent.

Reconstructing context for AML reviews, transaction reconciliations, or GDPR audits is a major time drain. Most teams lose hours simply trying to pull together data from disconnected internal systems.

LLMs in finance and banking support internal analysis, policy interpretation, and secure access to knowledge. Their biggest value lies in explaining and contextualizing financial information, not in executing transactions or making autonomous risk decisions.

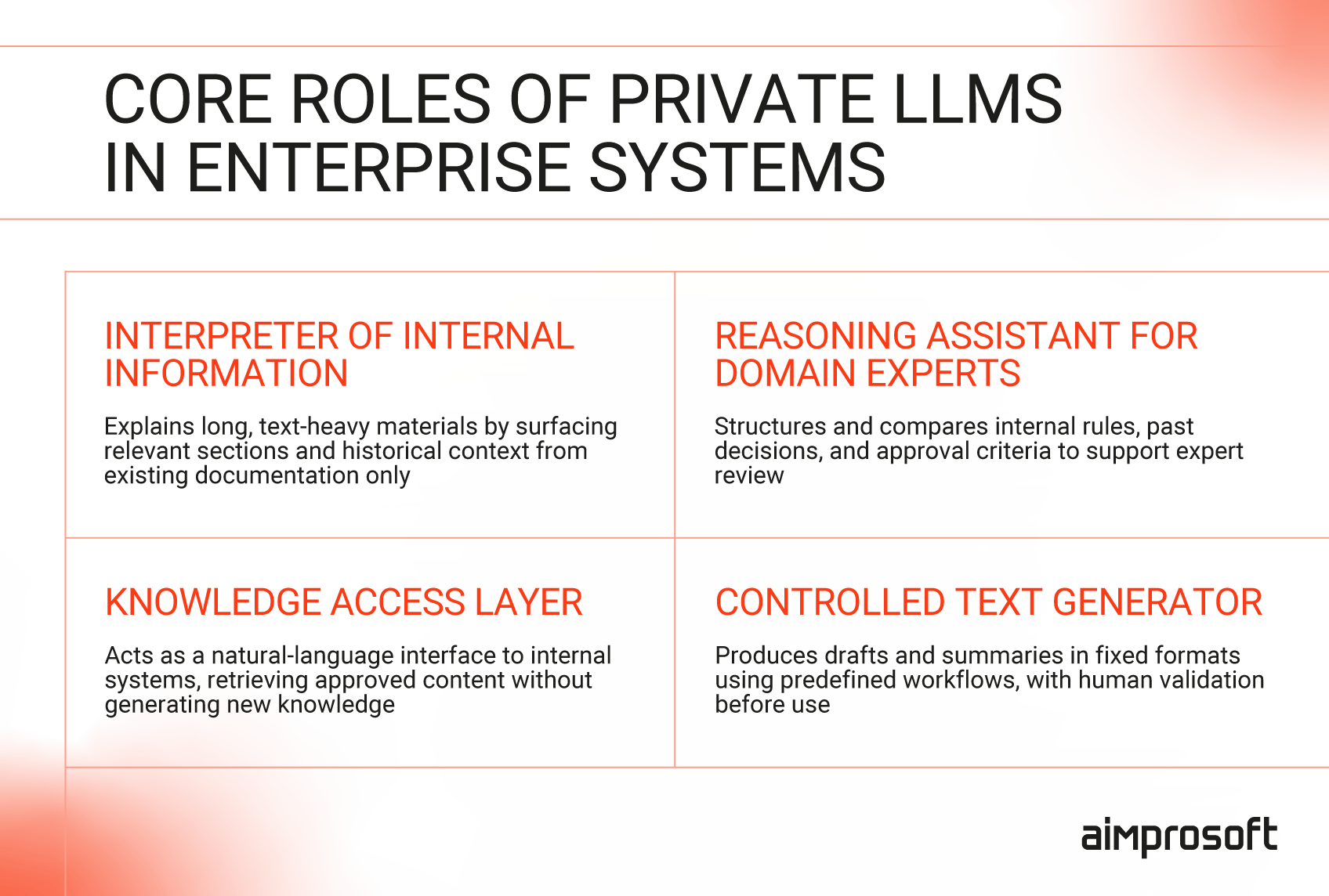

Regulatory and policy interpretation

Business impact: lower compliance risk, reduced penalty exposure, and defensible audit trails.

In 2025, banks across the UK and Europe faced over $200 million in compliance penalties. Beyond financial losses, violations damage institutional reputations and regulatory trust. Compliance work, in other words, is not just about efficiency but about avoiding failures with severe consequences.

When new regulations emerge, such as updates to capital requirements or revised AML screening protocols, teams traditionally spend hours cross-referencing them against internal policies. Even minor inconsistencies can trigger regulatory scrutiny. By contrast, financial institutions that apply LLMs within AI-driven compliance platforms for Basel III report 40% faster processing and 30% higher accuracy.

Private LLMs retrieve regulatory guidance, internal policies, and historical decisions from internal repositories with full source citations. Using RAG, teams can see exactly which policy sections apply, making decisions easier to defend during audits. Every retrieval is logged, and every source is traceable — creating the audit trail that reduces violation risk.

Internal financial data explanation

Business impact: improved decision quality, earlier problem detection, and reduced analytical errors.

Financial teams spend 70% of their time on data collection and only 30% on value-added analysis, according to Deloitte. What makes this job even harder is that analysts manually search through historical reports, past quarters with similar patterns, operational changes, and cross-departmental data. By the time they piece together the full story, the window for corrective action has often closed.

This is where large language models in finance show tangible operational value. Advanced LLM-RAG pipelines have shown over 70% analyst time savings on complex data assembly tasks, turning multi-week investigations into multi-day analyses.

Earlier detection creates time for corrective action. If analysts identify a concerning trend in week 2 instead of week 4, leadership can adjust lending policies, rebalance portfolios, or investigate operational issues before losses compound. The LLM doesn’t recalculate metrics; it accelerates context assembly, which determines how quickly problems are escalated to decision-makers.

Risk analysis support

Business impact: faster credit decisions, more consistent policy application, and reduced underwriting cycle times.

Underwriters repeatedly ask: “Should we approve this loan?” Answering requires comparing applications to credit policies, historical cases, and precedents — a process that often takes longer than the analysis itself.

This is one of the most practical LLM use cases in banking today. Large language models in banking retrieve relevant context automatically: similar deals from the past 2-3 years and their outcomes, applicable policy exceptions, and relevant market conditions. According to KPMG’s 2024 Future of Risk report, 98% of executives say digital acceleration has improved their organization’s approach to risk management, particularly in identification, monitoring, and mitigation.

Analysts still evaluate risk and approve deals; the LLM simply eliminates the manual document hunting that used to slow every case. For teams processing 200+ deals annually, reducing preparation time by 50-70% means faster borrower responses and more competitive time-to-commitment.

LLM use cases in manufacturing

Manufacturing environments generate vast amounts of technical documentation: equipment manuals, machine logs, maintenance records, and evolving SOPs. When issues arise on the production floor — an unexpected machine alert, a quality deviation, a supply chain disruption — operators and engineers need to understand the situation quickly. Instead of discovering new insights, they need to make sense of what already exists.

LLMs in manufacturing address this by making technical knowledge instantly accessible. Running within the manufacturer’s own IT infrastructure, they retrieve and explain information from internal documentation without sending proprietary production data or equipment specifications to external services, which is critical for maintaining competitive advantage and operational security.

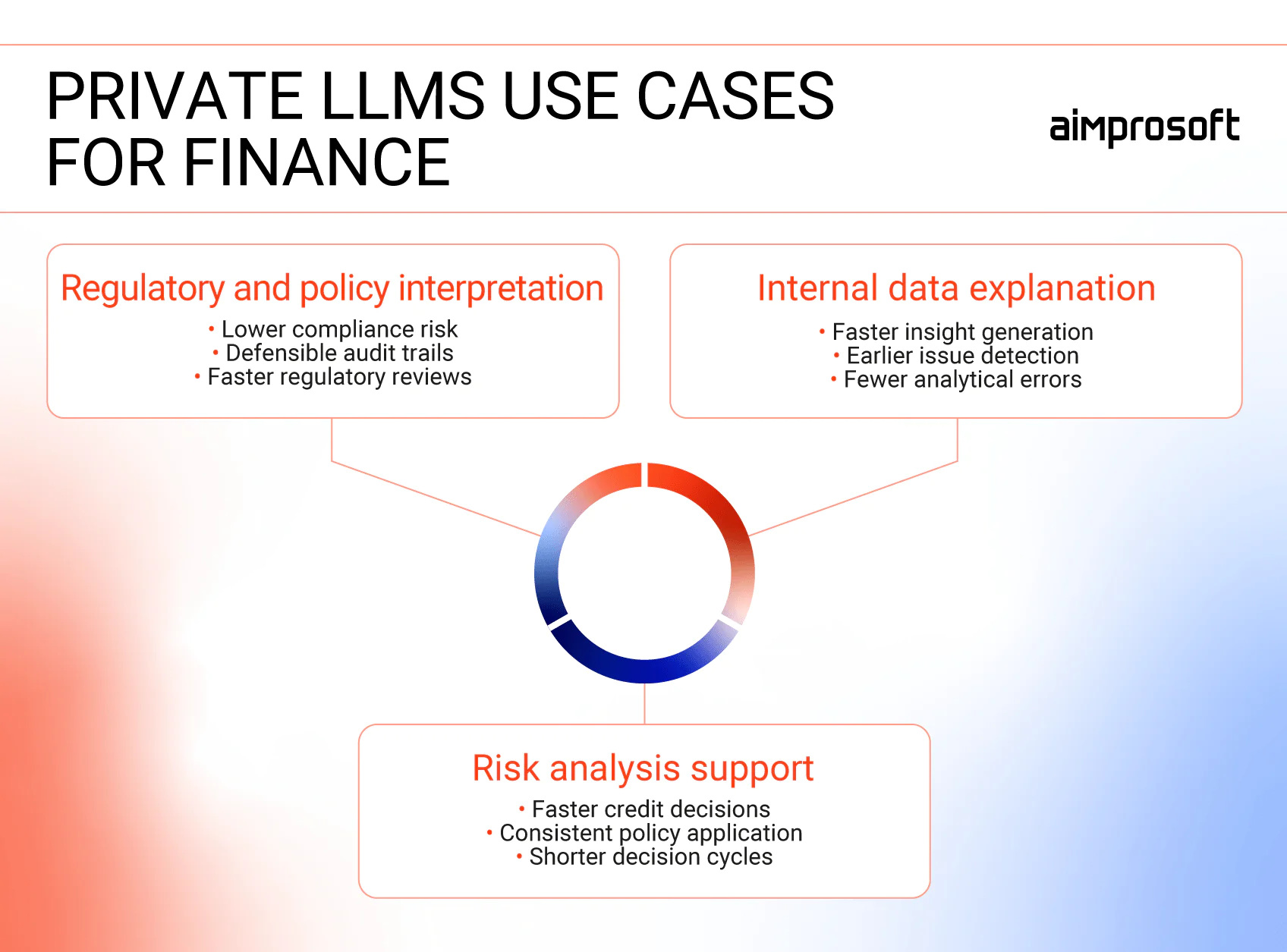

Equipment and incident explanation

Business impact: reduced downtime, faster issue resolution, and less dependence on senior engineer knowledge.

Unplanned downtime costs automotive manufacturers $2.3 million per hour. Equipment alerts are straightforward for experienced engineers but opaque to newer staff. As teams face turnover and expertise becomes harder to retain, understanding past incidents has become a critical bottleneck that extends costly downtime.

Manufacturing LLMs surface relevant explanations directly from internal manuals, historical incident reports, and maintenance records. When a machine throws an error code, the system retrieves similar past incidents, troubleshooting steps that resolved them, and relevant manual sections. Operators access institutional knowledge instantly rather than waiting for senior staff’s availability, reducing the time from alert to resolution.
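The error-code lookup described above can be sketched as a match on the code followed by a ranking of past incidents. This is a minimal sketch, assuming incident records are already structured with machine model, error code, date, resolution steps, and manual references; `Incident` and `similar_incidents` are hypothetical names, and both the case-insensitive code match and ranking same-model incidents first are illustrative choices rather than a standard. The retrieved records, not the model’s own recall, are what the LLM would explain to the operator.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Incident:
    incident_id: str
    machine_model: str
    error_code: str
    occurred: date
    resolution_steps: tuple[str, ...]
    manual_refs: tuple[str, ...] = ()


def similar_incidents(error_code: str, machine_model: str,
                      history: list[Incident], limit: int = 5) -> list[Incident]:
    """Past incidents with the same error code: same machine model first,
    then most recent first."""
    code = error_code.strip().upper()  # normalize operator input
    matches = [i for i in history if i.error_code.upper() == code]
    # False sorts before True, so same-model incidents come first;
    # the negated ordinal puts newer dates ahead of older ones.
    matches.sort(key=lambda i: (i.machine_model != machine_model,
                                -i.occurred.toordinal()))
    return matches[:limit]
```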

Supply chain issue analysis

Business impact: reduced emergency procurement costs, avoided production losses, and faster recovery from disruptions.

Supply chain disruptions force manufacturers into costly decisions: pay 2-3x premiums for last-minute orders and expedited shipping, or miss customer commitments and trigger penalty clauses. During the pandemic, supply-chain delays accounted for roughly 40% of manufacturers’ expected unit cost increases — demonstrating how logistics disruptions directly erode margins when response times are slow.

When component delays occur, teams need rapid answers about alternative suppliers and affected production lines. LLMs for manufacturing accelerate access to supplier history, disruption precedents, and production context, enabling faster decisions before delays cascade into premium procurement costs and lost profitability.

Quality documentation and SOP interpretation

Business impact: reduced scrap costs, prevented audit failures, and avoided production shutdowns.

Poor quality costs most manufacturers 15-20% of total revenue (industry benchmark) — yet the root cause is often preventable: inconsistent adherence to SOPs. When workers can’t quickly find the right procedure, they improvise, creating defects that trigger non-conformances. These accumulate into failed quality audits, which can force production shutdowns until compliance is restored.

LLM applications in manufacturing make approved procedures instantly accessible. When investigating a non-conformance, the system surfaces applicable SOP sections, regulatory requirements, and similar past resolutions. Teams significantly reduce audit preparation time — often from weeks to days — while more consistent access to approved procedures helps reduce repeat non-conformances and strengthen overall quality control.

What private LLMs don’t do

Private LLMs exist to turn internal data into something teams can actually use, not to run business processes on their own or replace core systems.

That boundary is intentional. Not every function belongs to an LLM. Here’s where private LLMs stop:

- They don’t make decisions. Clinical diagnoses, credit approvals, and engineering specs — these require human judgment and accountability. LLMs surface insights; experts make the call.

- They don’t execute actions. They’re a knowledge layer, not an execution engine. Scoring, forecasting, and transactions still run through your BI platforms, risk engines, and ERP systems.

- They don’t expose data externally. All processing happens inside your compliant infrastructure (HIPAA, SOC 2, ISO 27001). No customer data, PHI, or proprietary information leaves the perimeter. Every interaction is logged for full auditability.

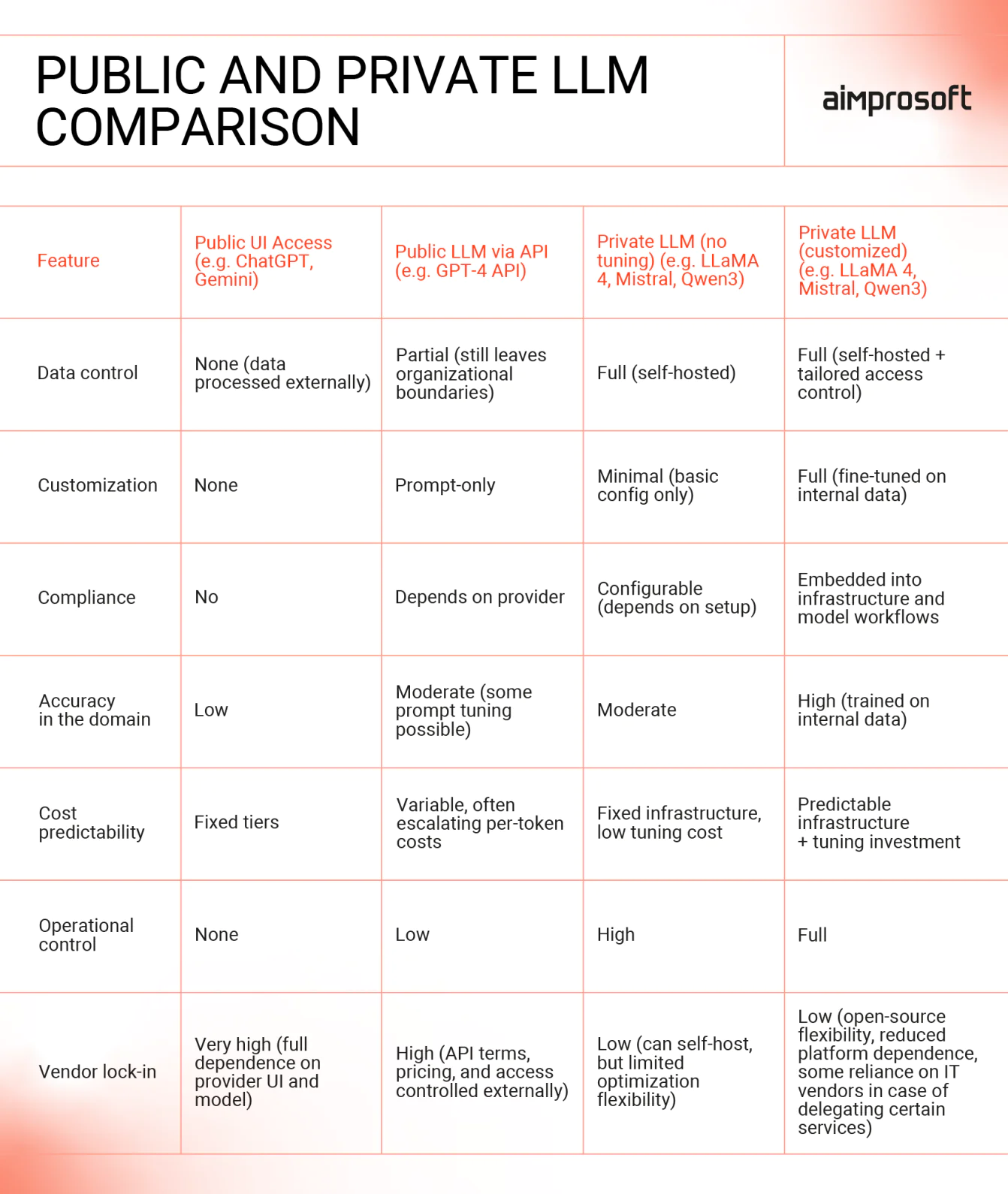

Public vs private LLMs: where each makes sense

By now, you might be asking: why not just use ChatGPT or Claude with a paid subscription?

For lightweight tasks, a $20/month subscription will do the trick. That includes drafting emails, brainstorming ideas, and summarizing public content. But the moment AI touches regulated data, operational workflows, or decisions that need to be explained in an audit, the requirements change.

Yes, private LLMs require upfront investment. You’re paying for infrastructure, deployment, and integration, not just API credits. But that investment buys you something public models can’t offer: full control over your data, complete audit trails, and the ability to tune the system to your exact business logic.

The real question isn’t cost; it’s risk and readiness.


Private LLMs aren’t magic — they’re infrastructure. They don’t replace your experts, your systems, or your judgment. But in the right context, they unlock speed, compliance, and control that public models simply can’t deliver.

If you’re ready to explore what that looks like for your organization, let’s talk.