How to Select and Build Your Own Private LLM Stack: Strategic Guide for Tech Leaders


In the first part of our LLM series, we examined why enterprises are shifting away from public LLM APIs toward private deployments. That move is rarely about novelty. It’s usually driven by hard limits with public APIs: compliance requirements that can’t be met, models that don’t fully capture the company’s domain knowledge, sensitive data ending up outside company control, or monthly bills that grow faster than actual usage. Once those limits bite, the decision to go private is straightforward; the hard part comes next.

Building a private LLM stack is not a matter of “pick a model and deploy.” You’re suddenly deciding how to host it, how to fine-tune it, how to connect it to your data, how to keep latency predictable, and how to ensure every request is secure and auditable. The options are endless, and so are the ways to get it wrong.

This article is for the people behind the technical foundation: the CTOs, AI architects, and engineering leads who will choose, integrate, and maintain the stack. We’ll explain the factors to consider when building a private LLM stack, explore how to avoid the traps teams keep falling into, and equip you with a checklist to help you assess whether your company is ready to build and run a private LLM. The aim isn’t to hand you a universal blueprint, because one doesn’t exist. Instead, we’ll show you proven building blocks and trade-offs that will guide you toward a stack fit for your business.

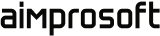

Defining your private LLM: the critical dimensions

When you design a private LLM, you’re not choosing a single “type.” You’re defining it across several independent dimensions, such as training approach, modality, licensing, and specialization. Each decision shapes control, performance, cost, and compliance. Your final deployment is the result of how these dimensions intersect, so clarifying them early helps avoid costly redesigns down the line.

How to define your private LLM

1. Training approach: how the model learns and adapts to your data

Private LLM deployments almost always need some level of domain alignment, either through lightweight tuning or smarter retrieval. The right approach depends on your data availability, GPU budget, and how frequently your domain changes. Start lean, prove value, and only then invest in deeper customization.

Pre-trained base

Host an open-weights model and evaluate it as-is. This gives you a fast, low-cost baseline for experimentation and RAG pipelines.

Fine-tuned

Train the base model further on proprietary datasets to capture domain-specific terminology, formats, and workflows. There are several approaches to fine-tuning, most of which fall under the umbrella of parameter-efficient fine-tuning (PEFT). These methods update only a small portion of the model instead of retraining everything. Two of the most widely used for fine-tuning private LLMs are:

- LoRA (Low-Rank Adaptation): inserts small trainable matrices for efficient domain alignment.

- QLoRA (Quantized LoRA): a memory-optimized variant that makes fine-tuning large models feasible on smaller GPUs.

These methods balance accuracy, cost, and speed, which is why they dominate private deployments.
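To make the efficiency claim concrete, here is a minimal sketch of the arithmetic behind LoRA. It assumes the standard formulation, where the update to a weight matrix of shape `d_out x d_in` is replaced by the product of two small matrices of rank `r`. The function name and the 4096 x 4096 example are illustrative, not taken from any specific model.

```python
def lora_param_counts(d_in: int, d_out: int, rank: int) -> dict:
    """Compare trainable parameters for one weight matrix: full update vs. LoRA."""
    full = d_in * d_out            # every entry of W (d_out x d_in) is trainable
    lora = rank * (d_in + d_out)   # A is (rank x d_in), B is (d_out x rank)
    return {"full": full, "lora": lora, "fraction": lora / full}


# Hypothetical 4096 x 4096 projection matrix with rank-8 adapters.
counts = lora_param_counts(d_in=4096, d_out=4096, rank=8)
print(counts)  # {'full': 16777216, 'lora': 65536, 'fraction': 0.00390625}
```

Under these assumptions the adapter trains about 0.4% of the parameters of that one matrix, which is why adapter checkpoints stay small enough to version and swap per use case.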

Instruction-tuned

Adapt the model to follow natural-language instructions and multi-step prompts more reliably. This improves usability for business teams who want the model to “just work” without prompt engineering. Instruction tuning is often combined with PEFT so the model both understands your domain and responds in the right workflow style.

2. Modality: what types of data the model supports

Most private enterprise use is still text-only, which simplifies serving and cost. If your workflows include visuals (reports, scanned docs, forms), a multimodal stack can pay off, but expect added engineering and GPU requirements. Validate the ROI before committing.

- Text-only

Powers chat, drafting, classification, and RAG over internal knowledge bases.

- Multimodal (text + image / audio / video etc.)

Useful for document understanding, visual QA, audio transcripts, video inputs, diagrams, forms, etc. Some open-weights / self-hostable models include: LLaVA, IDEFICS-2, Phi-4-Multimodal-Instruct, Qwen2.5-VL, Qwen2.5-Omni, Pixtral-12B, Molmo, InternVL 2.5.

3. Licensing & access: the boundaries of control

For private stacks, open-weights with commercial-friendly licenses (e.g., Apache 2.0, MIT, BSD, OpenRAIL-M) are generally preferred, as they allow you to host, adapt, and redistribute internally without vendor lock-in. Closed-source models are faster to deploy and require less in-house expertise but create ongoing vendor dependency and limit customization. In either case, read the license fine print, which covers usage rights, redistribution rules, and safety requirements, and confirm it fits your compliance posture. Favor projects with clear governance, active maintenance, and predictable long-term availability.

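Examples of recent open-weights models that teams evaluate for private deployments include the following (license terms differ between releases and model sizes, so verify each one on its model card):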

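- Qwen2.5-72B (Qwen license rather than the Apache 2.0 license used for most other Qwen2.5 sizes; multilingual, long-context)

- Mistral Large Instruct 2407 (Mistral Research License; commercial use requires a separate agreement with Mistral AI; near-GPT-4 performance)

- Phi-4 (MIT, lightweight, efficient for smaller GPUs)

- Falcon 180B (Falcon-180B TII License, which adds usage restrictions to Apache 2.0-style terms; strong at factual QA)

- Gemma 3 (Gemma Terms of Use, a custom license that permits commercial use subject to a prohibited-use policy; efficient for smaller-scale or edge AI deployments)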
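Because license terms vary by model and even by model size, it can help to encode your approved licenses as a simple gate in the model intake process. The sketch below is illustrative only: the allowlist, the model names, and the license tags are hypothetical placeholders, and the real tag should come from each model card after legal review.

```python
# Licenses your legal team has pre-approved (illustrative; confirm with counsel).
APPROVED_LICENSES = {"apache-2.0", "mit", "bsd-3-clause"}

# Hypothetical candidates; replace with the license stated on each model card.
candidates = [
    {"name": "model-a", "license": "apache-2.0"},
    {"name": "model-b", "license": "research-only"},
    {"name": "model-c", "license": "MIT"},
]


def split_by_license(models, approved=APPROVED_LICENSES):
    """Return (cleared, needs_review) name lists based on a normalized license tag."""
    cleared, needs_review = [], []
    for model in models:
        tag = model["license"].strip().lower()
        (cleared if tag in approved else needs_review).append(model["name"])
    return cleared, needs_review


cleared, needs_review = split_by_license(candidates)
print(cleared)       # ['model-a', 'model-c']
print(needs_review)  # ['model-b']
```

Anything that lands in `needs_review` is not rejected outright; it simply goes to a person who reads the fine print before the model enters the stack.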

4. Specialization: general-purpose vs. domain-specific

Decide whether you need breadth or depth. General-purpose models fine-tuned with PEFT often beat bespoke vertical models on total cost and maintainability; still, domain models can be valuable accelerators in highly specialized contexts. The best path is usually to start from a strong general base and then specialize where it measurably improves outcomes.

General-purpose (as base)

Flexible across tasks; ideal starting point for PEFT/LoRA.

Domain-specific (open)

Useful for vocabulary, benchmarks, and patterns in specialized fields. Examples of open domain-specific models include:

- Finance: FinGPT, InvestLM, FinMA-ES, FinTral — tuned for sentiment analysis, earnings call summarization, forecasting, and financial reasoning.

- Healthcare & Biomedicine: BioGPT, ClinicalGPT, PMC-LLaMA, MedAlpaca — focused on medical Q&A, literature summarization, and clinical note understanding.

- Legal: LexLM — trained on legal corpora for contract analysis and legal reasoning.

- Science & Research: SciGPT — fine-tuned on scientific papers for research summarization and hypothesis generation.

Defining your LLM across these dimensions (training approach, modality, licensing, and specialization) is less about labeling and more about setting the right boundaries for the stack you’ll build. Each choice has downstream effects: the hardware you’ll need, the orchestration you’ll rely on, and the compliance guardrails you’ll enforce. With this groundwork in place, we can now shift to the technology layers that turn these decisions into a functioning private LLM stack.

LLM stack overview: core components

Before building or customizing your own private LLM deployment, it’s essential to understand the major components that form the backbone of any production-grade enterprise AI stack. While individual setups vary depending on goals, budget, and technical maturity, most enterprise-grade systems involve several key layers, from model selection to hardware, orchestration, and post-deployment serving. Below is a reference table covering these core layers and the technologies most commonly used to build a private LLM stack.

| Layer/Function | Technology options (common) | What to pay attention to & why it matters |
|---|---|---|
| Model family | Qwen2.5, Gemma 3, Phi-4, Mistral/Mixtral, Pixtral, InternVL | Model family affects licensing terms, GPU requirements, and how easily the model adapts to domain data through PEFT/LoRA. Favor projects with clear governance and commercial-friendly licenses. |
| Hosting environment | On-premises, private VPC, hybrid setups with managed inference over private networking (e.g., Amazon SageMaker endpoints via AWS PrivateLink/VPC endpoints; Azure Machine Learning via Azure Private Link; Google Vertex AI via Private Service Connect) | Impacts compliance, data sovereignty, and cost. Match hosting to regulatory rules; e.g., GDPR/HIPAA workloads often call for on-premises or private VPC deployment. |
| Containerization | Docker, Podman | Keeps environments reproducible across air-gapped, regulated, or multi-cloud setups. Crucial for audits and smooth CI/CD. |
| Orchestration | Kubernetes, Nomad | Manages scaling and fault tolerance for inference/training. Choose tooling your team knows; orchestration delays can block compliant LLM rollouts. |
| Hardware (compute) | NVIDIA A100/H100, AMD MI300 | Determines speed, concurrency, and cost. GPU scarcity or budget caps may force smaller models, quantization, or hybrid CPU/GPU pipelines. |
| Fine-tuning frameworks | Hugging Face Transformers and PEFT (LoRA, QLoRA), DeepSpeed, Axolotl, Unsloth | Enables domain-specific adaptation. Parameter-efficient methods cut GPU hours, which matters if infra is shared or regulated. |
| Embeddings | BGE-M3, NV-Embed-v2, Nomic-Embed-Text-v1, Conan-Embedding-v2 | Embedding model choice strongly influences retrieval quality in RAG pipelines and semantic search. For private deployments, prefer open-weight models with good benchmark performance (e.g., BGE-M3, Nomic-Embed) that also support long contexts. |
| Vector DBs | Qdrant, Weaviate, Milvus, PostgreSQL (pgvector), Cassandra, OpenSearch | Vector databases drive retrieval speed and relevance in RAG flows. For private deployments, choose self-hostable versions to keep full control over sensitive data. |
| Retrieval (RAG) | LangChain, LangGraph, LlamaIndex, Haystack, RAGFlow | Improves factual accuracy and reduces retraining costs. Beware of over-complex RAG graphs that slow latency-sensitive apps. |
| Monitoring & observability | Prometheus + Grafana, OpenTelemetry, Weights & Biases (self-hosted) | Needed to catch drift, performance drops, or bias in production. You must track both infrastructure and model-level metrics for audits. |
| Security | OIDC, OAuth2, Kubernetes RBAC, AWS Secrets Manager, Azure Key Vault, HashiCorp Vault | Protects sensitive training and inference data. Use secret managers to rotate credentials, enforce least-privilege access, and secure endpoints within private networks to reduce risk of misuse. |
| API gateway & serving | FastAPI, BentoML, vLLM, Ollama, Triton Inference Server, TorchServe, Seldon Core, OpenLLM | Controls access, scaling, and versioning. Some tools make multi-model routing or A/B testing much easier, which is key for iterative LLM tuning. |
| CI/CD & deployment | GitHub Actions, Jenkins, ArgoCD, Helm | Automates reproducible rollouts. In regulated contexts, CI/CD must log every model change for traceability. |
| Logging | Fluent Bit, ELK Stack, Loki | Centralizes inference and system logs for debugging, performance tuning, and audit trails. Ensure logs stay in trusted regions, since exporting them can break compliance. |
| Inference optimization | ONNX Runtime, TensorRT, Hugging Face Optimum, OpenVINO | Reduces latency and hardware load. Especially valuable when GPUs are scarce or when running multiple private LLMs in parallel. |

Additional insights

While the table above provides a quick reference of available options, some choices carry trade-offs that aren’t obvious at a glance, especially in the context of private LLM deployments where compliance, security, and infrastructure constraints shape every decision. The notes we provide below expand on those categories to help you evaluate not just what to use, but why it matters for your long-term strategy.

Model family

Choosing a model family shapes your deployment’s performance ceiling, hardware footprint, and licensing flexibility. Recent open-source options like Qwen2.5, Gemma 3, Phi-4, Mistral/Mixtral, Pixtral, and InternVL are widely used in private LLM setups because they can be fully hosted, fine-tuned, and audited in-house. Mistral and Mixtral remain strong choices for efficiency on limited GPUs, while families like Qwen and Gemma scale well for larger workloads. Your choice should reflect both current needs and the long-term cost of switching if your data volume or compliance scope grows.

Hosting environment

Private LLMs typically run in on-premises data centers, isolated virtual private clouds (VPCs), or cloud-native environments with strict isolation policies (e.g., Amazon SageMaker endpoints reached over AWS PrivateLink, or Azure Machine Learning with Azure Private Link). The hosting choice is usually driven by compliance (GDPR, HIPAA, SOC 2), operational maturity, and long-term cost modelling. For highly regulated workloads, deploying on-premises or in a tightly controlled VPC remains the safest bet. Still, many organizations weigh local against cloud hosting to find the right balance between control and scalability, while hybrid models can help with burst capacity (temporarily scaling computing resources when demand spikes). When evaluating providers, leaders often ask which cloud is best for LLM deployment; the answer depends on specific compliance requirements, regional availability, and cost efficiency.

Orchestration

Kubernetes remains the most common choice for orchestrating private LLM workloads thanks to its autoscaling, fault tolerance, and ecosystem maturity. Nomad is valued for its simpler footprint in smaller or latency-critical deployments. Orchestration isn’t just about managing containers. It’s about ensuring that inference jobs consistently meet latency and uptime targets, that fine-tuning runs finish on schedule, and that compliance logging is integrated into every execution.

Fine-tuning frameworks

Hugging Face Transformers is still the default toolkit for custom model development, offering the broadest model library and integration support. DeepSpeed and LoRA are critical for resource-efficient fine-tuning, reducing GPU hours and cost. PEFT and Axolotl simplify adapter-based approaches that preserve the base model while adding domain knowledge, a method often preferred when compliance demands a clear lineage of training data and model versions.

Retrieval-augmented generation (RAG)

RAG isn’t always mandatory for private LLMs, but it’s often the fastest path to boosting factual accuracy without retraining the model. By using embeddings to retrieve knowledge stored in a vector database and feeding that text into the LLM’s context, you can keep responses aligned with your internal knowledge base while avoiding data leakage to third parties. However, RAG adds architectural complexity and can increase latency, so it’s best suited for use cases where the cost of incorrect answers is high (e.g., legal, healthcare, fintech) or where knowledge updates are frequent. In some workflows, a fine-tuned domain model can replace or simplify RAG, but in most enterprise contexts, the two approaches complement each other.
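The retrieve-then-prompt flow described above can be sketched in a few lines. This is a toy illustration, not a production design: the bag-of-words `embed` function stands in for a real embedding model, the in-memory list stands in for a vector database, and the documents and prompt wording are invented for the example.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding'; a real stack would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list, k: int = 1) -> list:
    """Rank documents by similarity to the query and keep the top k."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(query: str, context: list) -> str:
    """Place retrieved passages into the model's context ahead of the question."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"


docs = [
    "Refunds are processed within 14 days of the return request.",
    "Our office is closed on public holidays.",
    "Passwords must be rotated every 90 days.",
]
top = retrieve("How long do refunds take?", docs, k=1)
prompt = build_prompt("How long do refunds take?", top)
print(prompt)
```

Swapping `embed` for a self-hosted embedding model and the list for a vector store changes the quality and scale of retrieval, but not the shape of the pipeline.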

Want a deeper dive into RAG? Check out our article on how RAG-powered AI solutions work in practice to see real-world usage examples and business benefits.

A private LLM stack isn’t just a technology list. It’s a tightly coupled system of choices that define how secure, compliant, scalable, and adaptable your AI will be. The trade-offs you make now will affect how easily you can upgrade models, integrate new data sources, or pass audits later. Selecting each component with both present needs and future flexibility in mind is key to avoiding expensive re-architecture. In the next section of this local LLM deployment guide, we’ll dive into the critical factors influencing private LLM selection, from matching architecture to compliance rules to aligning hardware with operational demands.

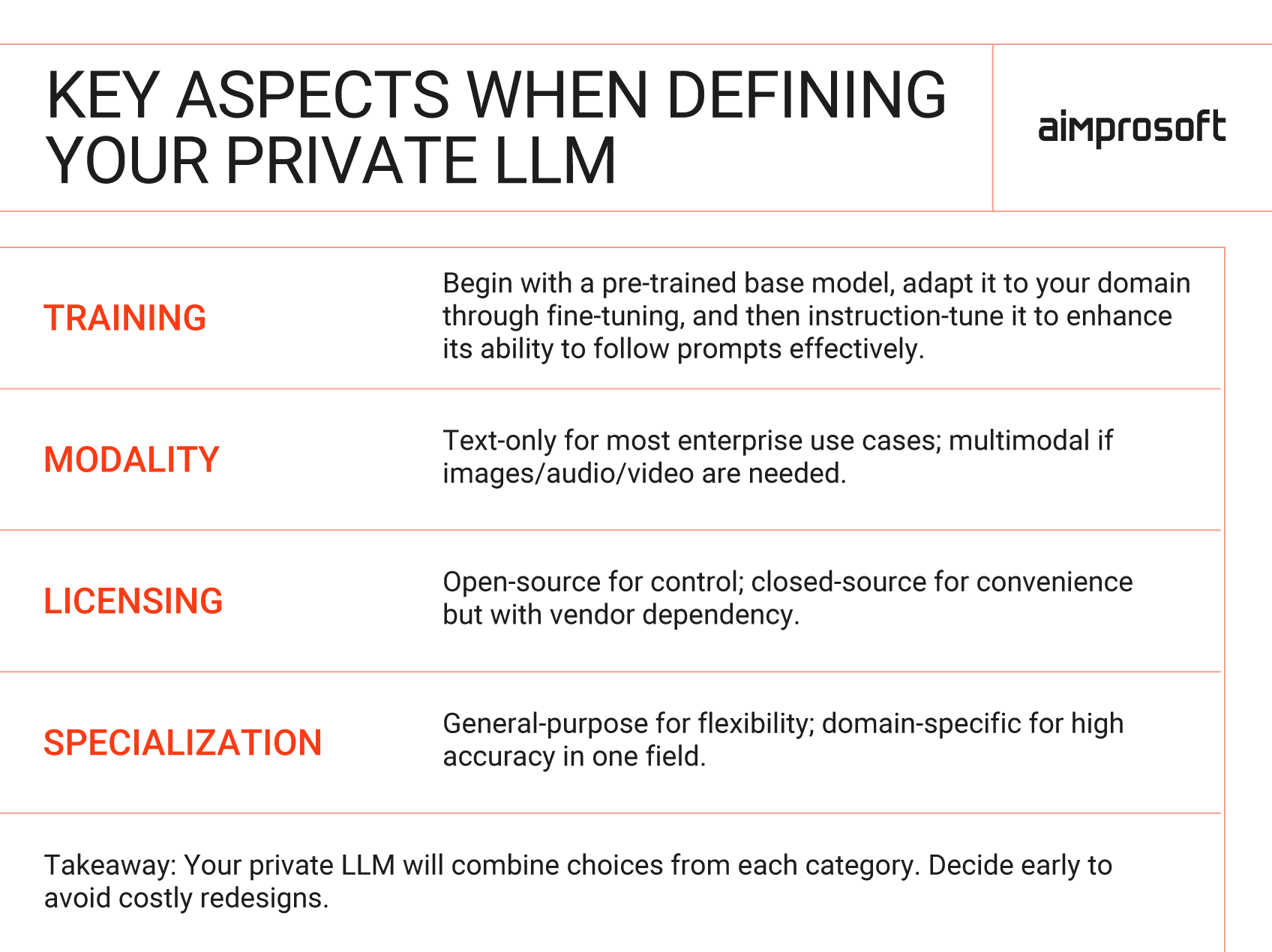

Model selection & infrastructure decision factors

Choosing the right model and infrastructure for a private LLM stack isn’t about chasing the latest benchmark winner. It’s about aligning technical capabilities with business needs, compliance rules, and the realities of your operational environment. These considerations are central to any enterprise LLM deployment guide and frame the five factors discussed below.

Choosing a private LLM

- Data sensitivity & regulatory alignment

If you work with regulated or confidential data (such as patient records, financial transactions, or legal documents), deployments must prioritize isolation, auditability, and compliance. Running the system in your own data center or within a private cloud environment often becomes mandatory for meeting requirements like GDPR, HIPAA, or SOC 2. Model choice should also account for how easily privacy measures, such as anonymization, encryption, or strict access controls, can be applied during both training and inference.

- Accuracy vs. versatility

Some models excel at accuracy on narrow, domain-specific tasks once fine-tuned. Others trade that precision for broader versatility, handling a wide range of prompts without extra training. If your risk tolerance for incorrect or “hallucinated” answers is low, lean toward smaller models fine-tuned for your domain. If your workflows demand flexibility across many tasks, prioritize larger general-purpose models optimized for strong prompt understanding and reasoning.

- Hardware requirements

Larger models with billions of parameters often require high-end hardware (e.g., multiple A100/H100 GPUs) to run efficiently. If your infrastructure is limited, focus on smaller, more efficient model families such as Mistral, LLaMA, Gemma, or Phi, and use optimization methods such as quantization (reducing the precision of model weights to use less memory and run faster). Serving frameworks like vLLM or TGI (Text Generation Inference) can improve throughput and reduce latency, helping you maximize performance without overprovisioning hardware.

- Licensing, ecosystem & roadmap

Open-weights models vary widely in licensing terms and long-term support. Some restrict commercial use, while others may lack active maintenance or a clear governance model. Always verify license conditions and ensure compatibility with your existing tooling (e.g., Hugging Face, DeepSpeed). Choosing a model backed by a strong ecosystem and active development roadmap reduces integration effort and lowers the risk of costly migrations later.

- Total cost of ownership

Private LLMs require upfront investment in hardware and setup, but they can reduce long-term operating costs compared to paying per request. To make a sustainable decision, model your expected usage, estimate infrastructure and maintenance costs, and calculate when the investment will break even. Hybrid setups, where most requests are handled locally while occasional overflow goes to a cloud fallback, can help balance costs with flexibility.
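The break-even calculation mentioned under total cost of ownership can be sketched as below. All figures are hypothetical, and the model is deliberately simple: it assumes flat monthly costs and ignores usage growth, hardware refresh, and staffing changes.

```python
import math


def break_even_month(upfront: float, monthly_private: float, monthly_api: float):
    """First month in which cumulative private cost <= cumulative API cost, or None."""
    saving = monthly_api - monthly_private
    if saving <= 0:
        return None  # private hosting never catches up at these run rates
    return math.ceil(upfront / saving)


# Hypothetical figures for illustration only.
month = break_even_month(upfront=120_000, monthly_private=8_000, monthly_api=18_000)
print(month)  # 12
```

If the function returns `None`, the private run rate is at least as high as the API bill, which usually signals that utilization is too low to justify dedicated hardware yet.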

Our AI experts can help you evaluate models, architecture, and infrastructure for your unique business needs.

These factors rarely exist in isolation. To build a private LLM stack, you need to balance speed with control, compliance with cost, and customization with operational complexity. Once the right model and infrastructure are in place, the next challenge is designing the data pipeline and training flows that make those choices work reliably in production.

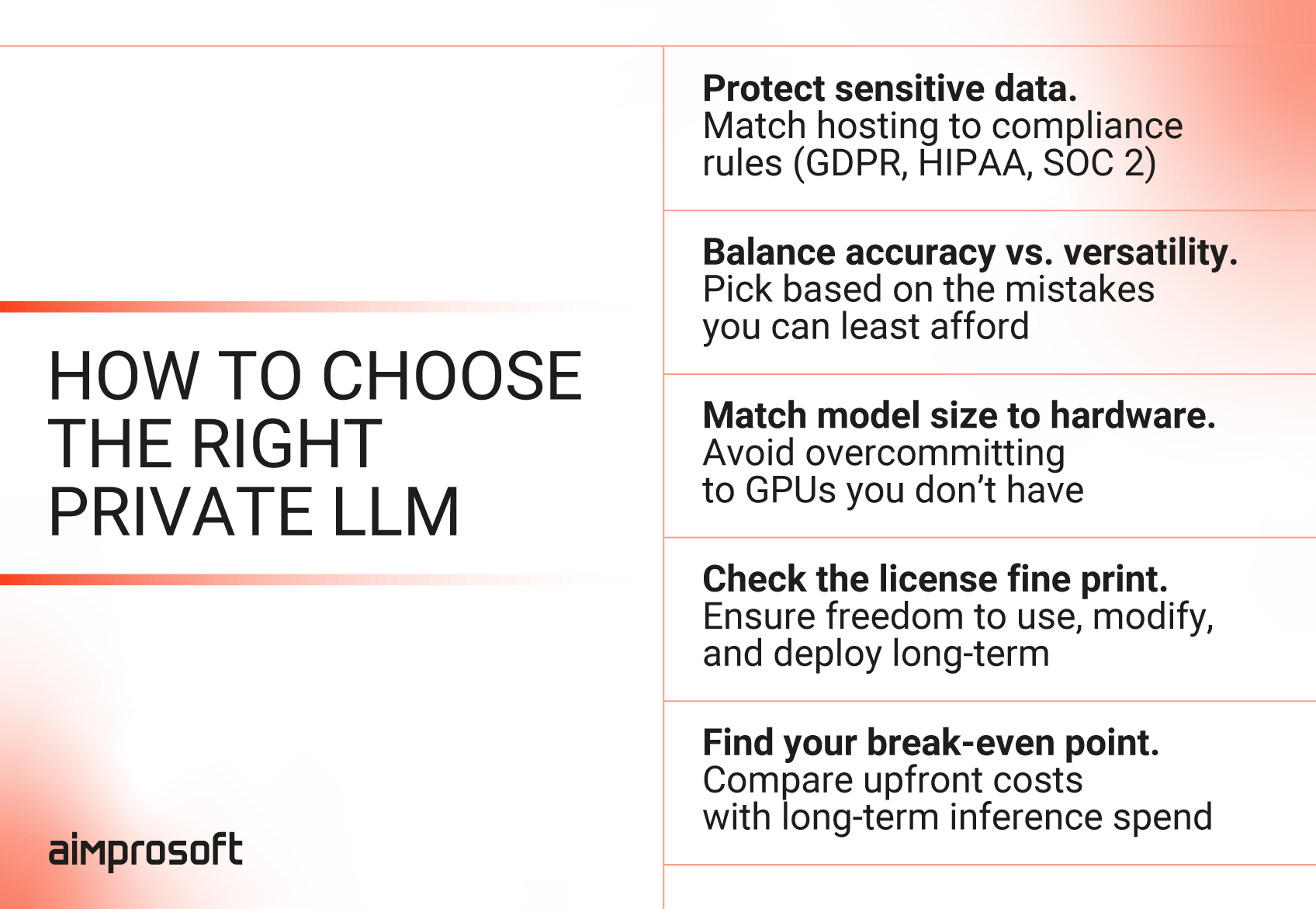

Data pipeline & training flow design

The foundation of a reliable private LLM lies not only in the model you choose, but in how your data is ingested, processed, and validated at every stage. Even the strongest architecture will underperform if it’s trained on poorly prepared datasets or lacks a feedback loop for ongoing refinement. A typical private LLM training flow includes the following stages:

Private LLM training flow

1. Data ingestion & preprocessing

The pipeline begins with collecting datasets such as internal documents, logs, knowledge bases, or chat transcripts. In some cases, external or public datasets may also be useful, provided their licenses allow commercial use and redistribution. Raw data is rarely usable as-is, so preprocessing is critical.

- Remove irrelevant, outdated, or duplicate entries.

- Normalize formats (dates, units, currencies) and perform text cleanup (e.g., removing formatting artifacts, fixing encoding issues).

- Apply redaction or masking for sensitive data (PII).

- Tag or group documents by context to support structured fine-tuning and, if needed, later retrieval.

- Ensure preprocessing aligns with the intended fine-tuning task (e.g., instruction following, domain specialization, retrieval augmentation).

A strong preprocessing phase reduces noise, improves training outcomes, and leads to more predictable model behavior.
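A few of the preprocessing steps above (text cleanup, PII masking, and de-duplication) can be sketched with the standard library alone. The regular expressions here are deliberately crude assumptions for illustration: they catch simple email and phone-like patterns, will also mask some non-phone digit runs, and are no substitute for a dedicated PII detection tool.

```python
import re

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def clean(text: str) -> str:
    """Collapse whitespace and mask simple PII patterns (emails, phone-like numbers)."""
    text = re.sub(r"\s+", " ", text).strip()
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


def preprocess(records: list) -> list:
    """Clean each record, then drop empties and exact duplicates (order preserved)."""
    seen, out = set(), []
    for record in records:
        cleaned_record = clean(record)
        if cleaned_record and cleaned_record not in seen:
            seen.add(cleaned_record)
            out.append(cleaned_record)
    return out


raw = [
    "Contact  jane.doe@example.com for access.",
    "Contact jane.doe@example.com for access.",
    "Call +1 (555) 010-2000 after 5pm.",
    "   ",
]
cleaned = preprocess(raw)
print(cleaned)  # ['Contact [EMAIL] for access.', 'Call [PHONE] after 5pm.']
```

Masking before de-duplication is a deliberate choice in this sketch: records that differ only in the personal data they contain collapse into one training example.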

2. Fine-tuning method

Fine-tuning adapts a base model to your domain. The choice depends on goals, budget, and available hardware.

| Method | Description | Best for |
|---|---|---|
| Full fine-tuning | Retrains all model parameters, producing a deeply customized version aligned with proprietary data. Requires massive computing resources, long training cycles, and large curated datasets. | Large enterprises in regulated industries (e.g., fintech, legal, healthcare) where compliance risk is high, accuracy must be near-perfect, and budgets allow heavy infrastructure spend. |
| LoRA | Inserts low-rank adapters into the model and updates only those, reducing computing cost while still tailoring the model to your data. | Mid-sized companies or enterprise teams needing domain-specific improvements (e.g., retail, logistics, HR tech) without retraining the full model. |
| QLoRA | A memory-efficient variant of LoRA that combines adapters with quantization, enabling fine-tuning of very large models on smaller GPUs. | Teams with limited GPU resources or startups experimenting with large open models on modest infrastructure. |
| PEFT (Parameter-Efficient Fine-Tuning) | A broader family of approaches (including LoRA, QLoRA, adapters, and prefix-tuning) that update only small portions of the model instead of retraining the whole network. Supports maintaining multiple lightweight “specialized” versions from a single base model. | Product companies, SaaS vendors, or consultancies that need multiple tailored versions for varied workloads without operating many full-scale models. |
| Instruction / Supervised Fine-Tuning (SFT) | Fine-tunes the model on carefully designed prompt–response pairs, improving its ability to follow instructions, style, and tone. Often combined with PEFT for domain specialization. | Companies improving model usability, safety, or customer-facing assistants where alignment and natural interaction matter more than deep domain expertise. |

For many teams, a practical balance is a parameter-efficient method such as QLoRA, sometimes layered with instruction tuning. This approach provides strong performance while keeping resource usage and maintenance manageable, especially when implemented with widely adopted toolkits such as Hugging Face Transformers and PEFT, Axolotl, or DeepSpeed.

3. Validation and benchmark loop

After fine-tuning, performance must be measured on data that reflects your domain. Some best practices include:

- Evaluate using held-out datasets and carefully designed synthetic/adversarial test prompts.

- Measure not only accuracy, but also hallucination rate, relevance, and robustness.

- Automate evaluation as part of your CI/CD pipeline, integrating frameworks like Giskard, DeepEval, or TruLens in self-hosted mode to avoid data leakage.

- Incorporate human-in-the-loop review for critical tasks during early production stages.

Public benchmarks such as HELM or frameworks like EleutherAI’s lm-evaluation-harness (the backend of the Hugging Face Open LLM Leaderboard) can provide broad comparisons, but they won’t capture how well the LLM performs on your specific use cases. For production decisions, validation should be based on datasets aligned with your business KPIs (e.g., customer support accuracy, compliance errors caught, contract clauses interpreted correctly).

A strong validation loop ensures that improvements are measurable, repeatable, and aligned with business outcomes, not just generic benchmark scores.
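An automated evaluation gate of the kind described above might look like the following sketch. It is an assumption-heavy toy: `fake_model` stands in for a call to your self-hosted endpoint, the two test cases are invented, and substring matching is only the simplest possible scoring rule. Dedicated evaluation frameworks offer far richer metrics.

```python
def evaluate(model_fn, cases) -> float:
    """Run each held-out case and score it by case-insensitive substring match."""
    passed = sum(
        1 for case in cases
        if case["expected"].lower() in model_fn(case["prompt"]).lower()
    )
    return passed / len(cases)


def gate(score: float, threshold: float = 0.9) -> bool:
    """CI/CD gate: block promotion when the score falls below the threshold."""
    return score >= threshold


def fake_model(prompt: str) -> str:
    """Stand-in for a call to your self-hosted model endpoint (hypothetical)."""
    answers = {"notice period": "The notice period is 30 days."}
    return next((a for k, a in answers.items() if k in prompt.lower()), "I don't know.")


cases = [
    {"prompt": "What is the notice period?", "expected": "30 days"},
    {"prompt": "Who signs the contract?", "expected": "the CFO"},
]
score = evaluate(fake_model, cases)
print(score, gate(score))  # 0.5 False
```

Wiring `gate` into the pipeline as a failing exit code is what turns evaluation from a report into a release control.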

4. Versioning and feedback

Private LLMs for enterprises are evolving systems that require version control, monitoring, and structured feedback to remain reliable. Some of the best practices we recommend are:

- Version datasets and model checkpoints (e.g., with DVC or Hugging Face Hub)

- Track performance drift over time to spot degradation early

- Collect structured user feedback through rating systems or ranked outputs

- Build retraining loops that adapt to both data quality and changing business needs
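Tools such as DVC handle dataset versioning at scale, but the underlying idea fits in a short sketch: derive a version ID from the data itself and record it next to the checkpoint. The function names and manifest fields below are hypothetical, chosen only to illustrate the pattern.

```python
import hashlib
import json


def dataset_fingerprint(records: list) -> str:
    """Order-independent SHA-256 over the records, usable as a dataset version ID."""
    digest = hashlib.sha256()
    for record in sorted(records):
        # Hash each record separately so record boundaries cannot be confused.
        digest.update(hashlib.sha256(record.encode("utf-8")).digest())
    return digest.hexdigest()


def manifest(base_model: str, adapter_version: str, records: list) -> str:
    """JSON manifest tying a checkpoint to the exact data it was trained on."""
    return json.dumps(
        {
            "base_model": base_model,
            "adapter": adapter_version,
            "dataset_sha256": dataset_fingerprint(records),
            "num_records": len(records),
        },
        sort_keys=True,
    )


v1 = dataset_fingerprint(["doc a", "doc b"])
v1_reordered = dataset_fingerprint(["doc b", "doc a"])
v2 = dataset_fingerprint(["doc a", "doc b", "doc c"])
print(v1 == v1_reordered, v1 == v2)  # True False
```

Storing the manifest alongside each adapter checkpoint gives auditors a direct answer to the question of which data produced which model version.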

Designing the right data pipeline isn’t just technical hygiene; it directly determines how useful, safe, and scalable your private LLM becomes. Skipping steps like proper preprocessing or validation leads to unreliable outputs and mounting technical debt. In the next section, we’ll explore how to overcome the architectural and operational challenges that emerge once your private LLM stack starts taking shape.

Common implementation challenges & solutions

Even well-designed private LLM stacks face practical roadblocks during implementation. Below are some of the most common technical, operational, and compliance-related issues, and actionable strategies we recommend applying to handle them.

1. GPU resource bottlenecks

- Challenge: Access to top-tier GPUs is often limited or costly, which slows training and reduces the size of batches you can process. Teams evaluating the best GPU for LLM training quickly realize that hardware choices are constrained not only by budget but also by the overall GPU requirements for LLM workloads.

- Solution: Use parameter-efficient methods like QLoRA (quantization + lightweight fine-tuning) to reduce GPU load. Where possible, rent “spot instances” (discounted spare cloud capacity that the provider can reclaim at short notice, so checkpoint training jobs frequently) or use shared GPU clusters to stretch capacity.
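A rough sizing rule helps explain why quantization eases GPU pressure: weight memory scales with parameter count times bits per weight. The sketch below covers model weights only and assumes a hypothetical 70-billion-parameter model; activations, KV cache, and optimizer state add to the real footprint.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights only (no activations, KV cache, or optimizer state)."""
    bytes_total = params_billion * 1e9 * bits_per_weight / 8
    return bytes_total / 1e9  # decimal gigabytes


# Hypothetical 70B-parameter model at two precisions.
fp16 = weight_memory_gb(70, 16)
int4 = weight_memory_gb(70, 4)
print(fp16, int4)  # 140.0 35.0
```

Under these assumptions, 4-bit weights need a quarter of the memory of 16-bit weights, which is the main reason QLoRA can fit large base models on fewer or smaller GPUs.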

2. Prompt drift and inconsistency

- Challenge: Over time, prompts that once worked well lose effectiveness as business requirements or workflows evolve.

- Solution: Treat prompts like code, namely, version them, document changes, and store them in libraries. Review usage data regularly and update prompts to reflect new terminology, processes, or compliance constraints.
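Treating prompts like code can start with something as small as a versioned registry. The class below is a hypothetical in-memory sketch; in practice the same structure would usually live in Git or a database so that changes are reviewed and traceable.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PromptRegistry:
    """In-memory prompt store; each update appends a new version instead of overwriting."""
    _versions: dict = field(default_factory=dict)

    def register(self, name: str, template: str, note: str) -> int:
        history = self._versions.setdefault(name, [])
        history.append({"template": template, "note": note})
        return len(history)  # 1-based version number

    def get(self, name: str, version: Optional[int] = None) -> str:
        history = self._versions[name]
        entry = history[-1] if version is None else history[version - 1]
        return entry["template"]


registry = PromptRegistry()
registry.register("summarize", "Summarize: {text}", note="initial")
registry.register("summarize", "Summarize in 3 bullets: {text}", note="shorter output")

latest = registry.get("summarize").format(text="Q3 report")
pinned = registry.get("summarize", version=1)
print(latest)  # Summarize in 3 bullets: Q3 report
print(pinned)  # Summarize: {text}
```

Pinning a version lets a production workflow keep a known-good prompt while a newer one is evaluated in parallel.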

3. Security vulnerabilities in model access or data exposure

- Challenge: In private deployments, risks include unauthorized model access or accidental exposure of sensitive training data. These are among the most critical private LLM security considerations that you must address.

- Solution: Enforce Role-Based Access Control (RBAC), encrypt data both when stored and transmitted, isolate workloads in private environments (e.g., VPCs or containers), and add inference guardrails (filters that prevent the model from outputting restricted or unsafe content).
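Two of the controls above, role-based access and output guardrails, can be illustrated with a minimal request handler. The roles, actions, and the single blocked pattern are hypothetical examples; a real deployment would delegate authentication to an identity provider and use a broader set of output filters.

```python
import re

# Hypothetical roles and the actions each may perform.
ROLE_PERMISSIONS = {
    "analyst": {"infer"},
    "ml_engineer": {"infer", "fine_tune"},
    "admin": {"infer", "fine_tune", "manage_keys"},
}
BLOCKED = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")  # e.g. US SSN-shaped strings


def is_allowed(role: str, action: str) -> bool:
    """Least privilege: unknown roles get no permissions."""
    return action in ROLE_PERMISSIONS.get(role, set())


def guard_output(text: str) -> str:
    """Mask restricted patterns before the response leaves the service."""
    return BLOCKED.sub("[REDACTED]", text)


def handle(role: str, action: str, model_output: str) -> str:
    """Check permissions first, then filter whatever the model produced."""
    if not is_allowed(role, action):
        raise PermissionError(f"{role!r} may not {action!r}")
    return guard_output(model_output)


print(handle("admin", "infer", "SSN 123-45-6789 on file"))  # SSN [REDACTED] on file
```

Denying by default for unknown roles keeps a misconfigured client from silently gaining access.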

4. Regulatory pressure and audit readiness

- Challenge: Proving compliance with frameworks like GDPR, HIPAA, or SOC 2 is complex without transparency in your pipeline.

- Solution: Build audit logging into every step of the data pipeline, document how training and inference data are handled, and retain logs of retraining or reprocessing so regulators can verify compliance.
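One way to make audit logs harder to alter unnoticed is to chain each record to the previous one with a hash. The sketch below is one possible approach, not a compliance-certified design: field names are hypothetical, the payload is stored only as a hash so sensitive text stays out of the log, and timestamps are omitted to keep the example deterministic.

```python
import hashlib
import json


def append_audit(log: list, event: str, actor: str, payload: str) -> dict:
    """Append a record that hashes the payload and links to the previous record."""
    prev_hash = log[-1]["record_hash"] if log else "0" * 64
    record = {
        "event": event,
        "actor": actor,
        "payload_sha256": hashlib.sha256(payload.encode("utf-8")).hexdigest(),
        "prev_hash": prev_hash,
    }
    body = json.dumps(record, sort_keys=True).encode("utf-8")
    record["record_hash"] = hashlib.sha256(body).hexdigest()
    log.append(record)
    return record


def verify(log: list) -> bool:
    """Recompute every hash; an edited record or one removed mid-chain fails the check."""
    prev = "0" * 64
    for rec in log:
        body = {k: v for k, v in rec.items() if k != "record_hash"}
        expected = hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()
        if rec["prev_hash"] != prev or rec["record_hash"] != expected:
            return False
        prev = rec["record_hash"]
    return True


log = []
append_audit(log, "inference", "svc-chat", "prompt text")
append_audit(log, "retrain", "ml_engineer", "dataset v2")
print(verify(log))  # True
```

A chain like this detects tampering but does not prevent it, so it is usually paired with write-once storage and restricted log access.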

5. Model degradation over time

- Challenge: LLM performance declines if the model isn’t refreshed with updated data that reflects current business reality.

- Solution: Schedule regular evaluations, add continual fine-tuning with new data, or implement RAG workflows to reduce dependence on static training datasets.
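Scheduled evaluations only help if something compares the results over time. A minimal sketch of that comparison is shown below; the scores and the 0.05 tolerance are hypothetical, and a production setup would typically use a statistical test over a larger window.

```python
from statistics import mean


def drifted(baseline: list, recent: list, tolerance: float = 0.05) -> bool:
    """Flag drift when the recent mean score falls more than `tolerance` below baseline."""
    return mean(baseline) - mean(recent) > tolerance


baseline_scores = [0.90, 0.92, 0.91]  # hypothetical weekly eval scores at launch
recent_scores = [0.84, 0.83, 0.85]    # hypothetical scores from the latest weeks
needs_refresh = drifted(baseline_scores, recent_scores)
print(needs_refresh)  # True
```

A positive result is a trigger for investigation (new data, changed prompts, or stale retrieval content), not automatically for retraining.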

6. Latency and scaling issues at inference

- Challenge: In real-time applications, private LLMs may respond too slowly or fail under high traffic.

- Solution: Split large models across GPUs (“model sharding”), use load balancers to distribute requests, run quantized (smaller, optimized) versions for speed, or deploy distilled models for heavy-traffic endpoints where ultra-high accuracy is less critical.
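Before choosing among sharding, quantization, or distillation, it helps to measure tail latency rather than the average, because a few slow requests dominate user experience. The sketch below uses the nearest-rank percentile method on hypothetical latency samples; the 500 ms target is an assumed service-level objective.

```python
import math


def percentile(values: list, pct: float) -> float:
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def meets_slo(latencies_ms: list, p95_target_ms: float) -> bool:
    """Check the 95th-percentile latency against a target."""
    return percentile(latencies_ms, 95) <= p95_target_ms


samples = [120, 135, 128, 140, 980, 131, 125, 138, 129, 133]  # hypothetical ms
p50 = percentile(samples, 50)
p95 = percentile(samples, 95)
print(p50, p95, meets_slo(samples, 500))  # 131 980 False
```

In this example the median looks healthy while the 95th percentile misses the target, which is the pattern that load balancing and smaller model variants are meant to address.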

7. Internal alignment gaps

- Challenge: Tech, legal, and business teams often move at different speeds, creating friction in deployment.

- Solution: Form a cross-functional steering group for the LLM initiative. Include engineering, compliance, and business leadership from the beginning to align priorities and reduce rework later.

Our AI experts can help you design a private LLM strategy that balances compliance, performance, and cost from day one.

Private LLM deployment isn’t just about choosing the right model. It’s about anticipating the roadblocks that will inevitably appear once theory meets development and production. If you plan for GPU shortages, compliance hurdles, and shifting business needs from the start, you can avoid costly rework and keep your stack reliable long term.

Checklist: is your organization ready to build and run a private LLM?

In our first article, we shared a checklist to help you decide whether a private LLM makes sense for your business in the first place. If you’ve passed that stage, the next question is readiness: do your current infrastructure, team, and processes support running a private LLM stack? This quick audit is about spotting gaps early, before they become roadblocks.

Infrastructure & compute

- Do you have access to sufficient GPU resources (on-premises or in the cloud) to handle fine-tuning and inference?

- Is your hosting environment (cloud, VPC, or on-premises) capable of meeting compliance and data sovereignty rules?

- Can you scale storage and processing capacity as model size and usage increase?

- Do you already use containers and an orchestrator (e.g., Docker with Kubernetes) to support distributed training and serving?

Data pipeline readiness

- Can your team ingest, clean, tokenize, and label internal datasets for training?

- Do you have infrastructure for dataset versioning and reproducibility?

- Are pipelines in place to periodically retrain or update models as new data arrives?

Security & compliance

- Have you defined access controls and isolation for training and inference environments?

- Can you log and trace data movement and model outputs for audits?

- Is encryption applied to data at rest and in transit?

Team & tooling

- Does your team have hands-on experience with fine-tuning frameworks (e.g., Hugging Face Transformers, LoRA/QLoRA, PEFT, Axolotl, DeepSpeed)?

- Do you have at least basic MLOps/DevOps support for lifecycle management, deployment, and monitoring?

- Are you tracking performance, drift, and versioning with structured metrics?

Budget and sustainability

- Have you estimated the total cost of ownership (hardware, storage, staffing, retraining)?

- Do you have a plan for long-term optimization (e.g., quantization, model distillation, workload offloading)?

Building a private LLM stack is less about having every box checked and more about knowing which boxes still need work. If your answers reveal gaps, that’s not failure, it’s a roadmap. With the right preparation, you can move from experimentation to a stable, scalable private LLM environment that aligns with both business and compliance goals.

Wrapping up: control, clarity, and long-term alignment

Building a private LLM stack is not about chasing the largest model or replicating what’s trending in the AI space. It’s about making deliberate choices that balance control with scalability, compliance with cost, and flexibility with operational sustainability.

With this private LLM deployment guide, we wanted to show that the real challenge isn’t only technical. It lies in aligning infrastructure, data flows, and team capacity with your business priorities. Companies that succeed with private LLMs are those that plan early, evaluate trade-offs honestly, and design their stack with long-term adaptability in mind.

There is no single “perfect” stack, but there is a stack that is right for your organization. And finding it requires expertise across architecture, compliance, and AI engineering.

If you’re considering this path, our AI team can help you create an LLM deployment strategy, identify the best-fit technologies for your private LLM stack, design sustainable pipelines, and de-risk your roadmap. Contact us to discuss how we can support your move from planning to a secure, scalable private LLM stack built around your goals.

FAQ

Where can I access managed LLM services that support multi-cloud and hybrid LLM environments?

Managed LLM services are now available from all major cloud ecosystems, often with options to deploy across multiple regions or in hybrid setups that combine cloud and on-premise infrastructure. The key is not just where you host, but how you design the architecture: workloads should be able to move between environments, compliance requirements must be met, and performance should scale predictably. Tech leaders often ask what infrastructure is needed for hybrid LLM agent deployments; the answer depends on balancing flexibility with security and cost.

Rather than locking into a single provider, many organizations build private LLM stacks that can integrate with different clouds or run in virtual private clouds (VPCs). This gives flexibility to adapt as needs change while avoiding vendor lock-in. Our team helps companies evaluate these options and design a deployment model that balances compliance, redundancy, and cost efficiency.

How does a private LLM ensure data privacy and security?

A private LLM keeps all training and inference workloads within infrastructure you control, whether on-premise, in a VPC, or in a dedicated managed environment. This prevents proprietary data from leaving your security perimeter, gives you full control over encryption, logging, and access policies, and supports regulatory compliance through auditable processes and data minimization during ingestion and fine-tuning.

Where can I run an open-source LLM like Mistral or LLaMA without setting up my own infrastructure?

You can use services such as Hugging Face Inference Endpoints, Anyscale, Replicate, or Together AI to run open-source models without managing GPUs yourself. These platforms offer scalable APIs, usage-based pricing, and preconfigured environments, making them ideal for piloting or extending workloads before committing to a full self-hosted setup.

How do I assess if my infrastructure is ready for LLM deployment?

Check for four readiness pillars: compute (enough GPU memory and throughput for your chosen model size), storage (fast access to large datasets and model weights), networking (low-latency internal communication between components), and orchestration (capability to automate scaling, updates, and retraining). Running a small-scale test deployment with representative workloads is the fastest way to validate readiness.
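
For the compute pillar, a quick back-of-the-envelope check can be done before any test deployment. The sketch below estimates the memory needed to hold model weights at a given precision and compares it with a GPU's capacity. The function names are hypothetical, and the 20% `overhead_fraction` is a placeholder assumption: real overhead for the KV cache and activations depends on context length, batch size, and the serving framework.

```python
def weight_memory_gib(params_billions, bits_per_weight):
    """Memory needed for the weights alone, in GiB."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 2**30


def fits_on_gpu(params_billions, bits_per_weight, gpu_memory_gib,
                overhead_fraction=0.2):
    """Rough check: weights plus an assumed overhead margin vs. GPU capacity."""
    needed = weight_memory_gib(params_billions, bits_per_weight)
    needed *= 1 + overhead_fraction
    return needed <= gpu_memory_gib


# A 7B-parameter model at 16-bit and 4-bit precision.
print(round(weight_memory_gib(7, 16), 2))  # about 13.04 GiB
print(round(weight_memory_gib(7, 4), 2))   # about 3.26 GiB

# A 14B-parameter model on a 24 GiB GPU, under the assumed 20% overhead.
print(fits_on_gpu(14, 16, 24))  # False: 16-bit weights alone exceed 24 GiB
print(fits_on_gpu(14, 4, 24))   # True: 4-bit weights leave ample headroom
```

Treat the result as a first filter only. The small-scale test deployment with representative workloads remains the real validation, because throughput and latency cannot be derived from memory arithmetic.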

What is the best way to deploy a private LLM in an enterprise?

Start with a reference architecture that separates training, fine-tuning, and inference environments, and that includes monitoring, CI/CD for models, and version control for datasets and weights. Use container orchestration (Kubernetes) for portability, integrate vector databases for retrieval, and implement access control at both the model and API layers to safeguard data and manage load.

Which open-source LLM has the best performance-to-cost ratio?

This depends on your use case and available hardware, but as of 2025, models like Mistral 7B, Gemma 3 (4B/12B), Phi-4, and Qwen2.5 (7B/14B) are widely recognized for delivering strong performance on enterprise tasks while running efficiently on fewer GPUs. All support quantization for reduced hardware requirements, with Mistral often favored for raw speed, Gemma and Phi for lightweight efficiency, and Qwen2.5 for scalability across multiple sizes and multilingual use.