Beyond Public AI Models: Why Enterprises Move to Private LLMs for Strategic Advantage

It often begins quietly: a subtle frustration spreads during your routine strategy meetings. Yet another unexpected pricing update arrives, compliance issues resurface, or unsettling questions arise about how public AI services handle your intellectual property. These are not isolated incidents or hypothetical concerns; they are becoming widespread operational challenges that are reshaping strategic conversations in boardrooms worldwide.

Initially, public large language models (LLMs), such as OpenAI’s ChatGPT, Google’s Gemini, and Anthropic’s Claude, promised companies a compelling combination: immediate access, minimal upfront investment, and straightforward implementation. But beneath their appealing simplicity lie hidden complexities: privacy vulnerabilities, AI data leakage, and escalating and unpredictable costs that increasingly drive organizations to reconsider their AI strategy.

In this first article of our comprehensive series exploring private LLM deployment, we’ll address why enterprises are moving beyond public models towards privately hosted, customizable solutions. As an enterprise LLM solutions development company, we’ll guide you through realistic strategies for securely adopting proven open-source models within your own infrastructure. It’s time to clearly understand the strategic and operational advantages behind the shift to private AI.

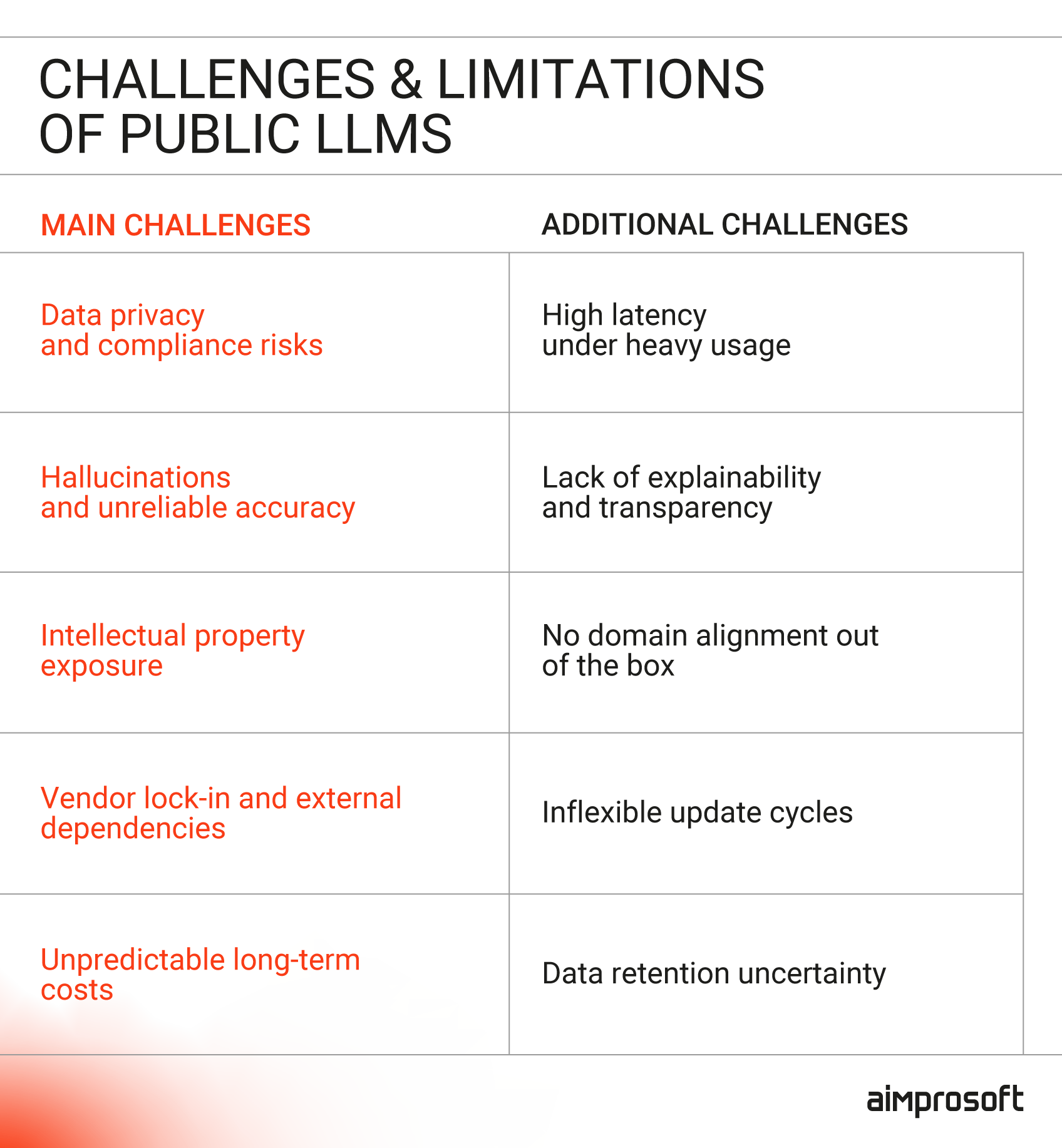

5 critical risks of public LLMs that drive enterprises to private models

Organizations initially captivated by public LLMs soon encounter operational realities that significantly diverge from the early promise. Through deeper enterprise adoption, critical shortcomings in areas such as privacy, intellectual property protection, data integrity, and cost predictability become increasingly apparent. Below, we examine five key challenges that organizations commonly face in deploying public LLMs.

Challenges and limitations of public LLMs for enterprise use

Privacy and compliance risks: the hidden cost of data exposure

Public AI models typically operate as black-box services accessed via external APIs, leaving organizations with little control over how their data is processed or retained. This lack of transparency continues to raise LLM privacy concerns at the enterprise level. In 2023, Samsung banned the use of ChatGPT and similar tools across company-owned and network-connected devices after employees unintentionally submitted confidential source code through the platform. Similarly, Verizon restricted access to ChatGPT within its corporate systems, citing risks of exposing sensitive customer data and internal IP.

These aren’t isolated decisions. They reflect a broader recognition that public LLMs carry material data risks. For organizations trying to work out how to use an LLM with private data securely and compliantly, relying on public APIs can quickly lead to regulatory exposure, reputational harm, and governance challenges. In contrast, private LLM deployments allow full control over data handling, storage, and access policies, making them a strong foundation for building a secure AI model for enterprise use and significantly easing alignment with GDPR, HIPAA, SOC 2, and similar regulatory standards.

Hallucinations and unpredictable accuracy: an operational liability

Publicly available LLMs are prone to “hallucinations”: fluent, confident answers that are factually wrong. A Stanford University study of popular general-purpose LLMs found hallucination rates between 69% and 88% when the models responded to specific legal queries. In sectors like law, medicine, and finance, this is more than a statistical curiosity: plausible-sounding but incorrect AI outputs can lead directly to regulatory violations, financial loss, or harm to individuals.

While fine-tuning public models can help improve accuracy, doing so through external APIs or shared infrastructure often raises privacy and compliance concerns. Self-hosted private LLMs, by contrast, allow organizations to fine-tune models securely on proprietary datasets, which can reduce hallucination rates in the target domain and increase reliability, turning a high-risk liability into a more dependable asset.

Data leakage and IP vulnerability: protecting core competencies

The design of public LLMs inherently places enterprise intellectual property at risk of unintended exposure. As organizations begin to confront the risks AI poses to intellectual property, many are realizing how easily sensitive assets, such as customer data, internal system details, or proprietary code, can be submitted to public models without proper oversight. A recent report noted that one in 12 employee prompts contains such confidential information, often without the employee realizing it. This is just one example of the large language model risks in enterprise settings, where seemingly routine use can have significant consequences. Prompt leakage, such as employees pasting source code or confidential documents, has already led to real-world exposures and corporate bans on AI services.

In addition, data breaches tied to public LLMs often occur through misconfiguration, supplier use, or overlooked storage policies, all of which fall beyond the enterprise’s visibility. These risks threaten not only competitive differentiation but also compliance and legal standing. A key part of AI data leakage prevention is ensuring full control over the model’s infrastructure and data flows. Private enterprise LLM environments provide exactly that: you retain ownership and manage every stage of data access, storage, and processing, effectively safeguarding your critical IP from accidental or unauthorized exposure.

Vendor lock-in and dependence: strategic risks of external reliance

Public LLMs offered via API inherently tie enterprises to specific vendors, leaving them vulnerable to sudden changes in pricing, access policies, or API stability. A recent Forbes article highlights an emerging “AI pricing paradox,” noting that even as user demand grew, OpenAI’s shifting cost structures led to unpredictable budget fluctuations for many corporate users. This volatility shows how reliance on external providers can undermine operational stability and budget discipline.

By comparison, private LLMs built on open-source models allow full autonomy over infrastructure deployment and cost management. Enterprises gain the flexibility to switch models, swap service providers, or upgrade hardware, without being forced into reactive responses to external decisions. Although some dependency on vendor expertise remains (e.g., fine-tuning and infrastructure automation), the strategic control and platform independence offered by private LLMs represent a significant shift toward resilience and predictability.

Escalating costs at scale: the hidden economic trap

Public LLM APIs often appear inexpensive initially, but at scale, they can become a significant cost burden. According to CIO Dive, enterprise cloud costs rose an average of 30% over the past year, with 50% of respondents citing AI and generative models as a primary driver. These unpredictable billing fluctuations, combined with variable token charges, make financial planning challenging and often result in unplanned overages. In contrast, private LLM deployments require upfront setup investment but offer more predictable infrastructure expenses and, once usage is high and steady enough, lower per-inference costs, giving businesses greater cost transparency and long-term stability.
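To make this trade-off concrete, the sketch below computes a simple monthly break-even point between per-token API billing and a fixed self-hosted budget. Every number in it (the blended API price, the monthly infrastructure cost, the sample volumes) is a hypothetical placeholder, not a quote from any provider; substitute your own figures.

```python
"""Monthly break-even between per-token API billing and self-hosted infrastructure.
All prices are HYPOTHETICAL placeholders; replace them with your own figures."""

# Hypothetical blended API price per 1,000 tokens (input and output combined), USD.
API_PRICE_PER_1K_TOKENS = 0.01

# Hypothetical fixed monthly cost of a self-hosted deployment
# (GPU instances, storage, monitoring, amortized engineering time), USD.
PRIVATE_MONTHLY_COST = 20_000.0


def api_monthly_cost(tokens_per_month: int,
                     price_per_1k: float = API_PRICE_PER_1K_TOKENS) -> float:
    """Variable cost: grows linearly with token volume."""
    return tokens_per_month / 1_000 * price_per_1k


def break_even_tokens(private_monthly_cost: float = PRIVATE_MONTHLY_COST,
                      price_per_1k: float = API_PRICE_PER_1K_TOKENS) -> float:
    """Monthly token volume at which both options cost the same."""
    return private_monthly_cost / price_per_1k * 1_000


if __name__ == "__main__":
    print(f"Break-even at about {break_even_tokens():,.0f} tokens/month")
    for volume in (100_000_000, 1_000_000_000, 5_000_000_000):
        api = api_monthly_cost(volume)
        cheaper = "API" if api < PRIVATE_MONTHLY_COST else "self-hosted"
        print(f"{volume:>13,} tokens: API ${api:,.0f} vs self-hosted "
              f"${PRIVATE_MONTHLY_COST:,.0f} -> {cheaper} is cheaper")
```

The model is intentionally simplified: real API bills usually price input and output tokens separately, and self-hosted costs grow in steps as GPUs are added, so treat the result as a starting point for a fuller total-cost-of-ownership estimate.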

As you can see, the outlined challenges reveal significant strategic and operational vulnerabilities inherent in relying on public LLMs. The private LLM vs public LLM comparison goes beyond infrastructure choices. It’s about long-term control, risk management, and alignment with business goals.

Transitioning to private models can offer meaningful solutions: more robust security, predictable performance, IP protection, vendor independence, and cost transparency. But what exactly makes a private LLM different under the hood? And how do these differences translate into a strategic advantage for your organization? Let’s break it down.

The emergence of private large language models: how exactly do they differ from public LLMs?

As the limitations of public LLMs become clearer, many enterprises are exploring alternatives that promise more control, reliability, and alignment with business needs. While the shift toward private models is increasing, the reality is more nuanced than a simple “open source LLM vs private LLM” debate.

In practice, organizations typically weigh several implementation paths: using public models through consumer interfaces such as ChatGPT; integrating public APIs like GPT-4 into internal systems; deploying open-source LLMs with minimal customization; or fully hosting and tuning private models within their own infrastructure.

Each of these approaches comes with trade-offs in terms of control, cost, accuracy, and risk. The table below outlines the key characteristics of these options, helping you better understand how private, customized deployments compare and why many enterprises ultimately opt for them.

| Feature | Public UI access (e.g., ChatGPT, Gemini) | Public LLM via API (e.g., GPT-4 API) | Private LLM, no tuning (e.g., LLaMA 4, Mistral, Qwen3) | Private LLM, customized (e.g., LLaMA 4, Mistral, Qwen3) |
|---|---|---|---|---|
| Data control | None (data processed externally) | Partial (data still leaves organizational boundaries) | Full (self-hosted) | Full (self-hosted + tailored access control) |
| Customization | None | Prompt-only | Minimal (basic configuration only) | Full (fine-tuned on internal data) |
| Compliance | No | Depends on provider | Configurable (depends on setup) | Embedded into infrastructure and model workflows |
| Domain accuracy | Low | Moderate (some prompt tuning possible) | Moderate | High (trained on internal data) |
| Cost predictability | Fixed tiers | Variable, often escalating per-token costs | Fixed infrastructure, no tuning investment | Predictable infrastructure + tuning investment |
| Operational control | None | Low | High | Full |
| Vendor lock-in | Very high (full dependence on provider UI and model) | High (API terms, pricing, and access controlled externally) | Low (can self-host, but limited optimization flexibility) | Low (open-source flexibility, reduced platform dependence, some reliance on IT vendors if certain services are delegated) |

While this table provides a high-level comparison across LLM deployment paths, it’s essential to understand what defines private LLMs themselves. Below, we break down the core characteristics that distinguish private deployments and shape how enterprises build, secure, and manage their AI capabilities.

Controlled deployment environment

Private infrastructure addresses LLM data-handling privacy concerns directly. A private LLM can be deployed on-premises, in a hybrid setup, or within a private cloud, giving organizations full authority over data storage, movement, and access. This makes internal governance rules easier to enforce and removes reliance on external endpoints. Enterprises define their own guardrails, aligning operations with their specific LLM data privacy and security requirements.

Custom training capabilities

Private LLMs allow continuous evolution in line with business priorities. With LLM customization, enterprises can fine-tune and retrain models on proprietary datasets, embedding industry-specific language, workflows, and decision logic into results. This flexibility ensures models stay relevant as processes change or new datasets become available.

Regulatory alignment and auditability

A private large language model integrates compliance at the infrastructure level. Policies for data retention, access control, and logging are implemented natively, making every stage auditable. With enterprise AI compliance embedded into the technical design, organizations are better positioned to meet GDPR, HIPAA, SOC 2, and other frameworks without retrofitting controls after deployment.
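As an illustration of what implementing these controls natively can look like, the sketch below wraps a call to a self-hosted model with a role check, an audit record for every attempt, and a purge step that enforces a retention window. The role names, the 30-day retention period, and the `fake_model` stand-in are hypothetical; a production system would pull roles from your identity provider and write audit records to tamper-evident storage rather than an in-memory list.

```python
"""Hypothetical sketch: access control + audit trail + retention for a
self-hosted model endpoint. Names and values are illustrative only."""
import hashlib
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import Callable

# Hypothetical roles allowed to query the model; in practice this comes from IAM/SSO.
ALLOWED_ROLES = {"analyst", "legal"}
RETENTION = timedelta(days=30)  # hypothetical retention policy


@dataclass
class AuditRecord:
    timestamp: datetime
    user: str
    role: str
    allowed: bool
    prompt_sha256: str  # a hash, not the raw prompt, to limit sensitive data at rest


@dataclass
class AuditLog:
    records: list[AuditRecord] = field(default_factory=list)

    def append(self, record: AuditRecord) -> None:
        self.records.append(record)

    def purge_expired(self, now: datetime) -> int:
        """Drop records older than the retention window; return how many were removed."""
        before = len(self.records)
        self.records = [r for r in self.records if now - r.timestamp <= RETENTION]
        return before - len(self.records)


def guarded_generate(user: str, role: str, prompt: str, log: AuditLog,
                     model: Callable[[str], str]) -> str:
    """Check the caller's role, record the attempt, then call the model."""
    allowed = role in ALLOWED_ROLES
    log.append(AuditRecord(
        timestamp=datetime.now(timezone.utc),
        user=user,
        role=role,
        allowed=allowed,
        prompt_sha256=hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
    ))
    if not allowed:
        raise PermissionError(f"role {role!r} may not query the model")
    return model(prompt)


if __name__ == "__main__":
    audit_log = AuditLog()

    def fake_model(prompt: str) -> str:
        # Stand-in for a call to a locally hosted LLM.
        return f"[answer to a {len(prompt)}-character prompt]"

    print(guarded_generate("alice", "legal", "Summarize clause 4.2", audit_log, fake_model))
    try:
        guarded_generate("bob", "intern", "Show payroll data", audit_log, fake_model)
    except PermissionError as exc:
        print("denied:", exc)
    print(len(audit_log.records), "audit records kept")
```

Storing a SHA-256 hash of the prompt instead of its text is one design choice here: auditors can confirm that a known prompt was submitted without the log itself becoming another copy of sensitive data. Denied attempts are logged too, since failed access is often what an audit needs to see.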

Vendor-neutral architecture with open-source foundations

Private LLMs built on open-source models like LLaMA 4, Mistral, or Qwen3 give enterprises the freedom to choose where and how their models are deployed. Infrastructure can run on AWS, Azure, GCP, or on-premises, while tools such as Docker (containerization), Kubernetes (orchestration), and Terraform (infrastructure as code) support scalability and migration. Third-party services like Hugging Face, RunPod, or Replicate may assist with deployment, but ownership and control remain with the enterprise.

Defined cost ownership

With private LLM deployments, the organization directly manages infrastructure expenses (compute, storage, maintenance). This makes financial planning more predictable and allows resource consumption to scale with real usage patterns, avoiding the unpredictability of external billing models.

Clearly, private LLMs differ significantly from their public counterparts in critical operational dimensions: infrastructure control, customization, compliance capabilities, vendor autonomy, and cost predictability. The private AI vs public AI debate is no longer just a technical comparison; it has become a strategic conversation for enterprises seeking long-term control and value. But identifying these differences is only the first step.

To fully understand why these characteristics matter, we must examine their direct implications for enterprise strategy. How exactly do these technical distinctions translate into competitive advantages, such as safeguarding intellectual property, embedding compliance in your operations, and delivering accuracy tailored to your business needs?

These questions naturally guide us into our next exploration: the strategic benefits enterprises gain by embracing private LLM solutions.

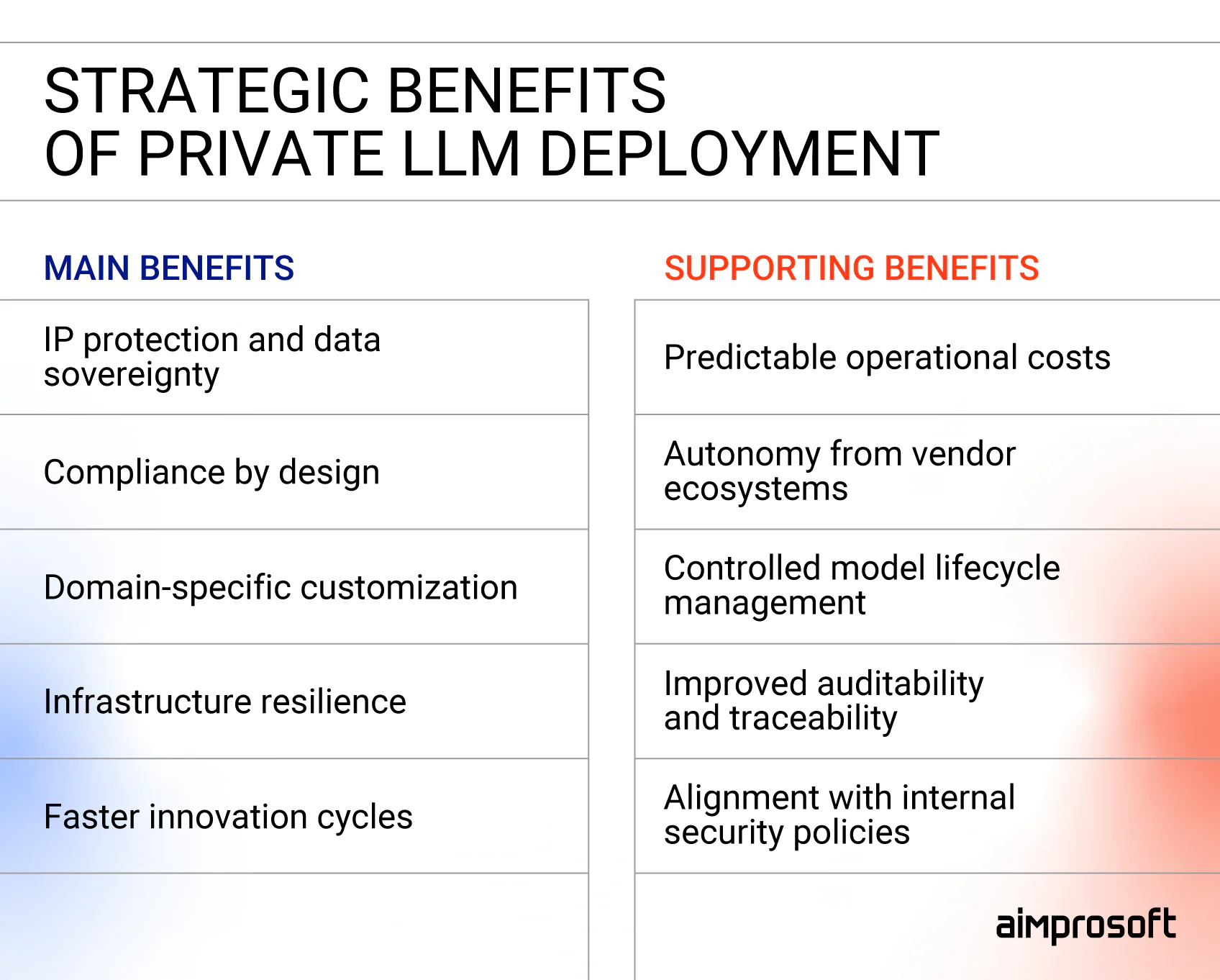

Strategic benefits: unlocking competitive advantage with private large language models

Beyond merely resolving technical or operational hurdles, private LLMs offer enterprises distinct competitive leverage, embedding critical business priorities directly into their core design.

Strategic benefits of private LLM deployment for enterprise environments

Competitive IP protection: keeping business secrets off public clouds

Intellectual property serves as the cornerstone of competitive advantage in knowledge-intensive industries, including pharmaceuticals, finance, legal, and other sectors where proprietary data is central to product development, service delivery, or market differentiation. The inherent risk with public LLM APIs lies in transmitting proprietary data into externally managed infrastructures, effectively relinquishing control and potentially exposing business-critical secrets.

Private large language model deployments fundamentally mitigate this risk by ensuring that all data and model interactions remain securely within enterprise-controlled environments, forming the foundation of a secure AI model for enterprise use. For instance, a global pharmaceutical company fine-tuning a private model to interpret drug trial data ensures proprietary formulas, methodologies, and sensitive research never leave their internal systems, directly protecting critical competitive assets and maintaining their market leadership.

Compliance by design: aligning AI deployment with GDPR, HIPAA, and SOC 2 standards

Private LLMs embed compliance controls directly into their infrastructure from day one, with retention policies, audit trails, and data anonymization designed to meet strict regulatory requirements. For healthcare providers, this means HIPAA safeguards are enforced at the core of the system; for organizations processing EU personal data, such as financial institutions serving European customers, GDPR requirements can be implemented structurally rather than retrofitted later. As a result, demonstrating adherence to regulatory frameworks becomes simpler and more reliable.

Customization and control: precision through domain-specific tuning

Private LLM development removes these customization limits by enabling fine-tuning on internal datasets. For example, law firms using privately hosted models trained on precedent-rich databases can achieve markedly better accuracy in document analysis, litigation prediction, and compliance assessments. Similarly, a diagnostic assistant powered by a privately hosted LLM (trained on anonymized patient medical records and treatment protocols) can deliver more reliable and precise medical guidance than a generic model.

Infrastructure resilience: ensuring reliable AI operations

Because you “own” the full stack, you can engineer your private LLM environment for resilience, implementing redundancy, load balancing, and disaster recovery protocols tailored to mission-critical needs. This approach minimizes downtime risk and keeps AI-driven services stable even under high demand or unexpected failures, supporting continuous business operations.

Accelerated innovation cycles and faster time-to-market

Private LLM deployments let enterprises iterate on and refine models within their controlled environment, without waiting on external providers. Teams can adjust training datasets, fine-tune models, and deploy incremental updates tailored to evolving business needs. This internal agility can considerably shorten innovation cycles. Enterprises in competitive sectors, such as fintech firms launching tailored financial advisors or retail brands deploying dynamic recommendation engines, can use it to reach the market faster and continuously refine their offerings in response to real-time market feedback.

These strategic advantages (robust IP protection, inherent compliance, and domain-specific customization) underscore the broader business rationale behind the shift towards private LLMs. Rather than merely mitigating technical challenges, private deployments position enterprises to strategically leverage AI for genuine market differentiation and operational excellence.

When private LLMs may not be the right fit

While private LLMs offer distinct strategic advantages, they are not universally the right solution. In some cases, alternative approaches may be more practical or cost-effective. Here are a few scenarios where private deployment, especially with customization, may not be the best path forward:

- Short-term experimentation or MVP development

If your team is still testing AI’s potential or building a time-limited proof of concept, the overhead of deploying and maintaining a private model may outweigh its benefits. In such cases, public APIs offer lower barriers to entry and faster iteration cycles.

- Lack of proprietary data

Fine-tuning a private model adds real value only when an organization has high-quality, domain-specific data to work with. If such data doesn’t exist or is too sparse to train meaningfully, the added complexity of private deployment may not yield noticeable returns.

- Limited in-house engineering capacity

Even with the support of an IT vendor, such as Aimprosoft, operating a private LLM, especially one hosted on-premises or in a secure VPC, requires infrastructure readiness, ongoing monitoring, and occasional retraining. If internal teams aren’t prepared to manage or maintain these systems, simpler managed solutions might be more sustainable.

- Use case does not require customization

For generic use cases (e.g., summarizing documents, generating basic marketing content, or customer FAQs), well-prompted public models or RAG setups using general-purpose APIs may provide sufficient value without the added cost and complexity of deploying a private LLM.

- Budget constraints in the early stages

While private LLMs can be more cost-effective at scale, the initial setup, infrastructure, and tuning investments may not be feasible for smaller organizations or those in early funding phases. A phased approach, starting with public APIs and migrating later, may be more appropriate.

Private LLMs offer powerful advantages in the right context: control, compliance, domain precision, and long-term scalability. But like any enterprise technology, the value depends on how well the solution matches your organization’s data maturity, infrastructure readiness, and long-term goals. The decision to adopt a private model shouldn’t hinge solely on the technology. It should align with your company’s broader strategic and operational realities.

That’s where the role of an experienced vendor becomes critical. In the next section, we explore how implementation paths differ and what kind of expertise enterprises need at each stage of the journey.

The vendor’s role: why expertise matters in private LLM deployment

While the strategic advantages of private LLMs are increasingly clear, the path to implementing them is rarely uniform. Some organizations begin with experimentation through public APIs, while others invest in long-term infrastructure and fine-tuned models. The complexity varies, but so does the value.

As an AI-focused IT vendor, our role extends beyond deployment alone. We help organizations evaluate the right model for their needs, build scalable infrastructure, and ensure the solution fits within compliance and operational boundaries. From lightweight pilots to advanced enterprise-grade systems, you can turn to vendors like Aimprosoft not just for technical execution, but for strategic clarity.

Below, we break down how vendor involvement plays out across the four most common paths enterprises take when adopting LLMs.

Option 1: Public LLM via UI (e.g., ChatGPT, Gemini)

This is where many companies begin. Using tools like ChatGPT through a web interface offers a quick way to explore AI potential with zero infrastructure requirements. It’s a low-barrier entry point, ideal for testing use cases, experimenting with prompts, or generating internal content. However, its simplicity comes with major limitations: no data control, no customization, and very little enterprise integration potential.

Where vendors are involved: In most cases, they’re not. This phase is typically handled internally, and enterprises quickly outgrow it.

When to choose this path:

- Early experimentation and ideation

- Zero integration or compliance needs

- Low stakes, non-sensitive use cases

Option 2: Public LLM via API (e.g., GPT-4, Claude)

As businesses become more comfortable with LLMs, many transition to API-based access. This allows them to integrate models into internal systems and workflows, often for chatbots, knowledge bases, or data extraction tools. The public model remains unchanged, but now it’s wrapped in infrastructure and logic. However, vendor lock-in, escalating token costs, and data compliance risks remain.

How we help:

- API integration into internal tools and workflows

- Prompt architecture and fallback logic

- Wrapping APIs with service layers for modularity

- Setting up guardrails, logging, and usage monitoring

When to choose this path:

- MVPs or time-limited pilots

- Business logic is more important than accuracy

- Risk is acceptable, but cost and control are becoming issues
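The service-layer, fallback, and usage-monitoring items listed for this option can be sketched as a thin gateway that tries providers in priority order and tallies usage per provider. The provider functions are hypothetical stand-ins; real code would call each vendor’s official SDK, and the whitespace-based word count is a rough assumption, not how any provider actually counts billable tokens.

```python
"""Hypothetical sketch of a provider-agnostic service layer with fallback
and usage logging. Provider functions are stand-ins, not real SDK calls."""
import logging
from dataclasses import dataclass
from typing import Callable

logger = logging.getLogger("llm_gateway")


@dataclass
class Provider:
    name: str
    complete: Callable[[str], str]  # takes a prompt, returns completion text


class LLMGateway:
    """Single entry point that hides which vendor actually served a request."""

    def __init__(self, providers: list[Provider]) -> None:
        if not providers:
            raise ValueError("at least one provider is required")
        self.providers = providers
        self.usage: dict[str, int] = {p.name: 0 for p in providers}

    def complete(self, prompt: str) -> str:
        errors = []
        for provider in self.providers:  # try providers in priority order
            try:
                text = provider.complete(prompt)
            except Exception as exc:  # any provider failure triggers fallback
                logger.warning("provider %s failed: %s", provider.name, exc)
                errors.append(f"{provider.name}: {exc}")
                continue
            # Rough usage estimate (assumption): whitespace-separated words, not billed tokens.
            self.usage[provider.name] += len(prompt.split()) + len(text.split())
            return text
        raise RuntimeError("all providers failed: " + "; ".join(errors))


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)

    def flaky_primary(prompt: str) -> str:
        raise TimeoutError("simulated timeout")

    def backup(prompt: str) -> str:
        return "backup answer"

    gateway = LLMGateway([Provider("primary", flaky_primary), Provider("backup", backup)])
    print(gateway.complete("Classify this support ticket"))
    print(gateway.usage)
```

Because callers only see `LLMGateway.complete`, replacing a public API with a self-hosted endpoint later means registering a new `Provider` rather than rewriting every integration, which is one practical way to soften the vendor lock-in this option carries.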

Option 3: Private LLM (no tuning)

This marks a strategic shift. Here, companies deploy open-source models (like LLaMA 4 or Qwen3) within their own infrastructure but do not fine-tune them. It’s a step up in control and data security, offering full sovereignty over hosting, latency, and compliance setup. The trade-off? Accuracy is limited to what the base model can do, and business context must be supplied through careful prompt engineering or RAG (retrieval-augmented generation).

How we help:

- Model selection guidance (license terms, performance, hardware fit)

- Deployment setup: Docker, Kubernetes, GPU provisioning

- Security layers: IAM, encryption, data access auditing

- RAG pipeline implementation: connecting to internal knowledge bases

- Monitoring and observability: dashboards, performance logs, alerts

When to choose this path:

- Internal teams want maximum data control but aren’t ready for full tuning

- Domain accuracy isn’t mission-critical

- Organizations are validating private LLM use cases before further investment
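The RAG step mentioned for this option can be illustrated with a deliberately small sketch: score internal documents against the question, keep the top matches, and prepend them to the prompt sent to the self-hosted model. It swaps in simple bag-of-words cosine similarity for the embedding model and vector database a real pipeline would use, and the sample documents are invented for illustration.

```python
"""Minimal RAG sketch: bag-of-words retrieval + prompt assembly.
Real pipelines use an embedding model and a vector store instead."""
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercase word counts; a crude stand-in for an embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, docs: dict[str, str], k: int = 2) -> list[str]:
    """Return the ids of the k documents most similar to the question."""
    q = tokenize(question)
    ranked = sorted(docs, key=lambda doc_id: cosine(q, tokenize(docs[doc_id])), reverse=True)
    return ranked[:k]


def build_prompt(question: str, docs: dict[str, str], k: int = 2) -> str:
    """Ground the model in retrieved internal context before it answers."""
    context = "\n".join(f"[{doc_id}] {docs[doc_id]}" for doc_id in retrieve(question, docs, k))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )


if __name__ == "__main__":
    # Invented sample knowledge base (hypothetical internal documents).
    kb = {
        "hr-07": "Employees accrue 25 vacation days per year.",
        "it-12": "VPN access requires hardware tokens issued by IT.",
        "fin-03": "Expense reports are due within 30 days of purchase.",
    }
    print(build_prompt("How many vacation days do employees get?", kb, k=1))
```

Nothing in this flow touches the model weights, which is why RAG pairs naturally with an untuned private deployment; upgrading to a real embedding model would change `tokenize` and `cosine`, not the overall structure.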

Option 4: Private LLM (customized & fine-tuned)

For organizations that demand high precision, reliability, and domain alignment, custom LLM development is the gold standard. Using the same open-source models as in Option 3, these systems are fine-tuned on proprietary data, enabling the LLM to understand internal terminology, processes, and context with substantially better performance in that domain. It’s not a fast path, but it’s the most strategic long-term approach for enterprises where LLM output directly affects customers, compliance, or revenue.

How we help:

- All services from Option 3, plus:

- Proprietary data processing: cleaning, labeling, formatting, anonymizing

- Fine-tuning pipeline setup

- Running evaluations against custom benchmarks

- Model versioning and rollback management

- Continuous tuning workflows for evolving data

- Compliance-focused architecture reviews and documentation support

When to choose this path:

- Your LLM use cases require high domain accuracy or regulatory compliance

- IP or customer trust is at stake

- The business seeks long-term cost savings over public APIs

- The LLM will evolve as part of the internal knowledge infrastructure
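The proprietary data processing step for this option typically ends in a training file. The sketch below masks a few obvious identifiers with regular expressions and serializes instruction/response pairs as JSON Lines. The field names (`instruction`, `response`), the US-style patterns, and the sample record are assumptions for illustration: the expected format depends on your fine-tuning framework, and regex masking alone is not sufficient anonymization for regulated data.

```python
"""Hypothetical fine-tuning data prep: regex masking + JSONL export.
Field names and patterns are illustrative; adapt them to your training stack."""
import json
import re

# Illustrative patterns only; real anonymization needs PII detection plus human review.
# Order matters: more specific patterns run first.
PATTERNS = [
    (re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"), "[EMAIL]"),
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "[SSN]"),                # US SSN-style
    (re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"), "[PHONE]"),    # US phone-style
]


def mask(text: str) -> str:
    """Replace every match of each pattern with its placeholder."""
    for pattern, placeholder in PATTERNS:
        text = pattern.sub(placeholder, text)
    return text


def to_jsonl(pairs: list[tuple[str, str]]) -> str:
    """Serialize masked (instruction, response) pairs, one JSON object per line."""
    lines = [
        json.dumps({"instruction": mask(q), "response": mask(a)}, ensure_ascii=False)
        for q, a in pairs
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    sample = [
        ("Draft a reply to jane.doe@example.com about claim 123-45-6789.",
         "Dear customer, please call 555-123-4567 to discuss your claim."),
    ]
    print(to_jsonl(sample), end="")
```

Printing keeps the example free of side effects; in practice you would write the output to a `.jsonl` file and pass it to your fine-tuning pipeline, ideally after a manual review of a sample of masked records.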

No matter which path you take (API experimentation, secure hosting, or full-scale customization), the role of a capable vendor is to reduce complexity, align infrastructure with strategic goals, and ensure the end result is both functional and future-ready. The real value isn’t in building models from scratch. It’s in making open-source intelligence work safely and effectively inside the enterprise.

In our next article, we’ll dive into selecting the right model and infrastructure setup for your specific context, balancing speed, scalability, and business fit.

Still unsure if your business needs a private LLM?

By now, we’ve covered the most common LLM deployment paths, examined their trade-offs, and outlined where a vendor can help. If you’re still weighing whether a private LLM is the right fit, the LLM deployment checklist below will help clarify that decision. It covers the essentials, including what questions to ask before adopting a private LLM, so you can assess your business priorities, technical constraints, and long-term goals with greater clarity.

Checklist: do you need a private LLM?

1. Data sensitivity and ownership

- Do you handle sensitive data such as customer records, medical information, contracts, or source code?

- Would exposing this data to third-party APIs create compliance or reputational risks?

- Do you require complete control over where and how your data is stored and processed?

2. Compliance and audit readiness

- Are you subject to GDPR, HIPAA, SOC 2, or industry-specific compliance standards?

- Do you need to demonstrate traceability and data handling policies during audits?

3. Domain specificity

- Do public LLMs often produce vague or inaccurate answers in your area of expertise?

- Would a model trained on your internal knowledge improve reliability or performance?

4. Infrastructure and control

- Do you require control over deployment environments (e.g., on-prem, VPC, hybrid cloud)?

- Do you need to integrate the model with internal systems or secure environments?

5. Cost predictability

- Are you concerned about unpredictable or escalating API costs?

- Would you prefer upfront infrastructure investment over long-term variable billing?

If you answered “yes” to several of these questions, it’s likely that a private LLM would provide more control, accuracy, and long-term value than a public alternative.

Wrapping up

The decision to move toward a private LLM is rarely made in isolation. It usually emerges after a series of growing realizations about the limitations of public tools, the risks of external dependencies, and the need for something more secure, more tailored, and ultimately more aligned with the way your business actually operates.

Private LLMs are not about chasing trends. They’re about designing solutions around what matters: control over sensitive data, confidence in compliance, the precision of domain-specific intelligence, and the ability to adapt without relying on someone else’s roadmap.

As the AI landscape matures, so do the questions that matter. And the most important one is no longer “Can we use AI?” It’s “Can we trust the way we’re using it?” Private deployment doesn’t guarantee trust. But it creates the conditions for it, on your terms, in your environment, and for your business. If you’re navigating that decision now and want to talk through it, contact us to explore the right next step for your team.

FAQ

Should I use a public LLM or build a private one?

It depends on your business priorities. Public LLMs are easy to start with, but they offer limited control, raise data privacy concerns, and come with unpredictable costs at scale. Private LLMs, especially those built on open-source models, give you more control over infrastructure, data handling, and customization. If your use case involves sensitive information, compliance requirements, or long-term cost efficiency, private deployment is usually the better fit.

What’s better for internal data: open-source or private LLM?

They’re not mutually exclusive. Most private LLM deployments use open-source models like LLaMA 4 or Qwen3 as the foundation. The key difference is how and where they’re deployed. Hosting an open-source model privately (on your own infrastructure, with your own access controls) keeps internal data protected and inside your environment. This makes private deployments based on open-source models a strong option for handling internal or sensitive data.

Are private LLMs better for GDPR or HIPAA compliance?

Private LLMs allow you to control where data is stored, how it’s accessed, and what is retained, making it much easier to enforce compliance with GDPR, HIPAA, SOC 2, and other regulatory standards. Public LLM APIs, by contrast, often lack transparency in data handling and retention, leaving businesses with compliance gaps they can’t fully audit or control.

Can private LLMs be customized for my business data?

One of the biggest advantages of private LLMs is the ability to fine-tune or extend models using proprietary datasets, internal documents, and domain-specific language, without exposing sensitive information to external providers. While public LLMs can also be fine-tuned, doing so through third-party APIs often limits data control and compliance guarantees. Private deployment ensures that customization happens securely within your infrastructure, resulting in higher accuracy, stronger data governance, and outputs that reflect how your business actually operates.

How do private LLMs integrate with internal systems?

Private large language models are typically deployed within your own infrastructure or cloud environment, which makes them highly flexible for integration. They can connect to internal APIs, databases, knowledge bases, and authentication systems. With proper orchestration, they can support workflows across HR, legal, finance, customer service, and other departments, while maintaining compliance with your internal security and access policies.